Chapter 6 Binormal model

6.2 Introduction

The equal variance binormal model was described in Chapter 2. The ratings method of acquiring ROC data and calculation of operating points was discussed in Chapter 4. It was shown there that for a clinical dataset the unequal-variance binormal model visually fitted the data better than the equal-variance binormal model.

This chapter deals with the unequal-variance binormal model, often abbreviated to binormal model. It is applicable to univariate datasets in which there is one rating per case, as in a single observer interpreting cases, one at a time, in a single modality. By convention the qualifier “univariate” is often omitted. In Chapter 10 a bivariate model will be described where each case yields two ratings, as in a single observer interpreting cases in two modalities, or the similar problem of two observers interpreting the same cases in a single modality, but this is not the focus of this chapter.

6.3 Binormal model

The binormal model is defined by (capital letters indicate random variables lower-case are realized values and \(t\) denotes the truth state):

\[\begin{equation} \left. \begin{aligned} Z_{k_tt} \sim &N\left ( \mu_t,\sigma_{t}^{2} \right )\\ t&=1,2 \end{aligned} \right \} \tag{6.1} \end{equation}\]

where

\[\begin{equation} \left. \begin{aligned} \mu_1=&0\\ \mu_2=&\mu\\ \sigma_{1}^{2}=&1\\ \sigma_{2}^{2}=&\sigma^{2} \end{aligned} \right \} \tag{6.2} \end{equation}\]

Eqn. (6.1) states that the z-samples for non-diseased cases (\(t = 1\)) are distributed as a \(N(0,1)\) distribution, i.e., the unit normal distribution, while the z-samples for diseased cases (\(t = 2\)) are distributed as a \(N(\mu,\sigma^2)\) distribution, i.e., a normal distribution with mean \(\mu\) and variance \(\sigma^2\). In the unequal-variance binormal model, the variance \(\sigma^2\) of the z-samples for diseased cases is allowed to be different from unity. Most ROC datasets are consistent with \(\sigma > 1\).9

6.3.1 Binned data

In an R-rating ROC study the observed ratings \(r\) take on integer values 1 through \(R\) it being understood that higher ratings correspond to greater confidence for presence of disease. Define \(R-1\) ordered cutoffs \(\zeta_i\) where \(i=1,2,...,R-1\) and \(\zeta_1 < \zeta_2,...< \zeta_{R-1}\). Also define two dummy cutoffs \(\zeta_0 = -\infty\) and \(\zeta_R = +\infty\). The binning rule for a case with realized z-sample z is (Chapter 4, Eqn. (4.4)):

\[\begin{equation} \left. \begin{aligned} \text{if} \left (\zeta_{r-1} \le z < \zeta_r \right )&\Rightarrow \text{rating} = r\\ &r = 1, 2, ..., R \end{aligned} \right \} \tag{6.3} \end{equation}\]

#> Warning: A numeric `legend.position` argument in `theme()` was deprecated in ggplot2

#> 3.5.0.

#> ℹ Please use the `legend.position.inside` argument of `theme()` instead.

#> This warning is displayed once every 8 hours.

#> Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

#> generated.

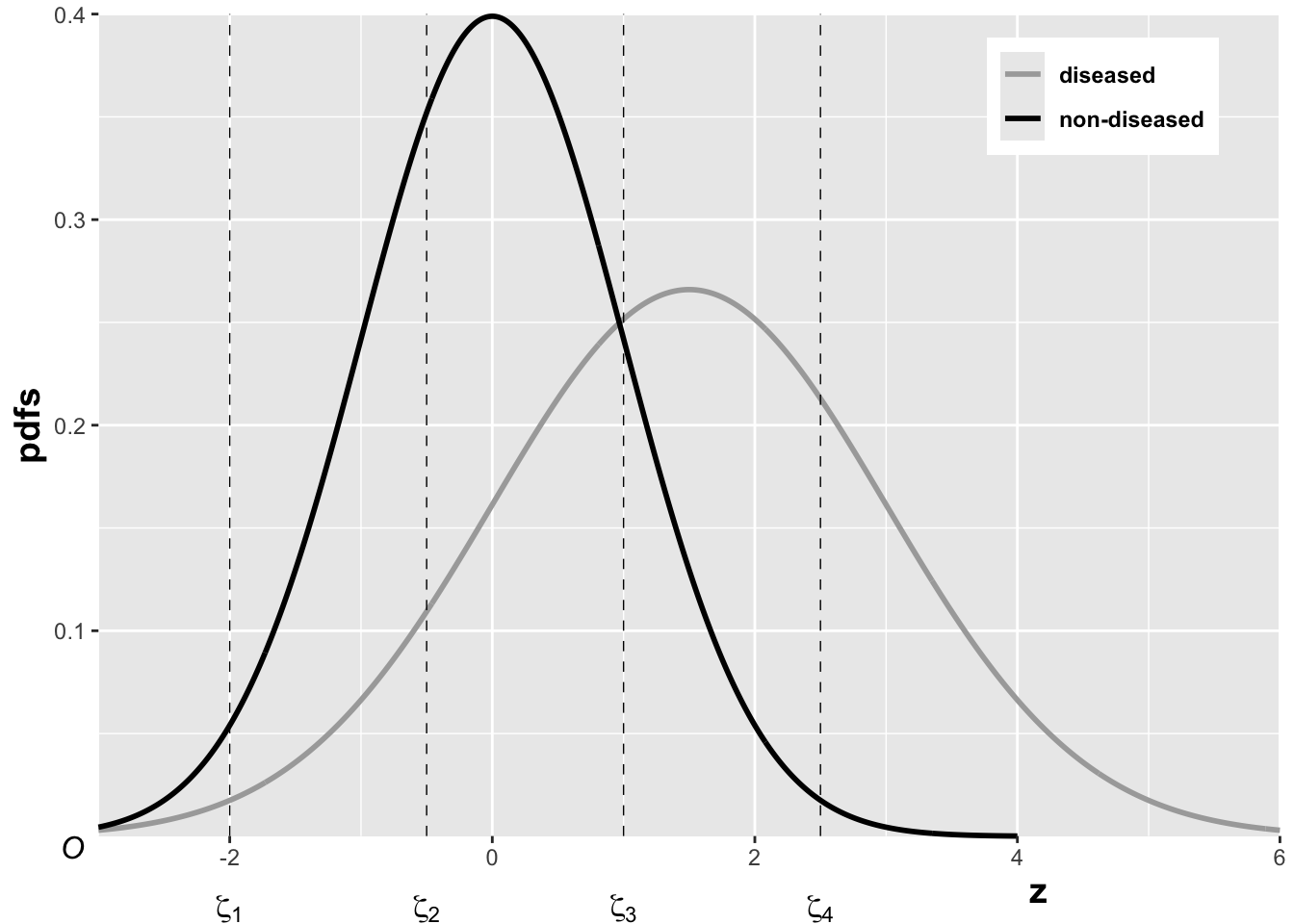

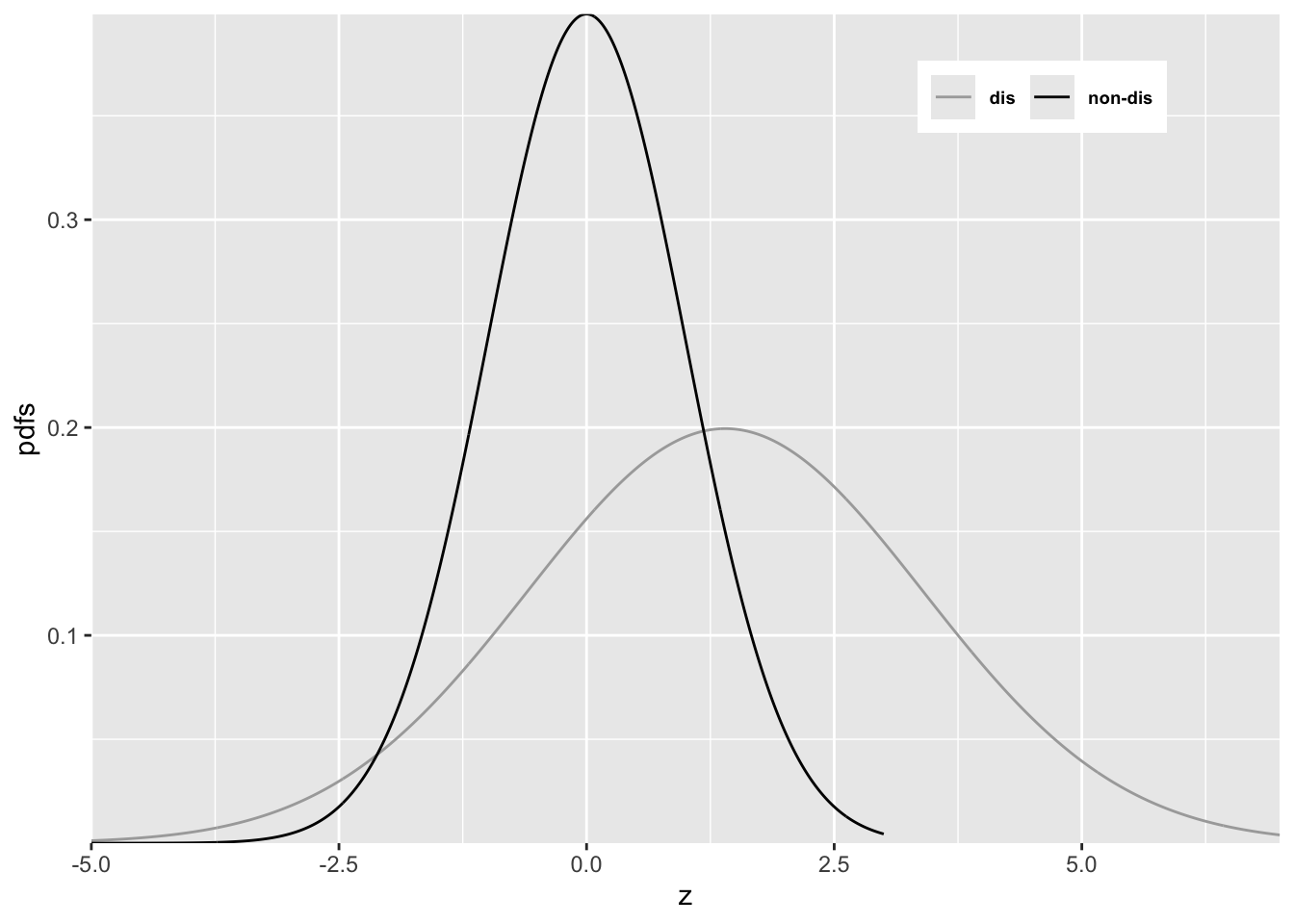

FIGURE 6.1: The pdfs of the two binormal model distributions for \(\mu = 1.5\) and \(\sigma = 1.5\). Four thresholds \(\zeta_1, \zeta_2, \zeta_3, \zeta_4\) are shown corresponding to a five-rating ROC study. The rating assigned to a case is determined by its z-sample according to the binning rule.

The above figure, generated with \(\mu = 1.5\), \(\sigma = 1.5\), \(\zeta_1 = -2\), \(\zeta_2 = -0.5\), \(\zeta_3 = 1\) and \(\zeta_4 = 2.5\), illustrates how realized z-samples are converted to ratings, i.e., application of the binning rule (6.3). For example, a case with z-sample equal to -2.5 would be rated “1”, and one with z-sample equal to -1 would be rated “2”, cases with z-samples greater than 2.5 would be rated “5”.

6.3.2 Sensitivity and specificity

Let \(Z_t\) denote the random z-sample for truth state \(t\) (\(t\) = 1 for non-diseased and \(t\) = 2 for diseased cases). Since the distribution of z-samples from disease-free cases is \(N(0,1)\), the expression for specificity in Chapter 3 applies:

\[\begin{equation} \text{Sp}\left ( \zeta \right )=P\left ( Z_1 < \zeta \right )=\Phi\left ( \zeta \right ) \tag{6.4} \end{equation}\]

To obtain an expression for sensitivity, consider that for truth state \(t = 2\), the random variable \(\frac{Z_2-\mu}{\sigma}\) is distributed as \(N(0,1)\):

\[\begin{equation*} \frac{Z_2-\mu}{\sigma}\sim N\left ( 0,1 \right ) \end{equation*}\]

Sensitivity, abbreviated to \(\text{Se}\), is defined by \(\text{Se} \equiv P\left ( Z_2 > \zeta \right )\). It follows, because \(\sigma\) is positive, that:

\[\begin{equation*} \text{Se}\left ( \zeta | \mu, \sigma \right ) = P\left ( \frac{Z_2-\mu}{\sigma} > \frac{\zeta-\mu}{\sigma} \right ) \end{equation*}\]

The right-hand-side can be rewritten as follows:

\[\begin{equation} \left. \begin{aligned} \text{Se}\left ( \zeta | \mu, \sigma \right )&= 1 - P\left ( \frac{Z_2-\mu}{\sigma} \leq \frac{\zeta-\mu}{\sigma} \right )\\ &=1-\Phi\left ( \frac{\zeta-\mu}{\sigma}\right )=\Phi\left ( \frac{\mu-\zeta}{\sigma}\right ) \end{aligned} \right \} \tag{6.5} \end{equation}\]

Summarizing, the formulae for the specificity and sensitivity for the binormal model are:

\[\begin{equation} \left. \begin{aligned} \text{Sp}\left ( \zeta \right ) &= \Phi\left ( \zeta \right )\\ \text{Se}\left ( \zeta | \mu, \sigma \right ) &= \Phi\left ( \frac{\mu-\zeta}{\sigma}\right ) \end{aligned} \right \} \tag{6.6} \end{equation}\]

The coordinates of the operating point defined by \(\zeta\) are given by:

\[\begin{equation} \left. \begin{aligned} \text{FPF}\left ( \zeta \right ) &= 1 - \text{Sp}\left ( \zeta \right ) \\ &= 1 - \Phi\left ( \zeta \right ) \\ &= \Phi\left ( -\zeta \right ) \end{aligned} \right \} \tag{6.7} \end{equation}\]

\[\begin{equation} \text{TPF}\left ( \zeta | \mu, \sigma \right ) = \Phi\left ( \frac{\mu-\zeta}{\sigma} \right ) \tag{6.8} \end{equation}\]

An equation for a curve is usually expressed as \(y=f(x)\). An expression of this form for the ROC curve, i.e., the y-coordinate (TPF) expressed as a function of the x-coordinate (FPF), follows upon inversion of the expression for FPF, Eqn. (6.7):

\[\begin{equation} \zeta = -\Phi^{-1}\left ( \text{FPF} \right ) \tag{6.9} \end{equation}\]

Substitution of Eqn. (6.9) in Eqn. (6.8) yields:

\[\begin{equation} \text{TPF} = \Phi\left ( \frac{\mu + \Phi^{-1}\left (\text{FPF} \right )}{\sigma} \right ) \tag{6.10} \end{equation}\]

This equation will be put into conventional notation next.

6.3.3 Binormal model in conventional notation

The \((\mu,\sigma)\) notation makes sense when extending the binormal model to newer models described later, see Chapter 7. However, it was not the way the binormal model was originally parameterized. Instead the following notation is widely used in the literature:

\[\begin{equation} \left. \begin{aligned} a&=\frac{\mu}{\sigma}\\ b&=\frac{1}{\sigma} \end{aligned} \right \} \tag{6.11} \end{equation}\]

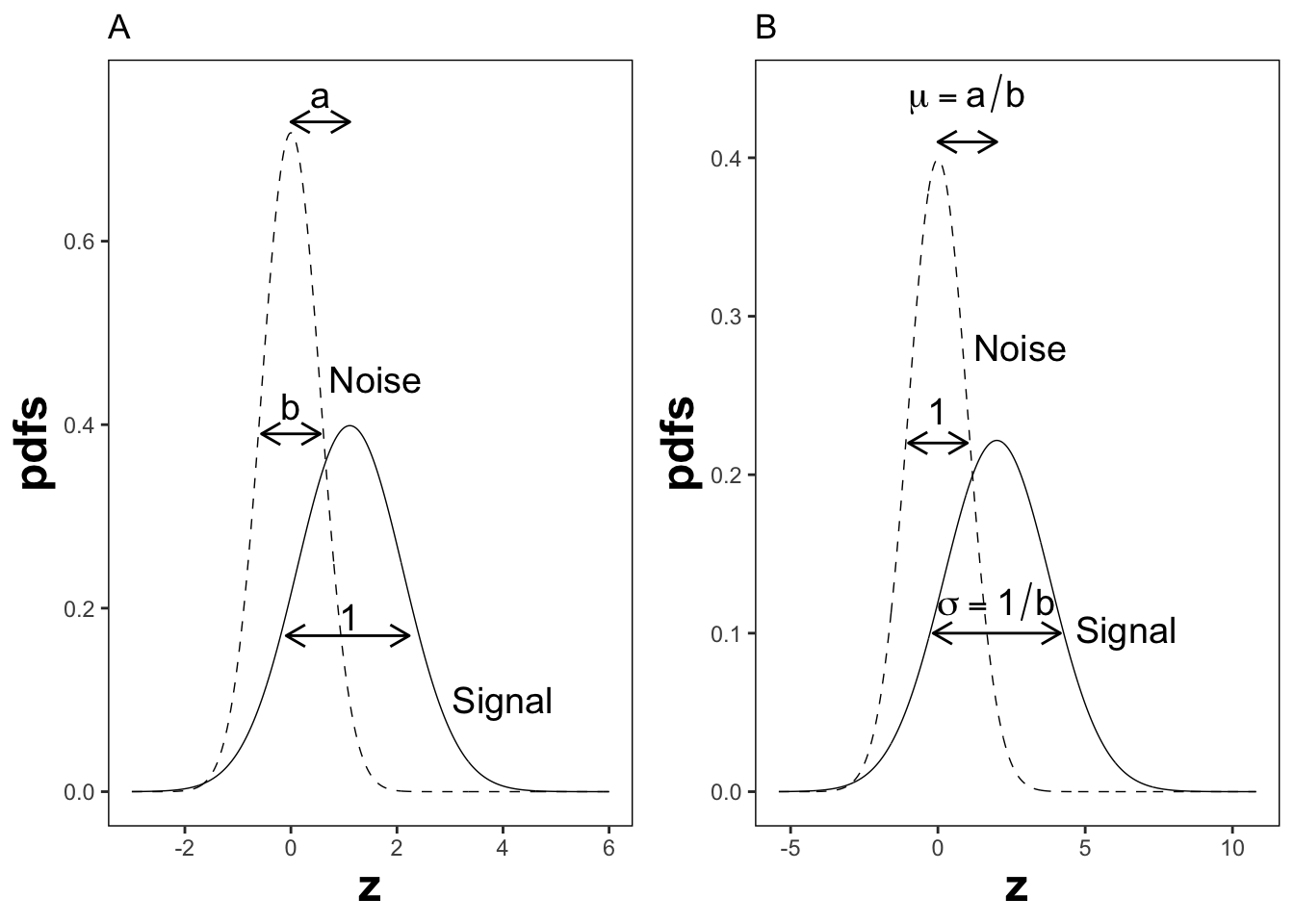

The reason for the \((a,b)\) instead of the \((\mu,\sigma)\) notation is historical. (Dorfman and Alf Jr 1969) assumed that the diseased distribution had unit variance, and the non-diseased distribution had standard deviation \(b\) and their separation was \(a\), see Plot A in Fig. 6.2.

By dividing the z-samples by \(b\), the variance of the distribution labeled “Noise” becomes unity, its mean stays at zero, and the variance of the distribution labeled “Signal” becomes \(1/b\), and its mean becomes \(a/b\), see plot B. Accordingly the inverses of Eqn. (6.11) are:

\[\begin{equation} \left. \begin{aligned} \mu&=\frac{a}{b}\\ \sigma&=\frac{1}{b} \end{aligned} \right \} \tag{6.12} \end{equation}\]

Eqns. (6.11) and (6.12) allow conversion from one notation to another.

FIGURE 6.2: Plot A shows the definitions of the (a,b) parameters of the binormal model. In plot B the x-axis has been rescaled so that the noise distribution has unit variance; this illustrates the difference between the (a,b) and the (\(\mu,\sigma\)) parameters. In this figure \(\mu = 2\) and \(\sigma = 1.8\) which correspond to \(a = 1.11\) and \(b = 0.556\).

6.4 ROC curve

Using the \((a,b)\) notation, Eqn. (6.10) for the ROC curve reduces to:

\[\begin{equation} \text{TPF}\left ( \text{FPF} \right ) = \Phi\left ( a+ b \Phi^{-1}\left (\text{FPF} \right ) \right ) \tag{6.13} \end{equation}\]

Since \(\Phi^{-1}(\text{FPF})\) is an increasing function of its argument \(\text{FPF}\), and \(b > 0\), the argument of the \(\Phi\) function is an increasing function of \(\text{FPF}\). Since \(\Phi\) is a monotonically increasing function of its argument, \(\text{TPF}\) is a monotonically increasing function of \(\text{FPF}\). This is true regardless of the sign of \(a\). If \(\text{FPF} = 0\), then \(\Phi^{-1}(0) = -\infty\) and \(\text{TPF} = 0\). If \(\text{FPF} = 1\), then \(\Phi^{-1}(1) = +\infty\) and \(\text{TPF} = 1\). Regardless of the value of \(a\), as long as \(b \ge 0\), the ROC curve starts at (0,0) and increases monotonically to (1,1).

From Eqn. (6.7) and Eqn. (6.8), the expressions for \(\text{FPF}\) and \(\text{TPF}\) in terms of model parameters \((a,b)\) are:

\[\begin{equation} \left. \begin{aligned} \text{FPF}\left ( \zeta \right ) &= \Phi\left ( -\zeta \right )\\ \text{TPF}\left (\zeta | a,b \right ) &= \Phi\left ( a - b \zeta \right ) \end{aligned} \right \} \tag{6.14} \end{equation}\]

Solve for \(\zeta\) from the equation for FPF:

\[\begin{equation} \zeta = - \Phi^{-1}\left ( \text{FPF} \right ) \tag{6.15} \end{equation}\]

6.5 Density functions

According to Eqn. (6.1) the probability that a non-diseased case z-sample is smaller than \(\zeta\), i.e., the cumulative distribution function (CDF) function for non-diseased cases, is:

\[\begin{equation*} P\left ( Z \le \zeta \mid Z\sim N\left ( 0,1 \right ) \right ) = 1-FPF\left ( \zeta \right ) = \Phi \left ( \zeta \right ) \end{equation*}\]

Likewise, the CDF for diseased case z-samples is:

\[\begin{equation*} P\left ( Z \le \zeta \mid Z\sim N\left ( \mu,\sigma^2 \right ) \right ) = 1-\text{TPF}\left ( \zeta \right ) = \Phi \left ( \frac{\zeta - \mu}{\sigma} \right ) \end{equation*}\]

Since the pdf is the derivative of the corresponding CDF function, it follows that (the superscripts N and D denote non-diseased and diseased cases, respectively):

\[\begin{equation} \left. \begin{aligned} pdf_N\left ( \zeta \right ) &= \frac{\partial \Phi\left ( \zeta \right )}{\partial \zeta} \\ &= \phi\left ( \zeta \right ) \\ &\equiv \frac{1}{\sqrt{2 \pi}}\exp\left ( -\frac{\zeta^2}{2} \right ) \end{aligned} \right \} \tag{6.16} \end{equation}\]

\[\begin{equation} \left. \begin{aligned} pdf_D\left ( \zeta \right ) &= \frac{\partial \Phi\left ( \frac{\zeta - \mu}{\sigma} \right )}{\partial \zeta} \\ &= \frac{1}{\sigma} \phi\left ( \frac{\zeta - \mu}{\sigma} \right ) \\ &\equiv \frac{1}{\sqrt{2 \pi}\sigma}\exp\left ( -\frac{\left (\zeta-\mu \right )^2}{2\sigma} \right ) \end{aligned} \right \} \tag{6.17} \end{equation}\]

The second equation can be written in \((a,b)\) notation as:

\[\begin{equation} \left. \begin{aligned} pdf_D\left ( \zeta \right ) &= b\phi\left ( b\zeta-a \right ) \\ &= \frac{b}{\sqrt{2 \pi}}\exp\left ( -\frac{\left (b\zeta - a \right )^2}{2} \right ) \end{aligned} \right \} \tag{6.18} \end{equation}\]

6.6 Invariance property of pdfs

The binormal model is not as restrictive as might appear at first sight. Any monotone increasing transformation \(Y=f(Z)\) applied to the observed z-samples, and the associated thresholds, will yield the same observed data, e.g., Table 4.1. This is because such a transformation leaves the ordering of the ratings unaltered and hence results in the same operating points. While the distributions for \(Y\) will not be binormal (i.e., two independent normal distributions), one can safely “pretend” that one is still dealing with an underlying binormal model. An alternative way of stating this is that any pair of distributions is allowed as long as they are reducible to a binormal model form by a monotonic increasing transformation of Y: e.g., \(Z=f^{-1}\). [If \(f\) is a monotone increasing function of its argument, so is \(f^{-1}\)}.] For this reason, the term “pair of latent underlying normal distributions” is sometimes used to describe the binormal model. The robustness of the binormal model has been investigated (Hanley 1988; Dorfman et al. 1997). The referenced paper by Dorfman et al has an excellent discussion of the robustness of the binormal model.

The robustness of the binormal model, i.e., the flexibility allowed by the infinite choices of monotonic increasing functions, application of each of which leaves the ordering of the data unaltered, is widely misunderstood. The non-Gaussian appearance of histograms of ratings in ROC studies can lead one to incorrect conclusions that the binormal model is inapplicable to these datasets. To quote a reviewer of one of my recent papers:

I have had multiple encounters with statisticians who do not understand this difference…. They show me histograms of data, and tell me that the data is obviously not normal, therefore the binormal model should not be used.

The reviewer is correct. The misconception is illustrated next.

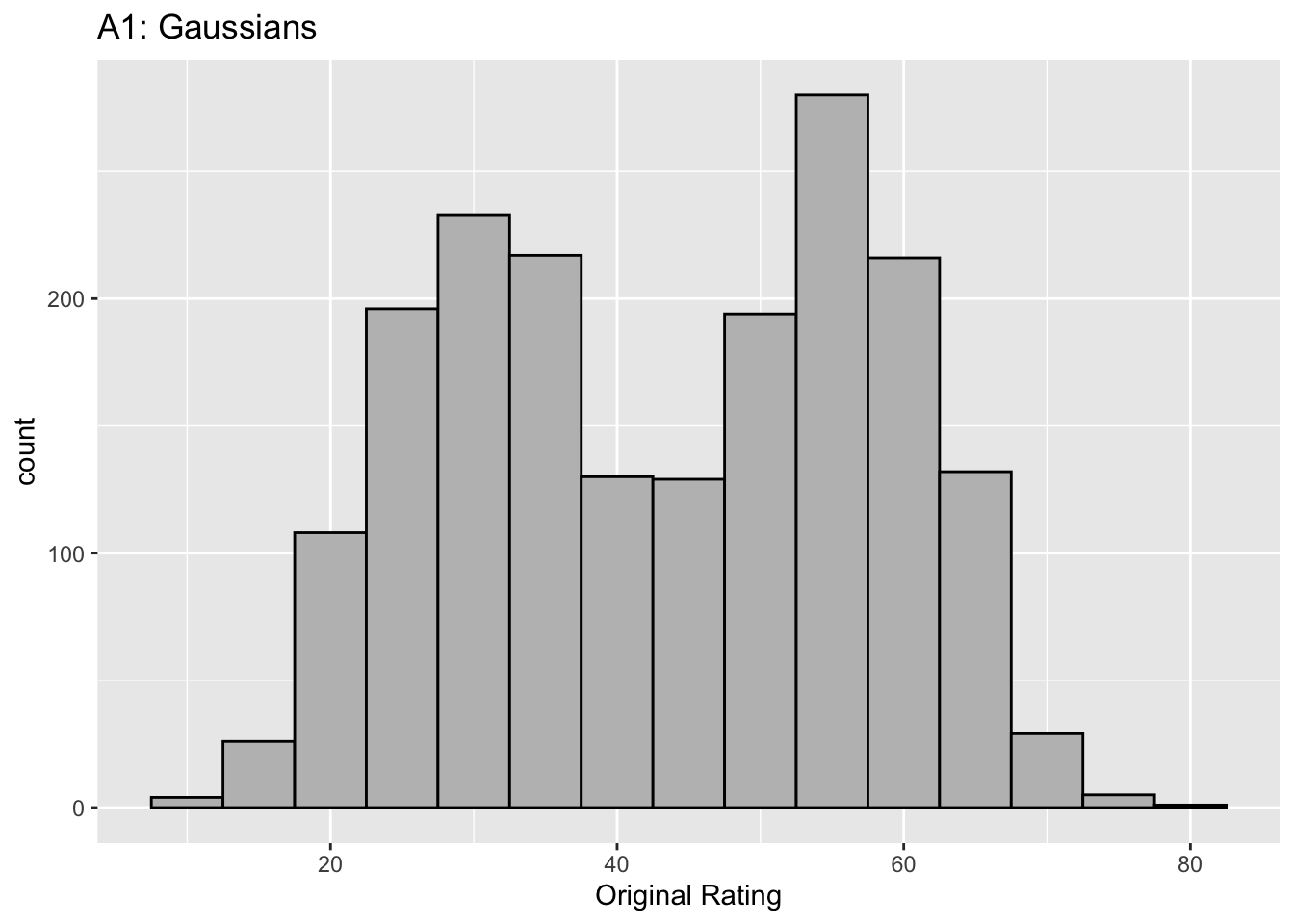

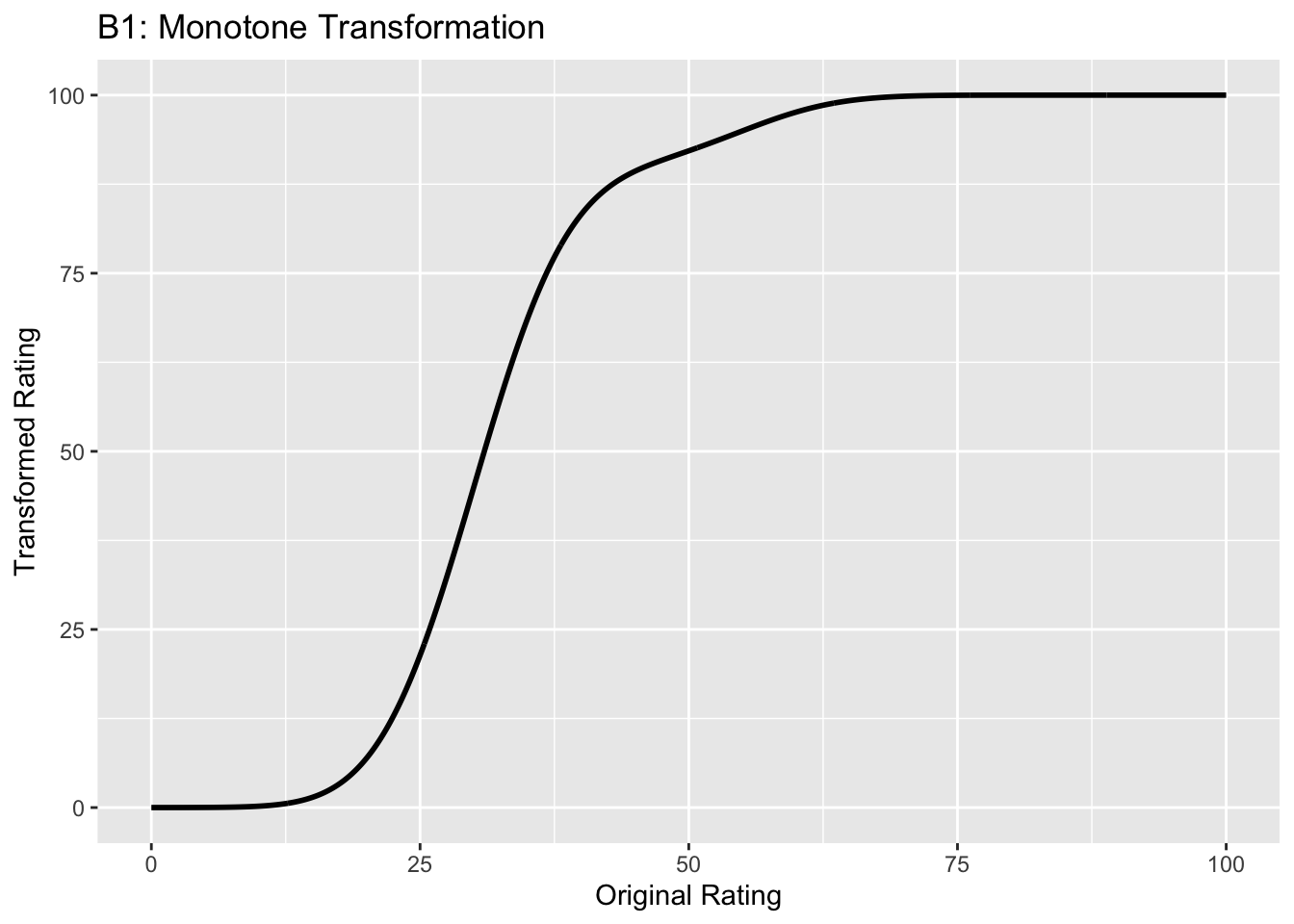

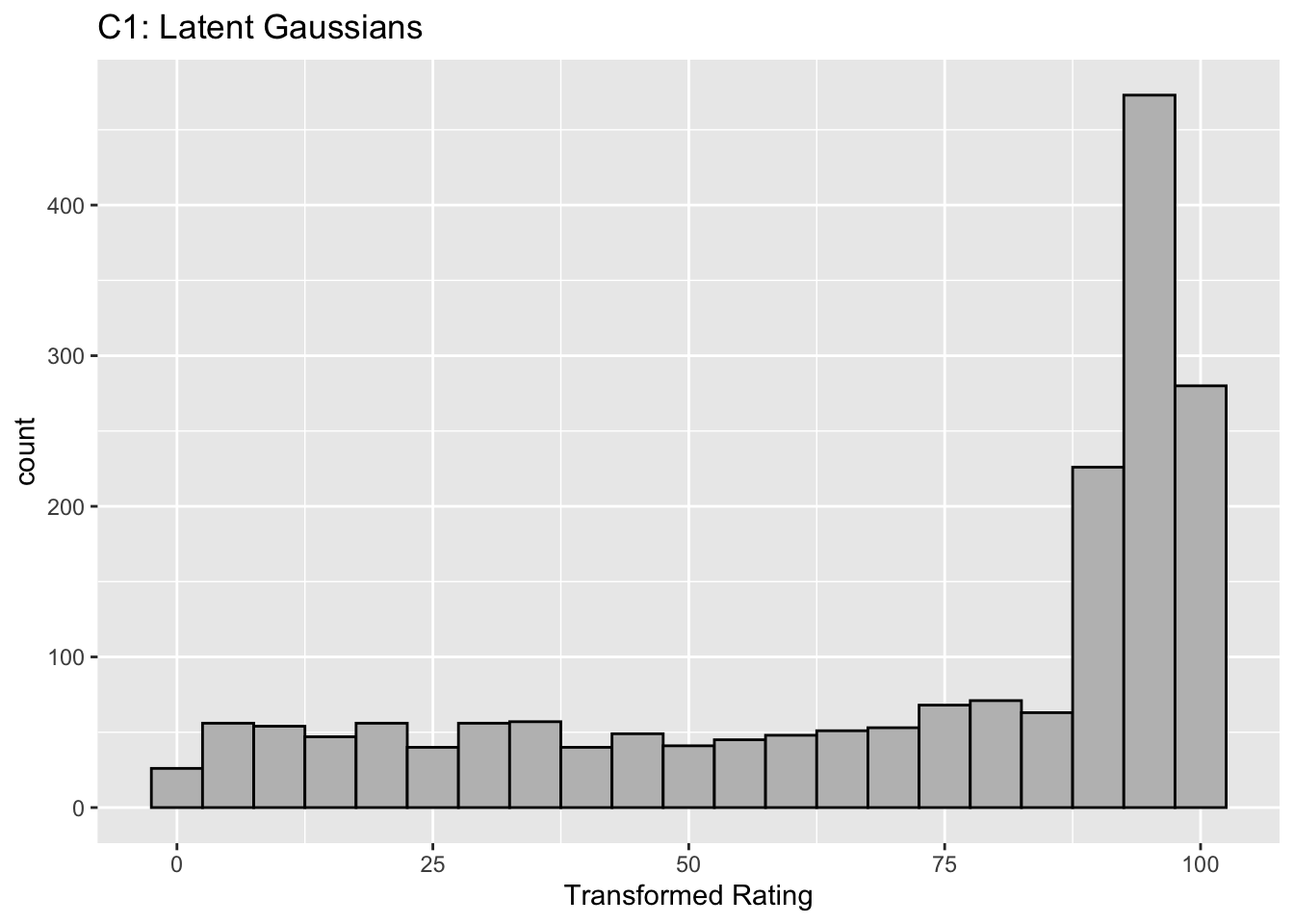

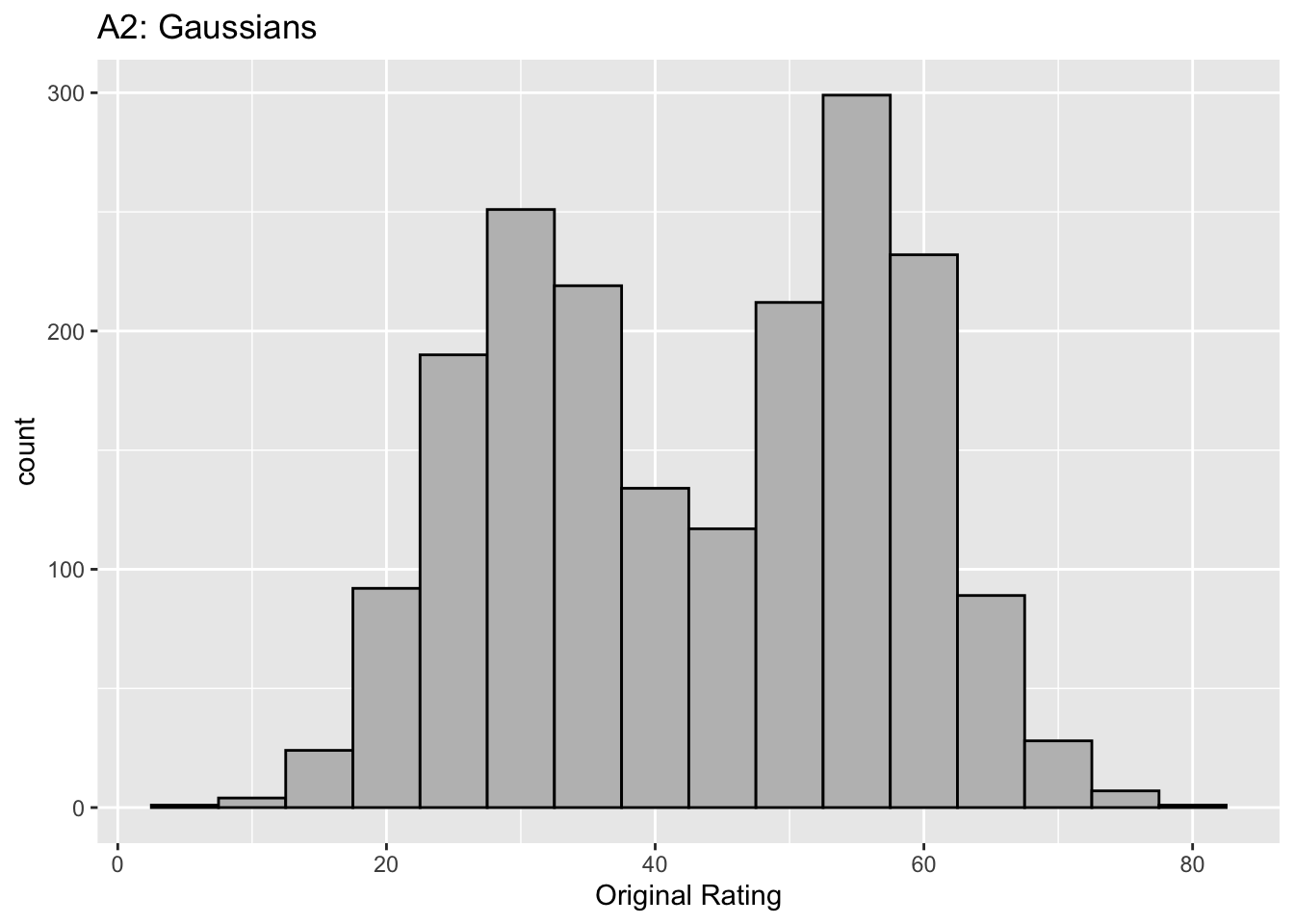

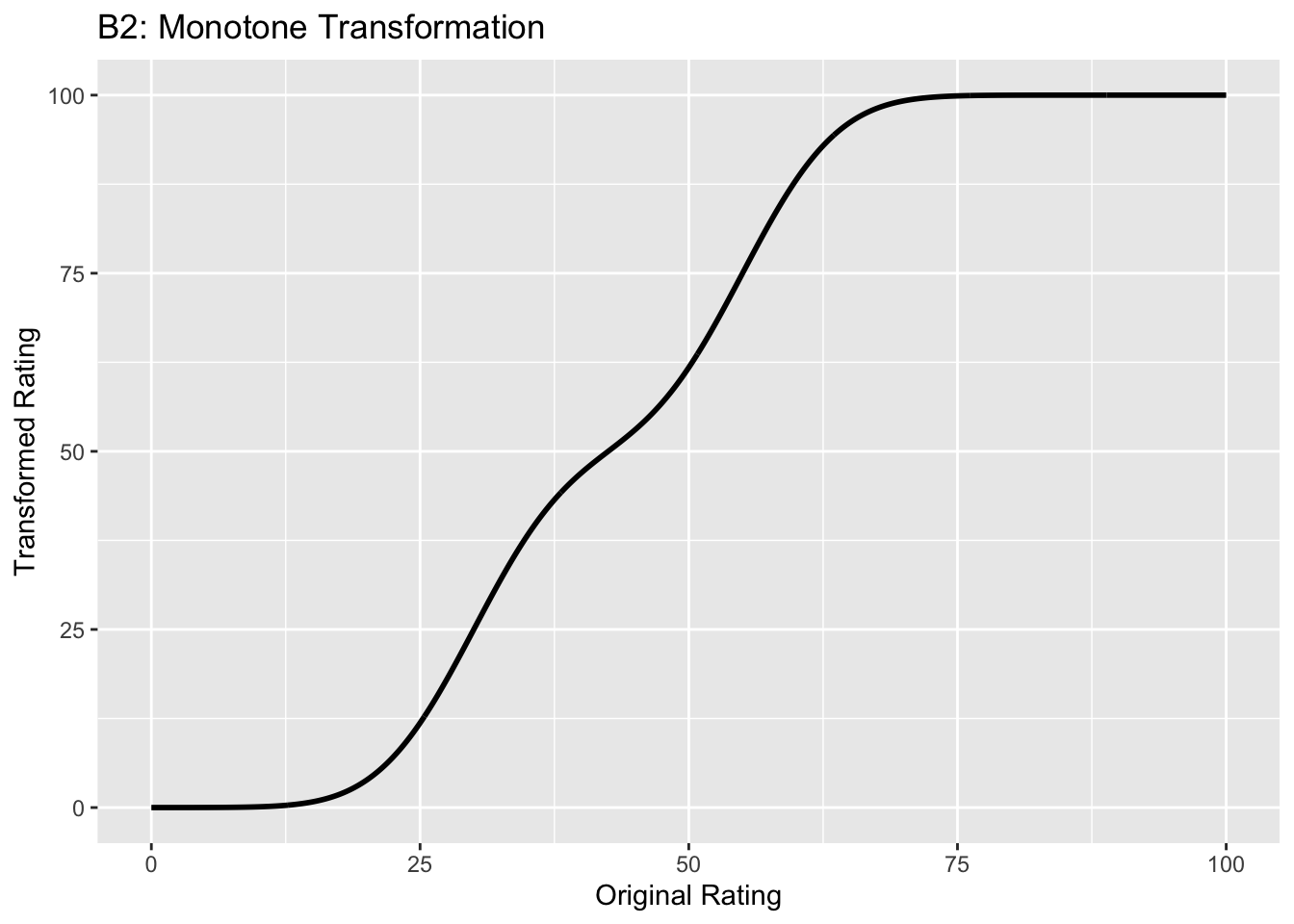

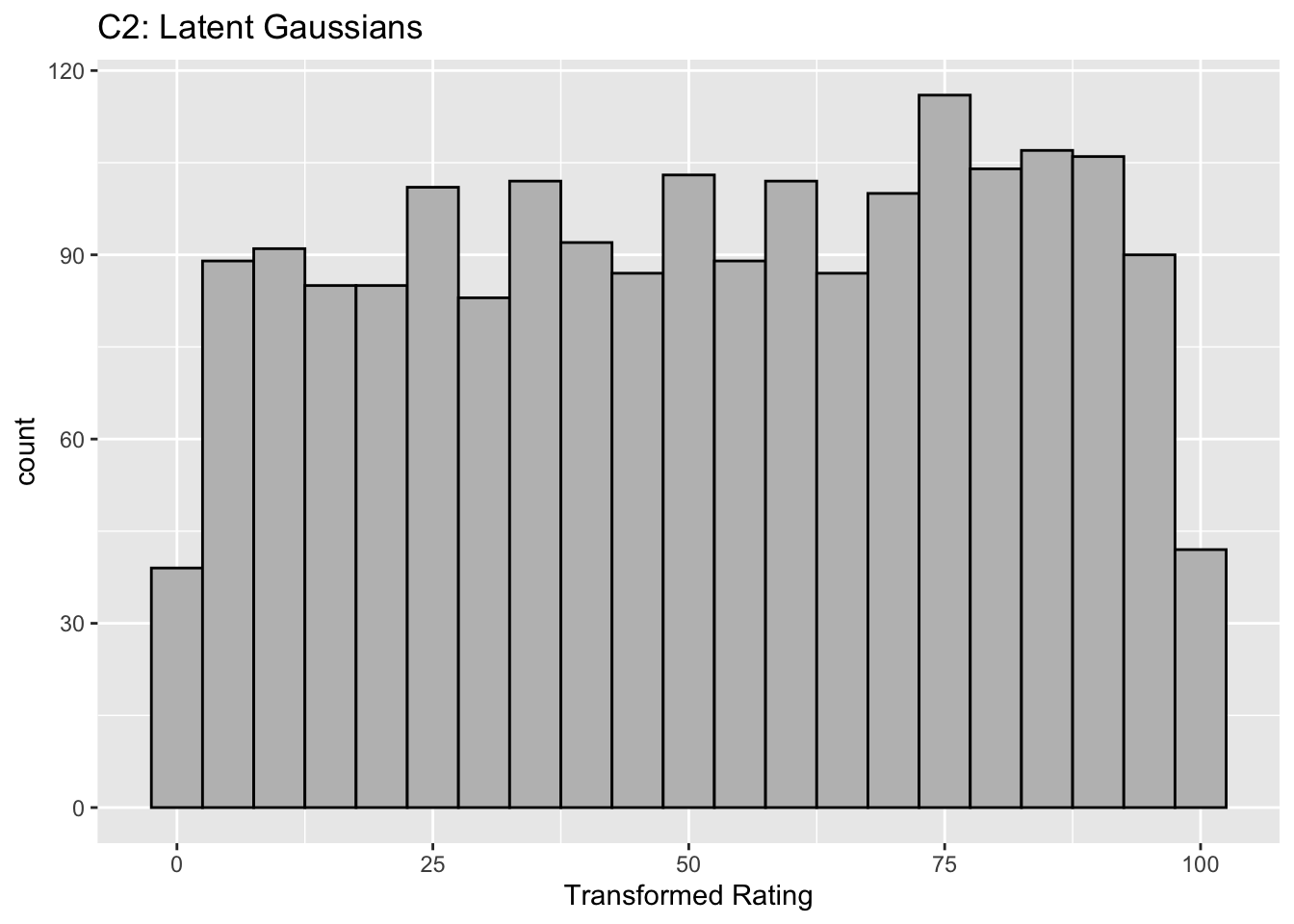

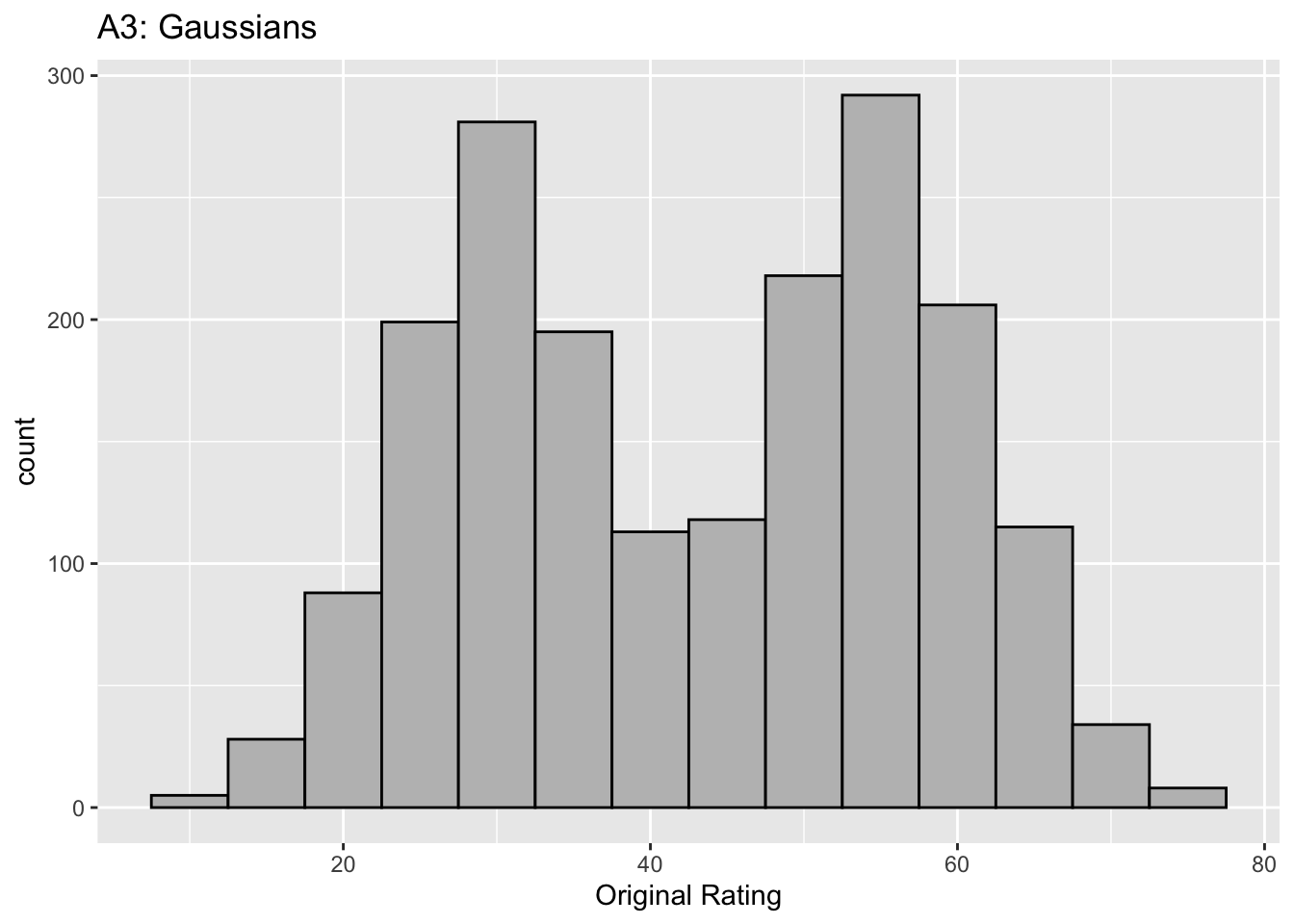

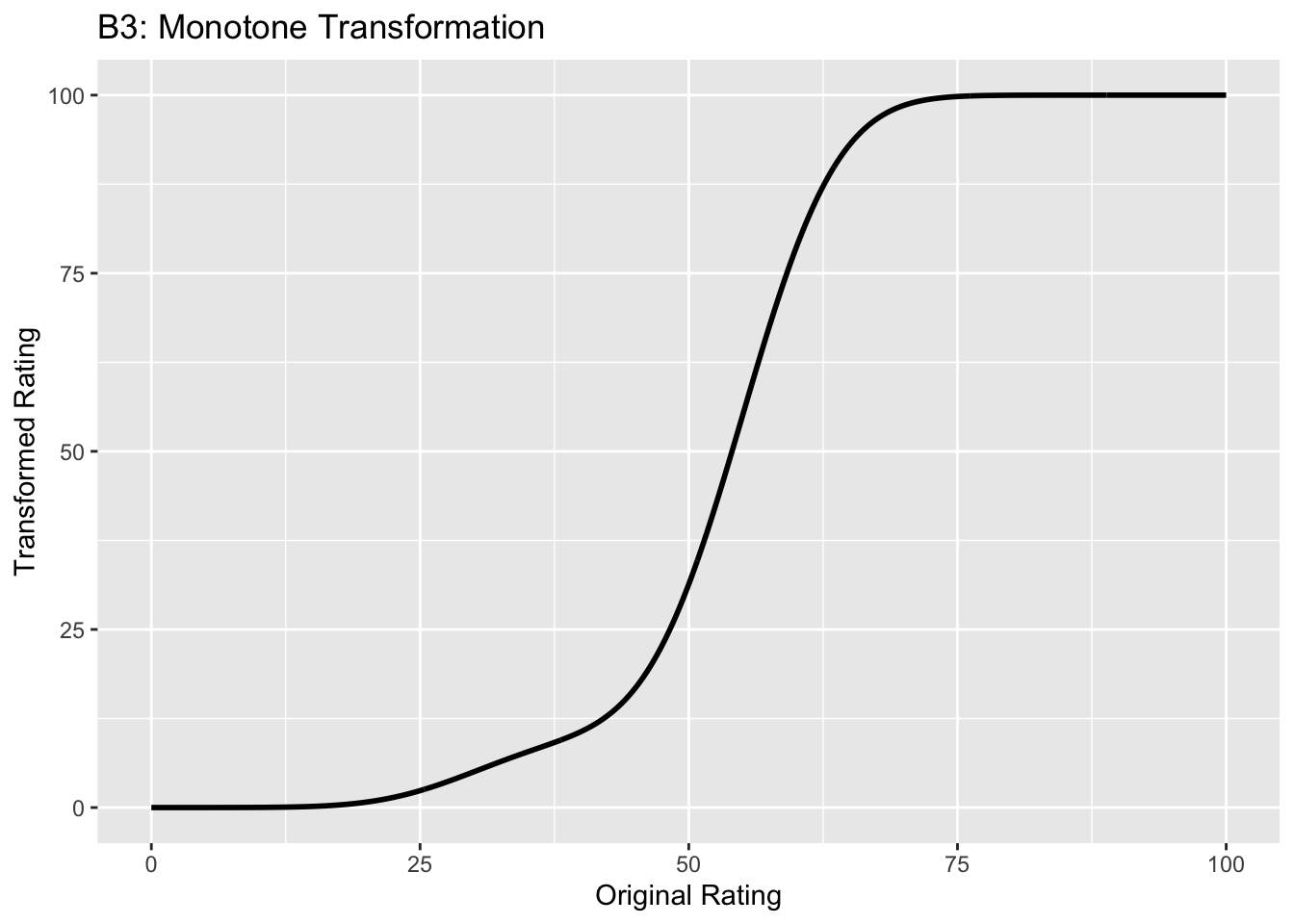

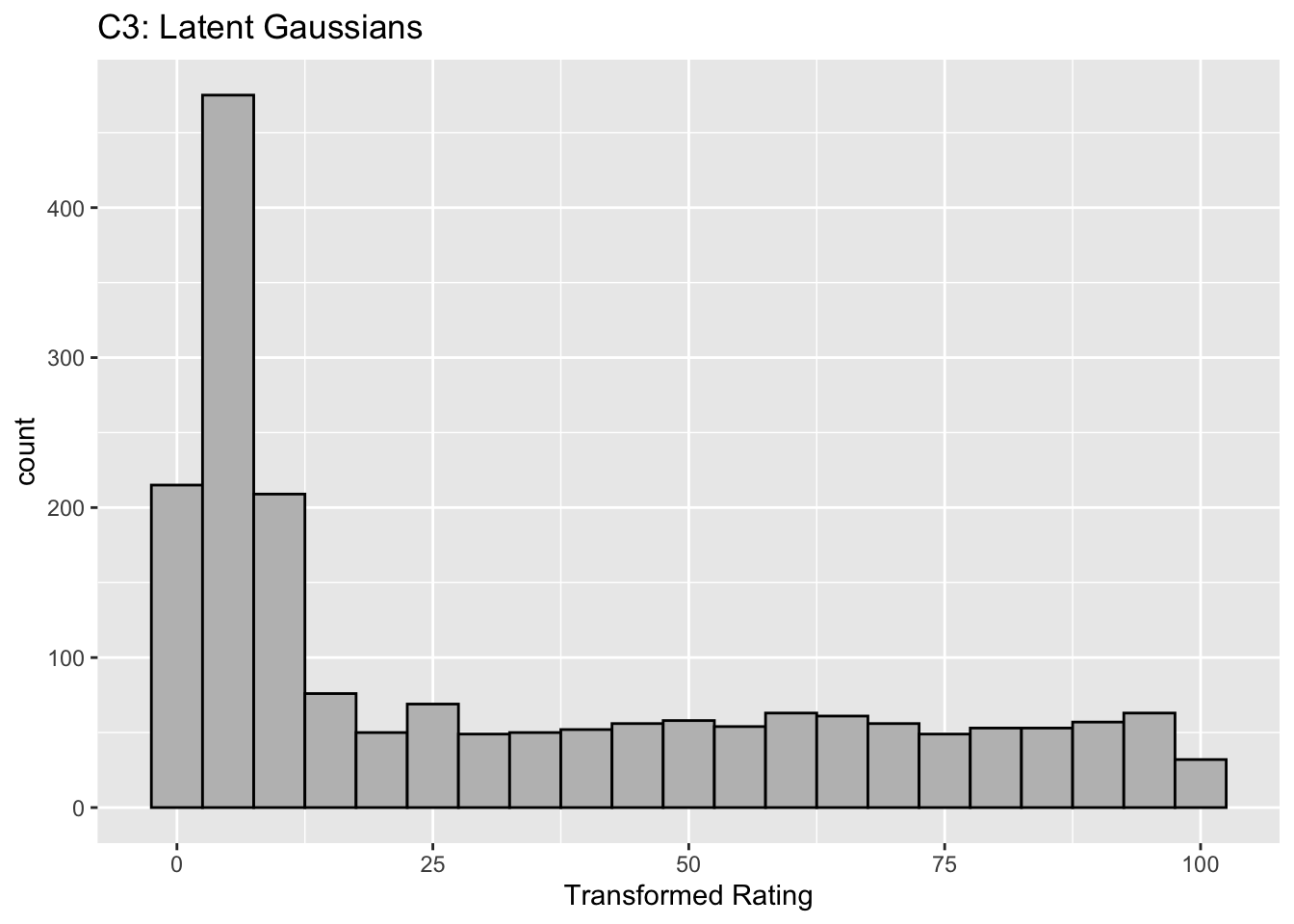

This figure illustrates the invariance of ROC analysis to arbitrary monotone transformations of the ratings.

Each row contains 3 plots: labeled 1, 2 and 3. Each column contains 3 plots labeled A, B and C. So, for example, plot C2 refers to the second row and third column. Each of the latent Gaussian plots C1, C2 and C3 appears to be not binormal. However, using the monotone transformations shown (B1, B2 and B3) they can be transformed to the binormal model histograms A1, A2 and A3.

Plot A1 shows the histogram of simulated ratings from a binormal model. Two peaks, one at 30 and the other at 55 are evident (by design, all ratings in this figure are in the range 0 to 100). Plot B1 shows the monotone transformation. Plot C1 shows the histogram of the transformed rating. The choice of \(f\) leads to a transformed rating histogram that is peaked near the high end of the rating scale. For A1 and C1 the corresponding AUCs are identical.

Plot A2 is for a different seed value, plot B2 is the transformation and now the transformed histogram is almost flat, plot C2. For plots A2 and C2 the corresponding AUCs are identical.

Plot A3 is for a different seed value, B3 is the transformation and the transformed histogram C3 is peaked near the low end of the transformed rating scale. For plots A3 and (C3) the corresponding AUCs are identical.

Visual examination of the shape of the histograms of ratings, or standard tests for normality, yield little, if any, insight into whether the underlying binormal model assumptions are being violated.

6.7 Az and d-prime measures

The (full) area under the ROC, denoted \(A_z\), is derived in (M. L. Thompson and Zucchini 1989):

\[\begin{equation} \left. \begin{aligned} A_z=&\Phi\left ( \frac{a}{\sqrt{1+b^2}} \right )\\ =&\Phi\left ( \frac{\mu}{\sqrt{1+\sigma^2}} \right ) \end{aligned} \right\} \tag{6.19} \end{equation}\]

The binormal fitted AUC increases as \(a\) increases or as \(b\) decreases. Equivalently, it increases as \(\mu\) increases or as \(\sigma\) decreases.

The reason for the name \(A_z\) is that historically (prior to maximum likelihood estimation) this quantity was estimated by converting the probabilities FPF and TPF to z-deviates (see TBA), which of-course assumes normal distributions. The z-subscript is meant to emphasize that this is a binormal model derived estimate.

The \(d'\) parameter is defined as the separation of two unit-variance normal distributions yielding the same AUC as that predicted by the \((a,b)\) parameter binormal model. It is defined by:

\[\begin{equation} d'=\sqrt{2}\Phi^{-1}\left ( A_z \right ) \tag{6.20} \end{equation}\]

The d’ index can be regarded as a perceptual signal-to-noise-ratio.

6.8 Fitting the binormal model

(Dorfman and Alf Jr 1969) were the first to fit ratings data to the binormal model. The details of the procedure are in Appendix 6.12. While historically very important in showing how statistically valid quantitative analysis is possible using ROC ratings data, the fitting procedure suffers from what are termed “degeneracy issues” and “fitting artifacts” discussed in Appendix 6.14. Degeneracy is when the fitting procedure yields unreasonable parameter values. Fitting artifacts occur when the fitted curve predicts worse than chance level performance in some region of the fitted ROC curve. Because of these issues usage of this method is now discouraged as it has largely been supplanted by other software such as the CBM fitting method, the proper ROC fitting method implemented in PROPROC and the RSM (radiological search model) based fitting method. These are discussed in later chapters.

6.9 Partial AUC measures

Two partial AUC measures have been defined. The idea is to have an AUC-like measure that emphasizes some region of the ROC curve, one that is argued to be clinically more significant, instead of \(A_z\) which characterizes the whole curve. In the following two definitions are considered, one that emphasizes the high specificity region of the ROC curve and one which emphasizes the high sensitivity region of the curve.

Shorthand: denote \(A \equiv A_z\), \(x \equiv \text{FPF}\) and \(y \equiv \text{TPF}\). The two partial AUC measures correspond to a partial integral along the x-axis starting from the origin (high specificity) and the other to a partial integral along the y-axis ending at (1,1) corresponding to high sensitivity. These are denoted by X and Y superscripts.

6.9.1 Measure emphasizing high specificity

The partial area under the ROC, \(A_c^{X}\), is defined as that extending from \(x = 0\) to \(x = c\), where \(0 \le c \le 1\) (in our notation \(c\) always means a cutoff on the x-axis of the ROC):

\[\begin{equation} \left. \begin{aligned} A_c^{X} &= \int_{x=0}^{x=c} y \, dx \\&= \int_{x=0}^{x=c} \Phi\left ( a + b \; \Phi^{-1} \left ( x \right ) \right ) \, dx \end{aligned} \right \} \tag{6.21} \end{equation}\]

The second form follows from Eqn. (6.13).

(M. L. Thompson and Zucchini 1989) derive a formula for the partial-area in terms of the binormal model parameters \(a\) and \(b\):

\[\begin{equation} A_c^{X} = \int_{z_2=-\infty}^{\Phi^{-1}\left ( c \right )} \int_{z_1=-\infty}^{\frac{a}{\sqrt{1+b^2}}} \phi\left ( z_1,z_2;\rho \right ) dz_1dz_2 \tag{6.22} \end{equation}\]

On the right hand side the integrand \(\phi\left ( z_1,z_2;\rho \right )\) is the standard bivariate normal density function with correlation coefficient \(\rho\). It is defined by:

\[\begin{equation} \left. \begin{aligned} \phi\left (z_1,z_2;\rho \right ) &= \frac{1}{2 \pi \sqrt{1-\rho^2}} \exp\left ( -\frac{z_1^2 -2\rho z_1 z_2 +z_2^2}{2\left ( 1-\rho^2 \right )} \right ) \\ \rho &= - \frac{b}{\sqrt{1+b^2}} \end{aligned} \right \} \tag{6.23} \end{equation}\]

As demonstrated later the integrals occurring on the right hand side of Eqn. (6.22) can be evaluated numerically.

As an area measure the partial AUC \(A_c^{X}\) has a simple geometric meaning. A physical meaning is as follows:

An ROC curve10 can be defined over the truncated dataset where all z-samples smaller than \(-\Phi^{-1}(c)\) are ignored. The maximum area of this curve is that defined by the rectangle with corners at \((0,0)\) and \((c,\text{TPF}\left ( c \right ))\): \(c\) is the abscissa at the upper limit of the integration interval along the x-axis and \(\text{TPF}\left ( c \right )\) is the corresponding ordinate: see Eqn. (6.13). Dividing \(A_c^{X}\) by \(\text{TPF}\left ( c \right ) \times c\) yields a normalized partial area measure, denoted \(A_c^{XN}\), where \(0 \le A_c^{XN} \le 1\). This is the classification accuracy between diseased and non-diseased cases measured over the truncated dataset. If \(a \ge 0\) it is constrained to (0.5, 1).

\[\begin{equation} A_c^{XN} = \frac{A_c^{X}}{\text{TPF}\left ( c \right ) \times c} \tag{6.24} \end{equation}\]

6.9.2 Measure emphasizing high sensitivity

Since the integral in Eqn. (6.21) is from \(x = 0\) to \(x = c\) this partial AUC measure emphasizes the high specificity region of the ROC curve (since \(x = 0\) corresponds to unit, i.e. highest, specificity).

An alternative partial AUC measure has been defined (Jiang, Metz, and Nishikawa 1996) that emphasizes the high sensitivity region of the ROC as follows:

\[\begin{equation} A_c^{Y} = \int_{y=\text{TPF}(c)}^{y=1} \left (1-x \right ) \, dy \tag{6.25} \end{equation}\]

\(A_c^{Y}\) is the (un-normalized) area below the ROC extending from \(y = \text{TPF}(c)\) to \(y = 1\). The superscript Y denotes that the integral is over part of the y-axis. The maximum value of this integral is the area of the rectangle defined by the corner points \((c,\text{TPF}(c))\) and \((1,1)\). Therefore the normalized area is defined by (our normalization differs from that in the cited reference):

\[\begin{equation} A_c^{YN} = \frac{A_c^{Y}}{\left (1 - \text{TPF}(c) \right ) \times \left (1-c \right )} \tag{6.26} \end{equation}\]

A physical meaning is as follows:

An ROC curve can be defined over the truncated dataset where all z-samples greater than \(-\Phi^{-1}(c)\) are ignored. \(A_c^{YN}\) is the classification accuracy between diseased and non-diseased cases measured over the truncated dataset. By definition the normalized area ranges between 0 and 1.

6.9.3 Numerical examples

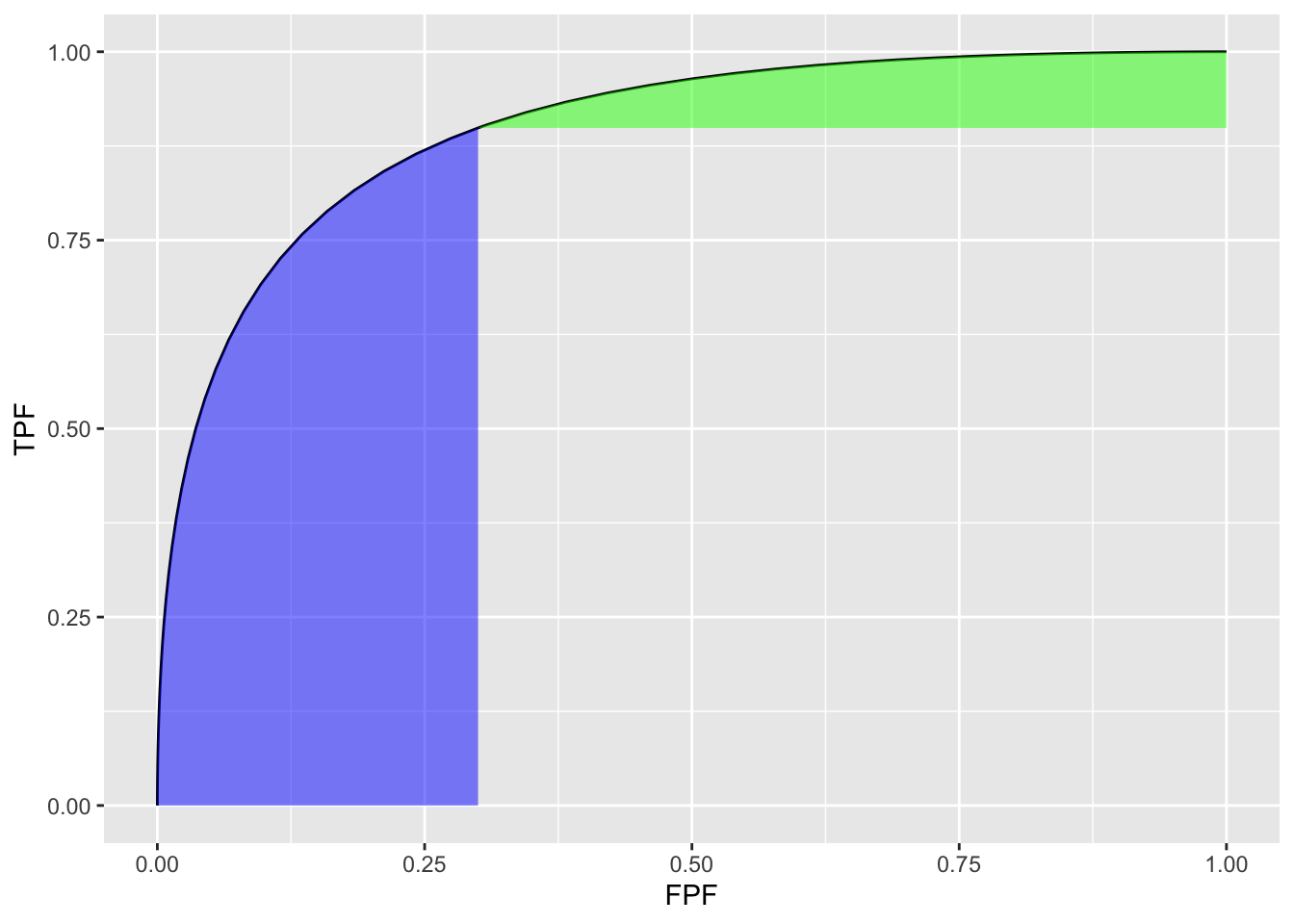

Fig. 6.3 shows the two un-normalized areas.

FIGURE 6.3: Un-normalized partial AUC measures: the blue shaded area is \(A_c^{X}\), the partial area below the ROC; the green shaded area is \(A_c^{Y}\) the partial area above the ROC. Parameters are \(a = 1.8\), \(b = 1\) and \(c = 0.3\).

The following code illustrates calculation of the partial-area measure using the function pmvnorm in the R package mvtnorm (Genz et al. 2023). The parameter values were: \(a = 1.8\), \(b = 1\) and \(c = 0.3\) (see lines 1-3 below).

a <- 1.8

b <- 1

fpf_c <- 0.3 # cannot use c as variable name

tpf_c <- pnorm(a + b * qnorm(fpf_c))

A_z <- pnorm(a/sqrt(1+b^2))

rho <- -b/sqrt(1+b^2)

Lower1 <- -Inf

Upper1 <- qnorm(fpf_c)

Lower2 <- -Inf

Upper2 <- a/sqrt(1+b^2)

sigma <- rbind(c(1, rho), c(rho, 1))

A_x <- as.numeric(pmvnorm(

c(Lower1, Lower2),

c(Upper1, Upper2),

sigma = sigma))

# divide by area of rectangle

A_xn <- A_x/fpf_c/tpf_cThe function pmvnorm is called at line 12. The un-normalized partial-area measure \(A_c^{X}\) = 0.216. The corresponding full AUC measure is \(A_z\) = 0.898. The normalized measure is \(A_c^{XN}\) = 0.802. This is the classification accuracy between non-diseased and diseased cases in the truncated dataset defined by ignoring cases with z-samples smaller than \(-\Phi^{-1}(c)\) = 0.524. This measure emphasizes specificity.

\(A_c^{Y}\) can be calculated using geometry. One subtracts \(A_c^{X}\) from \(A_z\) to get the area under the ROC to the right of \(\text{FPF}=c\). Next one subtracts from this quantity the area of the rectangle with base \((1 - c)\) and height \(\text{TPF}_c\). This yields the area if the green shaded region \(A_c^{Y}\). To normalize it one divides by the area of the rectangle defined by the corner points \((c,\text{TPF}_c)\) and (1,1).

The un-normalized partial-area measure \(A_c^{Y}\) = 0.053. The normalized measure is \(A_c^{YN}\) = 0.748. This is the classification accuracy between non-diseased and diseased cases in the truncated dataset defined by ignoring cases with z-samples greater than \(-\Phi^{-1}(c)\) = 0.524. This measure emphasizes sensitivity.

The variation with \(a\) of the two normalized AUC measures is shown next. The function normalizedAreas encapsulates the above calculations and is called for different values of \(a\).

a_arr = seq(0,8)

A_xn_arr <- array(dim = length(a_arr))

A_yn_arr <- array(dim = length(a_arr))

for (i in 1:length(a_arr)) {

x <- normalizedAreas(a_arr[i], 1, 0.1) # c = 0.1

A_xn_arr[i] <- x$A_xn

A_yn_arr[i] <- x$A_yn

}| \(a\) | \(A^{XN}_c\) | \(A^{YN}_c\) |

|---|---|---|

| 0 | 0.5000 | 0.5000 |

| 1 | 0.6260 | 0.7015 |

| 2 | 0.7785 | 0.8208 |

| 3 | 0.9144 | 0.8842 |

| 4 | 0.9822 | 0.9189 |

| 5 | 0.9981 | 0.9393 |

| 6 | 0.9999 | 0.9521 |

| 7 | 1.0000 | 0.9608 |

| 8 | 1.0000 | 0.9670 |

Table 6.1 shows \(A_c^{XN}\) and \(A_c^{YN}\) partial AUCs for different values of the \(a\) parameter for \(b = 1\) and \(c = 0.1\). It demonstrates that the normalized areas are constrained between 0.5 and 1 (as long as \(a\) in non-negative). For numerical reasons (basically a zero-divided-by-zero condition) it is difficult to show that \(A_c^{YN}\) approaches 1 in the limit of very large a-parameter (since the green shaded area shrinks to zero).

6.10 Comments on partial AUC measures

There are several issues with the adoption of either partial AUC measure.

Since a partial area measure corresponds to classification accuracy measured over a truncated dataset a fundamental correspondence between \(A_z\) and classification accuracy measured over the entire dataset is lost. A basic statistical principle of the desirability of an estimate valid for the entire population is being violated.

The choice of the truncation cutoff is arbitrary and subject to bias on the part of the investigator. This is similar to the type of bias that is inherent in a single point (sensitivity-specificity) based approach to analysis: this was the very reason for adoption of a measure such as \(A_z\) that averages over the whole curve, as argued so eloquently in (C. E. Metz 1978).

Then there is the issue of possible loss of statistical power. If \(A_z\) is estimated from the whole dataset and either Eqn. (6.24) or Eqn. (6.26) is used to estimate partial AUC, then one expects no loss in statistical power, as these equations represent noiseless mathematical transformations using the \((a,b)\) parameters estimated over the entire dataset. However, if an empirical partial AUC measure is used there will surely be loss of statistical power resulting from ignoring some of the data. Due to degeneracy issues usage of the empirical partial AUC is often unavoidable. This is because performing significance testing requires that the dataset be re-sampled many times and the parametric fit may not work every time.

The second point is illustrated by the study reported in (Jiang, Metz, and Nishikawa 1996). The ROC curves of a developmental-stage CAD system and that of radiologists cross each other: at high specificity the radiologists were better but the reverse was true at high sensitivity. By choosing the latter region the authors demonstrated statistically significant superiority of CAD over radiologists. Analysis using \(A_z\) failed to reach statistical significance.

Two very large clinical studies Fenton et al. (2011) using 222,135 and 684,956 women, respectively, showed that a commercial CAD can actually have a detrimental effect on patient outcome(Philpotts 2009). A more recent study has confirmed the negative view of the efficacy of CAD(Lehman et al. 2015) and there has even been a call for ending Medicare reimbursement for CAD interpretations(Fenton 2015). I have not followed the field since ca. 2016 and it is likely that newer versions of CAD now being used in the clinic are better than those evaluated in the cited studies. But the point is that even using a ca. 1996 developmental-stage CAD the authors were able to claim, using a partial AUC measure, that CAD outperformed radiologists, a result clearly not borne out by later large clinical studies while the \(A_z\) measure did not allow this conclusion.

6.11 Discussion

The binormal model is historically very important and the contribution by Dorfman and Alf (Dorfman and Alf Jr 1969) was seminal. Prior to their work, there was no statistically valid way of estimating AUC from observed ratings counts. Their work and a key paper (Lusted 1971) accelerated research using ROC methods. The number of publications using their algorithm, and the more modern versions developed by Metz and colleagues, is probably well in excess of 500. Because of its key role, I have endeavored to take out some of the mystery about how the binormal model parameters are estimated. In particular, a common misunderstanding that the binormal model assumptions are violated by real datasets, when in fact it is quite robust to apparent deviations from normality, is addressed (details are in Section 6.6).

A good understanding of this chapter should enable the reader to better understand alternative ROC models, discussed later.

To this day the binormal model is widely used to fit ROC datasets. In spite of its limitations, the binormal model has been very useful in bringing a level of quantification to this field that did not exist prior to 1969.

6.12 Appendix: Fitting an ROC curve

One aim of this chapter is to demystify statistical curve fitting. With the passing of Profs. Donald Dorfman, Charles Metz and Richard Swensson, parametric modeling is much neglected. Researchers have instead focused on non-parametric analysis using the empirical AUC defined in Chapter 5. A claimed advantage (overstated in my opinion, see Section 6.6) of non-parametric analysis is the absence of distributional assumptions. Non-parametric analysis yields no insight into what is limiting performance. Binormal model based curve fitting described in this chapter will allow the reader to appreciate a later chapter (see RSM fitting chapter in RJafrocFrocBook) that describes a more complex fitting method which yields important insights into the factors limiting human observer (or artificial intelligence algorithm) performance.

6.12.1 JAVA fitted ROC curve

This section, described in the physical book, has been abbreviated to a relevant website.

6.12.2 Simplistic straight line fit to the ROC curve

To be described next is a method for fitting data such as in Table 4.1 to the binormal model, i.e., determining the parameters \((a,b)\) and the thresholds \(\zeta_r , \quad r = 1, 2, ..., R-1\), to best fit, in some to-be-defined sense, the observed cell counts. The most common method uses an algorithm called maximum likelihood. But before getting to that, I describe the least-square method, which is conceptually simpler, but not really applicable, as will be explained shortly.

6.12.2.1 Least-squares estimation

By applying the function \(\Phi^{-1}\) to both sides of Eqn. (6.10), one gets (the “inverse” function cancels the “forward” function on the right hand side):

\[\begin{equation*} \Phi^{-1}\left ( \text{TPF} \right ) = a + b \Phi^{-1}\left ( FPF \right ) \end{equation*}\]

This suggests that a plot of \(y = \Phi^{-1}\left ( \text{TPF} \right )\) vs. \(x=\Phi^{-1}\left ( FPF \right )\) is expected to follow a straight line with slope \(b\) and intercept \(a\). Fitting a straight line to such data is generally performed by the method of least-squares, a capability present in most software packages and spreadsheets. Alternatively, one can simply visually draw the best straight line that fits the points, memorably referred to (Press et al. 2007) as “chi-by-eye”. This was the way parameters of the binormal model were estimated prior to Dorfman and Alf’s work (Dorfman and Alf Jr 1969). The least-squares method is a quantitative way of accomplishing the same aim. If \(\left ( x_t,y_t \right )\) are the data points, one constructs \(S\), the sum of the squared deviations of the observed ordinates from the predicted values (since \(R\) is the number of ratings bins, the summation runs over the \(R-1\) operating points):

\[\begin{equation*} S = \sum_{i=1}^{R-1}\left ( y_i - \left ( a + bx_i \right ) \right )^2 \end{equation*}\]

The idea is to minimize S with respect to the parameters \((a,b)\). One approach is to differentiate this with respect to \(a\) and \(b\) and equate each resulting derivate expression to zero. This yields two equations in two unknowns, which are solved for \(a\) and \(b\). If the reader has never done this before, one should go through these steps at least once, but it would be smarter in future to use software that does all this. In R the least-squares fitting function is lm(y~x), which in its simplest form fits a linear model lm(y~x) using the method of least-squares (in case you are wondering lm stands for linear model, a whole branch of statistics in itself; in this example one is using its simplest capability).

# ML estimates of a and b (from Eng JAVA program)

# a <- 1.3204; b <- 0.6075

# # these are not used in program; just here for comparison

FPF <- c(0.017, 0.050, 0.183, 0.5)

# this is from Table 6.11, last two rows

TPF <- c(0.440, 0.680, 0.780, 0.900)

# ...do...

PhiInvFPF <- qnorm(FPF)

# apply the PHI_INV function

PhiInvTPF <- qnorm(TPF)

# ... do ...

fit <- lm(PhiInvTPF~PhiInvFPF)

print(fit)

#>

#> Call:

#> lm(formula = PhiInvTPF ~ PhiInvFPF)

#>

#> Coefficients:

#> (Intercept) PhiInvFPF

#> 1.328844 0.630746

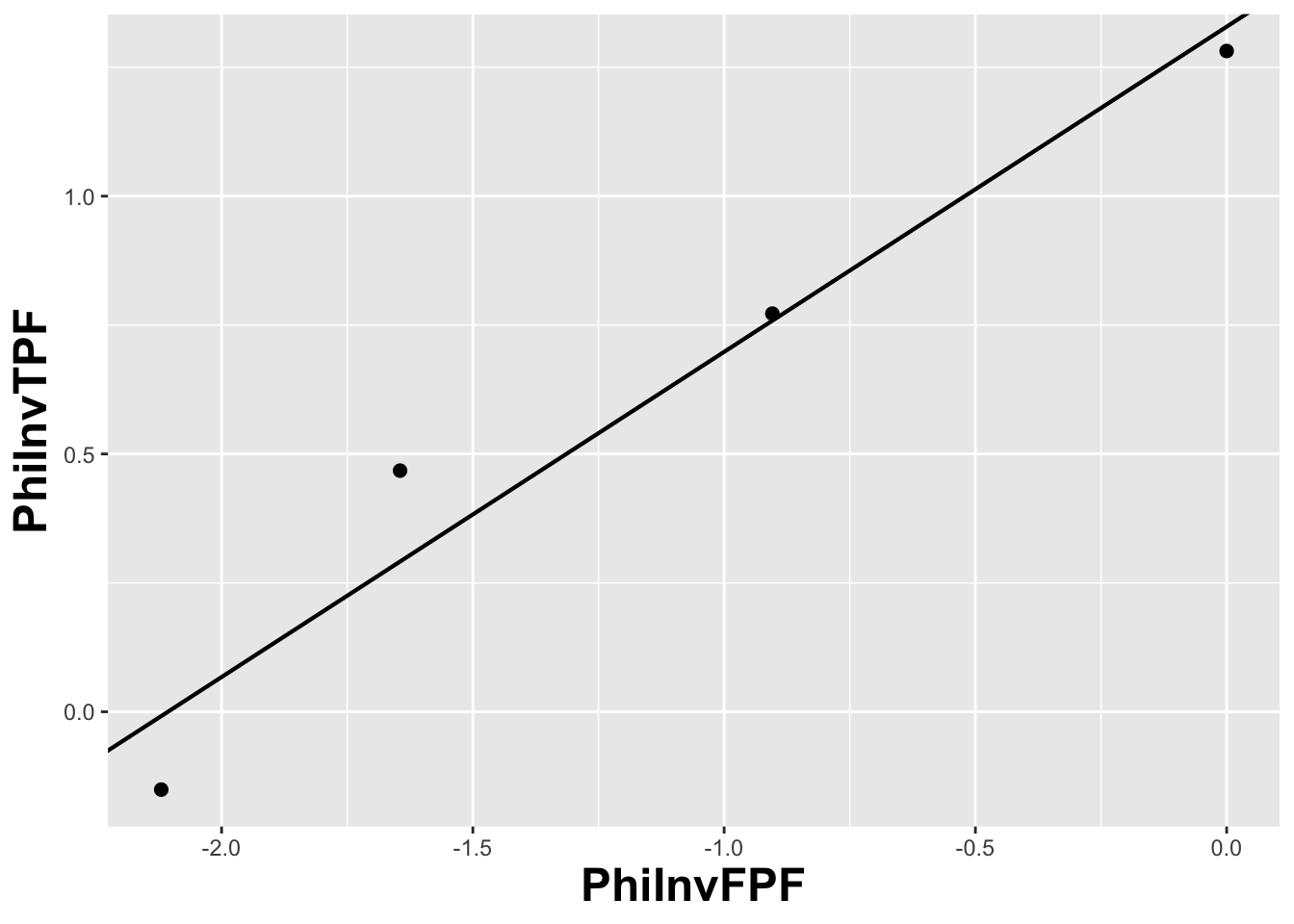

FIGURE 6.4: The straight line fit method of estimating parameters of the fitting model.

Fig. 6.4 shows operating points from Table 4.1, transformed by the \(\Phi^{-1}\) function; the slope of the line is the least-squares estimate of the \(b\) parameter and the intercept is the corresponding \(a\) parameter of the binormal model.

The last line contains the least squares estimated values, \(a\) = 1.3288 and \(b\) = 0.6307. The corresponding maximum likelihood estimates of these parameters, as yielded by the Eng web code, see Appendix, are listed in line 4 of the main program: \(a\) = 1.3204 and \(b\) = 0.6075. The estimates appear to be close, particularly the estimate of \(a\) , but there are a few things wrong with the least-squares approach. First, the method of least squares assumes that the data points are independent. Because of the manner in which they are constructed, namely by cumulating points, the independence assumption is not valid for ROC operating points. Cumulating the 4 and 5 responses constrains the resulting operating point to be above and to the right of the point obtained by cumulating the 5 responses only, so the data points are definitely not independent. Similarly, cumulating the 3, 4 and 5 responses constrains the resulting operating point to be above and to the right of the point obtained by cumulating the 4 and 5 responses, and so on. The second problem is the linear least-squares method assumes there is no error in measuring x; the only source of error that is accounted for is in the y-coordinate. In fact, both coordinates of an ROC operating point are subject to sampling error. Third, disregard of error in the x-direction is further implicit in the estimates of the thresholds, which according to Eqn. (6.2.19), is given by:

\[\begin{equation*} \zeta_r = - \Phi^{-1}\left ( FPF_r \right ) \end{equation*}\]

These are “rigid” estimates that assume no error in the FPF values. As was shown in Chapter 2, 95% confidence intervals apply to these estimates.

A historical note: prior to computers and easy access to statistical functions the analyst had to use a special plotting paper, termed “double probability paper”, that converted probabilities into x and y distances using the inverse function.

6.12.3 Maximum likelihood estimation (MLE)

The approach taken by Dorfman and Alf was to maximize the likelihood function instead of S. The likelihood function is the probability of the observed data given a set of parameter values, i.e.,

\[\begin{equation*} \text {L} \equiv P\left ( data \mid \text {parameters} \right ) \end{equation*}\]

Generally “data” is suppressed, so likelihood is a function of the parameters; but “data” is always implicit. With reference to Fig. 6.1, the probability of a non-diseased case yielding a count in the 2nd bin equals the area under the curve labeled “Noise” bounded by the vertical lines at \(\zeta_1\) and \(\zeta_2\). In general, the probability of a non-diseased case yielding a count in the \(r^\text{th}\) bin equals the area under the curve labeled “Noise” bounded by the vertical lines at \(\zeta_{r-1}\) and \(\zeta_r\). Since the area to the left of a threshold is the CDF corresponding to that threshold, the required probability is \(\Phi\left ( \zeta_r \right ) - \Phi\left ( \zeta_{r-1} \right )\); we are simply subtracting two expressions for specificity, Eqn. (6.2.5).

\[\begin{equation*} \text {count in non-diseased bin } r = \Phi\left ( \zeta_r \right ) - \Phi\left ( \zeta_{r-1} \right ) \end{equation*}\]

Similarly, the probability of a diseased case yielding a count in the rth bin equals the area under the curve labeled “Signal” bounded by the vertical lines at \(\zeta_{r-1}\) and \(\zeta_r\). The area under the diseased distribution to the left of threshold \(\zeta_r\) is the \(1 - \text{TPF}\) at that threshold:

\[\begin{equation*} 1 - \Phi\left ( \frac{\mu-\zeta_r}{\sigma} \right ) = \Phi\left ( \frac{\zeta_r - \mu}{\sigma} \right ) \end{equation*}\]

The area between the two thresholds is:

\[\begin{align*} P\left ( \text{count in diseased bin }r \right ) &= \Phi\left ( \frac{\zeta_r - \mu}{\sigma} \right ) - \Phi\left ( \frac{\zeta_{r-1} - \mu}{\sigma} \right ) \\ &= \Phi\left ( b\zeta_r-a \right ) - \Phi\left ( b\zeta_{r-1}-a \right ) \end{align*}\]

Let \(K_{1r}\) denote the number of non-diseased cases in the rth bin, and \(K_{2r}\) denotes the number of diseased cases in the rth bin. Consider the number of counts \(K_{1r}\) in non-diseased case bin \(r\). Since the probability of each count is \(\Phi\left ( \zeta_{r+1} \right ) - \Phi\left ( \zeta_r \right )\), the probability of the observed number of counts, assuming the counts are independent, is \({\left(\Phi\left ( \zeta_{r+1} \right ) - \Phi\left ( \zeta_r \right ) \right )}^{K_{1r}}\). Similarly, the probability of observing counts in diseased case bin \(r\) is \({\left (\Phi\left ( b\zeta_{r+1}-a \right ) - \Phi\left ( b\zeta_r-a \right ) \right )}^{K_{2r}}\), subject to the same independence assumption. The probability of simultaneously observing \(K_{1r}\) counts in non-diseased case bin r and \(K_{2r}\) counts in diseased case bin \(r\) is the product of these individual probabilities (again, an independence assumption is being used):

\[\begin{equation*} \left (\Phi\left ( \zeta_{r+1} \right ) - \Phi\left ( \zeta_r \right ) \right )^{K_{1r}} \left (\Phi\left ( b\zeta_{r+1}-a \right ) - \Phi\left ( b\zeta_r-a \right ) \right )^{K_{2r}} \end{equation*}\]

Similar expressions apply for all integer values of \(r\) ranging from \(1,2,...,R\). Therefore the probability of observing the entire data set is the product of expressions like Eqn. (6.4.5), over all values of \(r\):

\[\begin{equation} \prod_{r=1}^{R}\left [\left (\Phi\left ( \zeta_{r+1} \right ) - \Phi\left ( \zeta_r \right ) \right )^{K_{1r}} \left (\Phi\left ( b\zeta_{r+1}-a \right ) - \Phi\left ( b\zeta_r-a \right ) \right )^{K_{2r}} \right ] \tag{6.27} \end{equation}\]

We are almost there. A specific combination of \(K_{11},K_{12},...,K_{1R}\) counts from \(K_1\) non-diseased cases and counts \(K_{21},K_{22},...,K_{2R}\) from \(K_2\) diseased cases can occur the following number of times (given by the multinomial factor shown below):

\[\begin{equation} \frac{K_1!}{\prod_{r=1}^{R}K_{1r}!}\frac{K_2!}{\prod_{r=1}^{R}K_{2r}!} \tag{6.28} \end{equation}\]

The likelihood function is the product of Eqn. (6.27) and Eqn. (6.28):

\[\begin{equation} \begin{split} L\left ( a,b,\overrightarrow{\zeta} \right ) &= \left (\frac{K_1!}{\prod_{r=1}^{R}K_{1r}!}\frac{K_2!}{\prod_{r=1}^{R}K_{2r}!} \right ) \times \\ &\quad\prod_{r=1}^{R}\left [\left (\Phi\left ( \zeta_{r+1} \right ) - \Phi\left ( \zeta_r \right ) \right )^{K_{1r}} \left (\Phi\left ( b\zeta_{r+1}-a \right ) - \Phi\left ( b\zeta_r-a \right ) \right )^{K_{2r}} \right ] \end{split} \tag{6.29} \end{equation}\]

The left hand side of Eqn. (6.29) shows explicitly the dependence of the likelihood function on the parameters of the model, namely \(a,b,\overrightarrow{\zeta}\), where the vector of thresholds \(\overrightarrow{\zeta}\) is a compact notation for the set of thresholds \(\zeta_1,\zeta_2,...,\zeta_R\), (note that since \(\zeta_0 = -\infty\), and \(\zeta_R = +\infty\), only \(R-1\) free threshold parameters are involved, and the total number of free parameters in the model is \(R+1\)). For example, for a 5-rating ROC study, the total number of free parameters is 6, i.e., \(a\), \(b\) and 4 thresholds \(\zeta_1,\zeta_2,\zeta_3,\zeta_4\).

Eqn. (6.29) is forbidding but here comes a simplification. The difference of probabilities such as \(\Phi\left ( \zeta_r \right )-\Phi\left ( \zeta_{r-1} \right )\) is guaranteed to be positive and less than one [the \(\Phi\) function is a probability, i.e., in the range 0 to 1, and since \(\zeta_r\) is greater than \(\zeta_{r-1}\), the difference is positive and less than one]. When the difference is raised to the power of \(K_{1r}\) (a non-negative integer) a very small number can result. Multiplication of all these small numbers may result in an even smaller number, which may be too small to be represented as a floating-point value, especially as the number of counts increases. To prevent this we resort to a trick. Instead of maximizing the likelihood function \(L\left ( a,b,\overrightarrow{\zeta} \right )\) we choose to maximize the logarithm of the likelihood function (the base of the logarithm is immaterial). The logarithm of the likelihood function is:

\[\begin{equation} LL\left ( a,b,\overrightarrow{\zeta} \right )=\log \left ( L\left ( a,b,\overrightarrow{\zeta} \right ) \right ) \tag{6.30} \end{equation}\]

Since the logarithm is a monotonically increasing function of its argument, maximizing the logarithm of the likelihood function is equivalent to maximizing the likelihood function. Taking the logarithm converts the product symbols in Eqn. (6.4.8) to summations, so instead of multiplying small numbers one is adding them, thereby avoiding underflow errors. Another simplification is that one can ignore the logarithm of the multinomial factor involving the factorials, because these do not depend on the parameters of the model. Putting all this together, we get the following expression for the logarithm of the likelihood function:

\[\begin{equation} \begin{split} LL\left ( a,b,\overrightarrow{\zeta} \right ) \propto& \sum_{r=1}^{R} K_{1r}\log \left ( \Phi\left ( \zeta_{r+1} \right ) - \Phi\left ( \zeta_r \right ) \right ) \\ &+ \sum_{r=1}^{R} K_{2r}\log \left ( \Phi\left (b \zeta_{r+1} - a \right ) - \Phi\left ( b \zeta_r - a \right ) \right ) \end{split} \tag{6.31} \end{equation}\]

The left hand side of Eqn. (6.31) is a function of the model parameters \(a,b,\overrightarrow{\zeta}\) and the observed data, the latter being the counts contained in the vectors \(\overrightarrow{K_1}\) and \(\overrightarrow{K_2}\), where the vector notation is used as a compact form for the counts \(K_{11},K_{12},...,K_{1R}\) and \(K_{21},K_{22},...,K_{2R}\), respectively. The right hand side of Eqn. (6.31) is monotonically related to the probability of observing the data given the model parameters \(a,b,\overrightarrow{\zeta}\). If the choice of model parameters is poor, then the probability of observing the data will be small and log likelihood will be small. With a better choice of model parameters the probability and log likelihood will increase. With optimal choice of model parameters the probability and log likelihood will be maximized, and the corresponding optimal values of the model parameters are called maximum likelihood estimates (MLEs). These are the estimates produced by the programs RSCORE and ROCFIT.

6.12.4 Code implementing MLE

# ML estimates of a and b (from Eng JAVA program)

# a <- 1.3204; b <- 0.6075

# these are not used in program; just stated here for comparison

K1t <- c(30, 19, 8, 2, 1)

K2t <- c(5, 6, 5, 12, 22)

# convert data table to an RJafroc dataset

dataset <- Df2RJafrocDataset(K1t, K2t, InputIsCountsTable = TRUE)

# fit the dataset to the binormal model

retFit <- FitBinormalRoc(dataset)

# display the results

print(data.frame(retFit[1:5]))

#> a b zetas AUC StdAUC

#> zetaFwd1 1.32045261 0.607492932 0.00768054684 0.870452157 0.0379042263

#> zetaFwd2 1.32045261 0.607492932 0.89627306766 0.870452157 0.0379042263

#> zetaFwd3 1.32045261 0.607492932 1.51564784964 0.870452157 0.0379042263

#> zetaFwd4 1.32045261 0.607492932 2.39672209849 0.870452157 0.0379042263

# display the plot

print(retFit$fittedPlot)

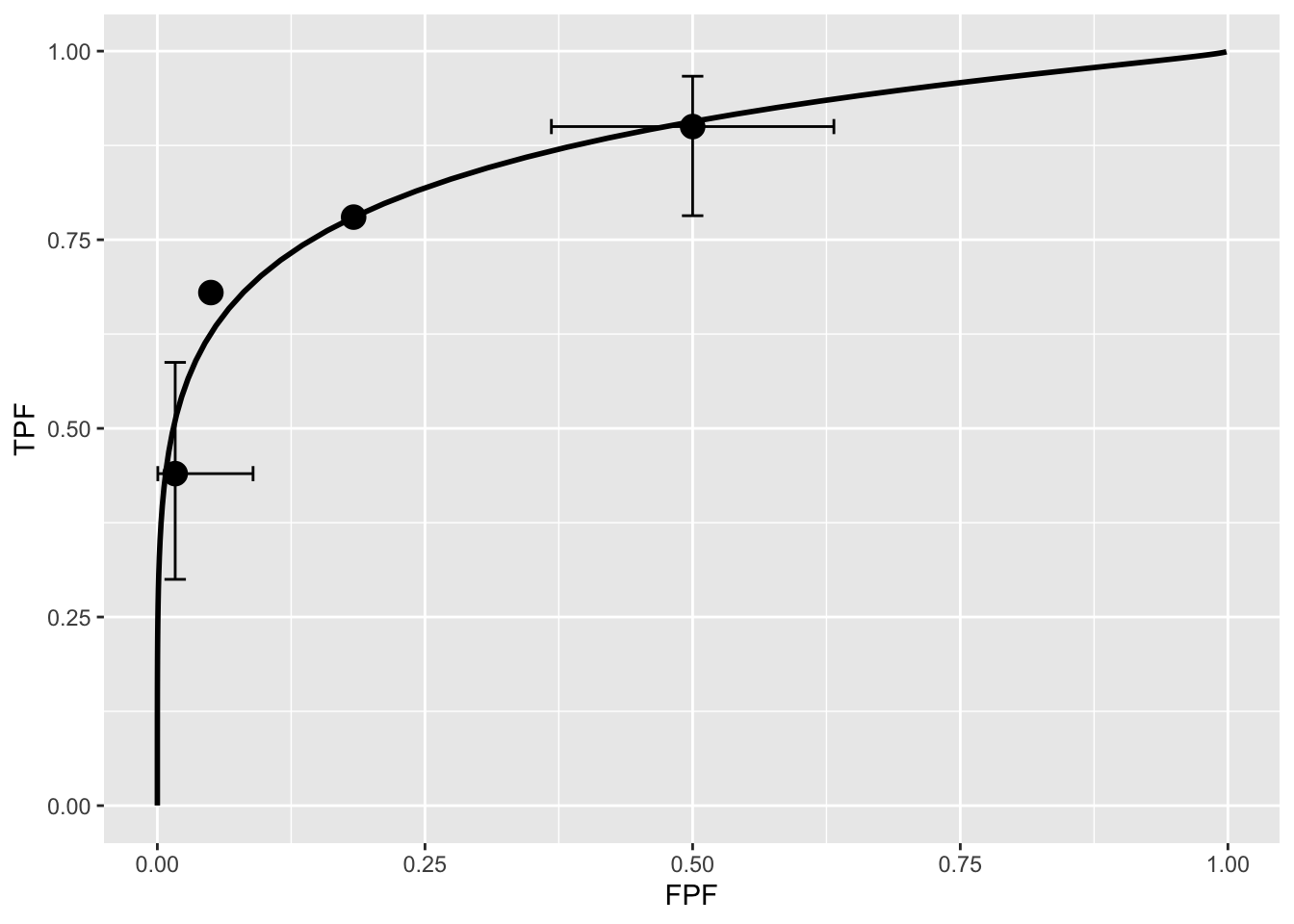

FIGURE 6.5: Operating points and fitted binormal ROC curve. The fitting values are \(a = 1.3205\) and \(b = 0.6075\). Confidence intervals are shown for the lowest and uppermost non-trivial points.

Note the usage of the R-package RJafroc (Dev Chakraborty and Zhai 2023). Specifically, the function FitBinormalRoc() is used. The ratings table is converted to an RJafroc dataset object, followed by application of the fitting function. The results, contained in retFit should be compared to those obtained from the website implementation of ROCFIT.

6.12.5 Validating the fit

The above ROC curve is a good visual fit to the observed operating points. Quantification of the validity of the fitting model is accomplished by calculating the Pearson goodness-of-fit test (Pearson 1900), also known as the chi-square test, which uses the statistic defined by (Larsen and Marx 2005):

\[\begin{equation} C^2=\sum_{t=1}^{2}\sum_{r=1}^{R}\frac{\left (K_{tr}-\left \langle K_{tr} \right \rangle \right )^2}{\left \langle K_{tr} \right \rangle}\\ K_{tr} \geq 5 \tag{6.32} \end{equation}\]

The expected values are given by:

\[\begin{equation} \begin{split} \left \langle K_{1r} \right \rangle &=K_1\left ( \Phi\left ( \zeta_{r+1} \right ) - \Phi\left ( \zeta_r \right ) \right ) \\ \left \langle K_{2r} \right \rangle &=K_2\left ( \Phi\left ( a\zeta_{r+1}-b \right ) - \Phi\left ( a\zeta_r - b\right ) \right ) \end{split} \tag{6.33} \end{equation}\]

These expressions should make sense: the difference between the two CDF functions is the probability of a count in the specified bin, and multiplication by the total number of relevant cases should yield the expected counts (a non-integer).

It can be shown that under the null hypothesis that the assumed probability distribution functions for the counts equals the true probability distributions, i.e., the model is valid, the statistic \(C^2\) is distributed as:

\[\begin{equation} C^2\sim \chi_{df}^{2} \tag{6.34} \end{equation}\]

Here \(C^2\sim \chi_{df}^{2}\) is the chi-square distribution with degrees of freedom df defined by:

\[\begin{equation} df=\left ( R-1 \right )+\left ( R-1 \right )-\left (2+ R-1 \right )=\left ( R-3 \right ) \tag{6.35} \end{equation}\]

The right hand side of the above equation has been written in an expansive form to illustrate the general rule: for \(R\) non-diseased cells in the ratings table, the degree of freedom is \(R-1\): this is because when all but one cells are specified, the last is determined, because they must sum to \(K_1\) . Similarly, the degree of freedom for the diseased cells is also \(R-1\). Last, we need to subtract the number of free parameters in the model, which is \((2+R-1)\), i.e., the \(a,b\) parameters and the \(R-1\) thresholds. It is evident that if \(R = 3\) then \(df = 0\). In this situation, there are only two non-trivial operating points and the straight-line fit shown will pass through both of them. With two basic parameters, fitting two points is trivial, and goodness of fit cannot be calculated.

Under the null hypothesis (i.e., model is valid) \(C^2\) is distributed as \(\chi_{df}^{2}\). Therefore, one computes the probability that this statistic is larger than the observed value, called the p-value. If this probability is very small, that means that the deviations of the observed values of the cell counts from the expected values are so large that it is unlikely that the model is correct. The degree of unlikeliness is quantified by the p-value. Poor fits lead to small p values.

At the 5% significance level, one concludes that the fit is not good if \(p < 0.05\). In practice one occasionally accepts smaller values of \(p\), \(p > 0.001\) before completely abandoning a model. It is known that adoption of a stricter criterion, e.g., \(p > 0.05\), can occasionally lead to rejection of a retrospectively valid model (Press et al. 2007).

6.13 Appendix: Improper ROCs

The binormal model has two parameters. The \(a\) parameter is the separation of the two distributions. The diseased case distribution has unit standard deviation. The non-diseased case distribution has standard deviation \(b\). Binormal model fits invariably lead to ROC curves that inappropriately cross the chance diagonal, i.e., it predicts a region of the curve where performance is worse than chance even for expert observers. Such curves are termed improper. This occurs whenever \(b \ne 1\).

The following code illustrates improper curves predicted by the binormal model.

aArray <- c(0.7, 0.7, 1.5, 2)

bArray <- c(0.5, 1.5, 0.5, 0.5)

chance_diag <- data.frame(x = c(0,1), y = c(0,1))

p <- PlotBinormalFit(aArray, bArray) +

scale_x_continuous(expand = c(0, 0)) +

scale_y_continuous(expand = c(0, 0)) +

theme(legend.position = c(0.85, 0.2))

p <- p + geom_line(data = chance_diag, aes(x = x, y = y), linetype="dotted")

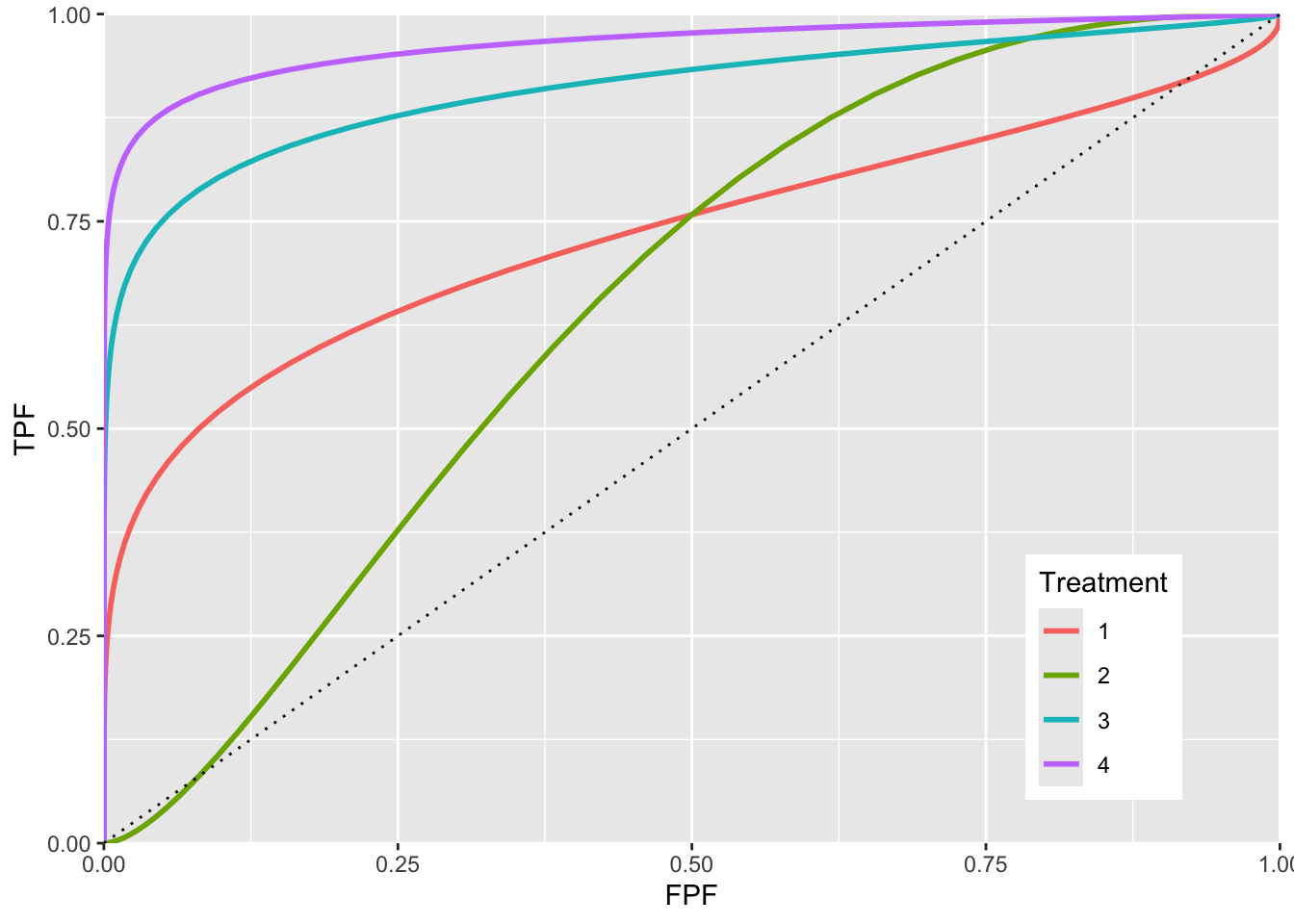

FIGURE 6.6: ROC curves for different parameters. The curve 1 corresponds to \(a = 0.7, b = 0.5\) and shows the clearest example of chance line crossing. Curve 2 corresponds to \(a = 0.7, b = 1.5\) and it shows chance line crossing near the origin. Curve 3 line corresponds to \(a = 1.5, b = 0.5\). Curve 4 corresponds to \(a = 2, b = 0.5\). All curves exhibit chance line crossings even though they may not be clearly evident.

The red plot (curve 1) is the clearest example of an improper ROC. The chance line crossing near the upper right corner, around (0.919,0.919) and the fact that the ROC curve must eventually reach (1, 1) implies that the curve must turn upwards as one approaches (1, 1) thereby displaying a “hook”. Whenever \(b \ne 1\) the hook is there regardless of whether it is easily visible or not. If \(b < 1\) the hook is near the upper right corner. If \(b > 1\) the hook is near the origin (see green line, curve 2, corresponding to \(a = 0.7, b = 1.5\)). With increasing \(a\) the hook is less prominent (see blue line corresponding to \(a = 1.5, b = 0.5\) and purple line corresponding to \(a = 2, b = 0.5\). But it is there.

6.13.1 Reason for improper ROCs

The reason for the “hook” becomes apparent upon examination of the pdfs.

FIGURE 6.7: Reason for improper ROC

In Figure 6.7 \(a = 0.7\) and \(b = 0.5\). Since \(b < 1\) the diseased pdf is broader and has a lower peak (since the integral under each distribution is unity) than the non-diseased pdf. Starting from the extreme right and then sliding an imaginary threshold to the left one sees that initially, just below \(z = 7\), the diseased distribution starts contributing while the non-diseased distribution is not contributing, causing the ROC to start with infinite slope near the origin (because TPF is increasing while FPF is not). Around \(z = 2.5\) the non-diseased distribution starts contributing, causing the ROC slope to decrease (since FPF is now increasing). Around \(z = -3\) almost all of the non-diseased distribution has contributed which means FPF is near unity, but since not all of the broader diseased distribution has contributed TPF is less than unity. Here is a region where \(TPF < FPF\), meaning that the operating point is below the chance diagonal. As the threshold is lowered further, TPF continues to increase, as the rest of the diseased distribution contributes while FPF stays almost constant at unity. In this region, the ROC curve is approaching the upper right corner with almost infinite slope (because TPF is increasing but FPF is not).

Usually the hook is not readily visible. For example, in Fig. 6.5 one would have to “zoom-in” on the upper right corner to see. An example from a clinical study is Fig. 1 in (Pisano et al. 2005) where each of the film modality ROC curves show the “hook”. Ways of fitting proper ROC curves are described in Chapter 7.

One reason for for \(b < 1\), namely location uncertainty, follows from the Radiological Search Model (RSM) presented in RJafrocFrocBook. If the location of the lesion is unknown to the observer, then z-samples from diseased cases are of two types: samples from lesion localizations centered at \(\mu\) or samples from non-lesion localizations centered at 0. The resulting mixture-distribution will have larger variance than samples from non-diseased regions centered at 0 occurring on non-diseased cases. The mixing need not be restricted to location uncertainty. Even is location is known, if the lesions are non-homogenous (e.g., they contain a range of contrasts or sizes) then a similar mixture-distribution induced broadening is expected. The contaminated binormal model (CBM) – see Chapter 7 – also predicts that the diseased distribution is wider than the non-diseased one.

6.14 Degenerate datasets

The unphysical nature of the “hook” is not the only reason for seeking alternate ROC models. The binormal model is also highly susceptible to degeneracy problems.

Metz defined binormal degenerate data sets as those that result in exact-fit binormal ROC curves of inappropriate shape consisting of a series of horizontal and/or vertical line segments in which the ROC “curve” crosses the chance line.

6.14.1 Understanding degenerate datasets

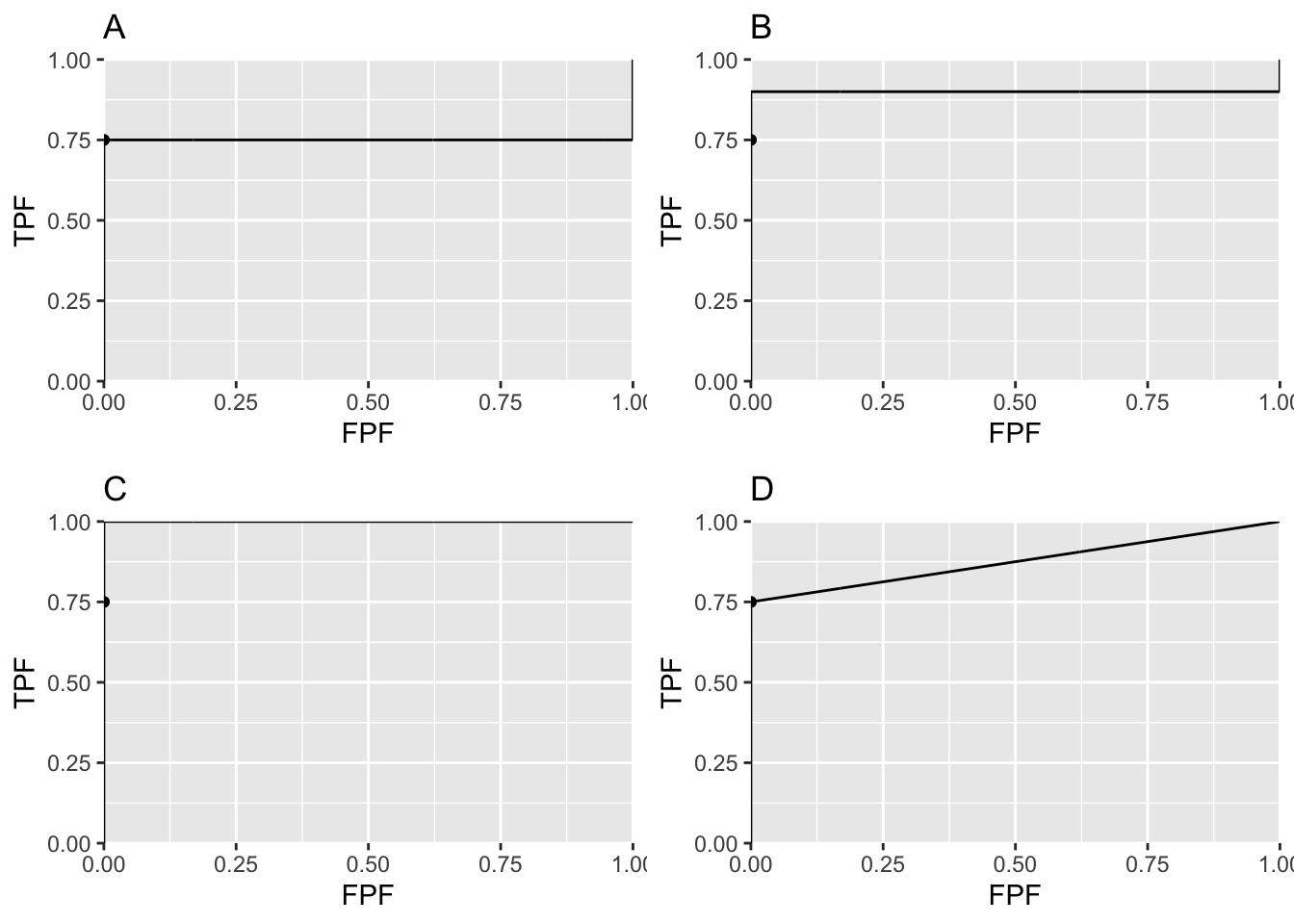

To understand this, consider that the non-diseased distribution pdf is a Dirac delta function centered at zero (this function integrates to unity) and the unit variance diseased distribution is centered at 0.6744898. In other words this model is characterized by \(a = 0.6744898\) and \(b = 0\). As the threshold \(\zeta\) is moved leftward starting from the far right TPF will increase but \(\text{FPF}=0\) until \(\zeta\) reaches zero. Just before reaching this value the coordinates of the ROC operating point are (0, 0.75). The 0.75 is due to the fact that \(z = 0\) is -0.6744898 units relative to the center of the diseased distribution, so the area under the diseased distribution to the left of \(z = 0\) is pnorm(-0.6744898), i.e., 0.250. Since pnorm is the probability to the left of the threshold, TPF is its complement, namely 1-pnorm(-0.6744898), i.e., 0.750000016.

As the threshold crosses the delta function FPF jumps from 0 to 1 but TPF stays constant. The operating point has jumped from (0, 0.75) to (1, 0.75). When the threshold is reduced further, the operating point moves up vertically along the right side of the ROC plot, until the threshold is so small that virtually all of diseased distribution exceeds it and the operating point reaches (1, 1). The ROC curve is illustrated in Fig. 6.8 panel A, which is an extreme example of an ROC curve with a “hook”. Since the fit passes through the sole operating point provided by the observer, i.e., (0,0.75), it is an exact fit to the operating point.

6.14.2 The exact fit is not unique

Given only one observed operating point (0, 0.75) the preceding fit is not unique. If the diseased distribution is shifted appropriately to the right, by varying \(a\), see below, then the ROC curve will be the vertical line segment from the origin to (0, 0.9) followed by a horizontal line segment to (1, 0.9) and a vertical line segment to (1, 1), see Fig. 6.8 panel B. If the diseased distribution is shifted well to the right, i.e., \(a\) is very large, then the ROC curve will be the vertical line segment from the origin to (0,1) followed by the horizontal line segment to (1, 1) as in panel C.

FIGURE 6.8: Panels A, B and C illustrate that the exact fit is not unique. Panel D illustrates a reasonable unique fit to the data.

Panels A, B and C in Fig. 6.8 represent exact fits to the observed operating point with \(b = 0\) and different values of \(a\). None of them is reasonable. Panel D is discussed below.

6.14.3 On instructing an observer to “spread their ratings”

Degeneracy occurs if the observer does not provide any interior operating points. Perhaps one has a non-cooperating observer who is not heeding the instructions to spread the ratings, use all the bins. The observer could in fact be cooperating fully and still be unable to provide any interior data points.

Consider 100 diseased cases consisting of 75 easy cases and 25 difficult cases and 100 non-diseased cases of varying degrees of difficulty but less suspicious than the “five” category. The observer is expected to rate the 75 easy diseased cases as “fives” and the remaining cases as less than “fives” yielding the operating point (0, 0.75). No amount of coaxing to “spread their ratings” is going to convince the observer to rate with “twos”, “threes” and “fours” any of the 75 easy diseased cases. If the cases are obviously diseased, and that is what is meant by easy diseased cases, they are supposed to be rated fives, i.e., definitely diseased. Forcing them to rate some of them as “probably diseased” or “possibly diseased” would be like altering the reading paradigm to fit the convenience of the researcher, never a good idea.

6.14.4 A reasonable fit to the degenerate dataset

Theorem: given an observed operating point the line connecting that point to (1, 1) represents a lower bound on achievable performance by the observer.

The observer achieves the lower bound by guessing to classify the remaining cases, i.e., those not contributing to the observed operating point. This is how it works: having rated the 75 easy diseased cases as “fives” and the rest of the cases in lower bins the guessing observer randomly rates 1/5th of the remaining cases in the “four” bin. This would add 5 diseased cases (25/5) and 20 non-diseased cases (100/5) in the “four” bin yielding the interior point (0.20, 0.80). If the observer randomly rates 2/5th of the cases to the “four” bin rating category one obtains the interior point (0.40, 0.85). By simply increasing the fraction of cases that are randomly rated “fours” the observer can move the operating point along the straight line connecting (0, 0.75) and (1, 1), as in plot D in Fig. 6.8. Since it involves guessing this must represent a lower bound on performance.

The lowest possible performance yields a unique ROC curve: any higher ROC curve would not be unique.

REFERENCES

A more complicated version of this model would allow the mean of the non-diseased distribution to be non-zero and its variance different from unity. The resulting 4-parameter model is no more general than the 2-parameter model. The reason is that one is free to transform the decision variable, and associated thresholds, by applying arbitrary monotonic increasing function transformation, which do not change the ordering of the ratings and hence do not change the ROC curve. So if the mean of the noise distribution were non-zero, subtracting this value from all Z-samples would shift the effective mean of the non-diseased distribution to zero (the shifted Z-values are monotonically related to the original values) and the mean of the shifted diseased distribution becomes \(\mu_2-\mu_1\). Next, one scales or divides (division by a positive number is also a monotonic transformation) all the Z-samples by \(\sigma_1\), resulting in the scaled non-diseased distribution having unit variance, and the scaled diseased distribution has mean \(\frac{\mu_2-\mu_1}{\sigma_1}\) and variance \((\frac{\sigma_2}{\sigma_1})^2\). Therefore, if one starts with 4 parameters then one can, by simple shifting and scaling operations, reduce the model to 2 parameters, as in Eqn. (6.1).↩︎

This curve is not binormal as the truncation destroys the normality of the two distributions↩︎