Chapter 11 CAD optimal operating point

11.2 Introduction

A familiar problem for the computer aided detection or artificial intelligence (CAD/AI) algorithm designer is how to set the reporting threshold of the algorithm. Assuming designer-level mark-rating FROC data are available for the algorithm, a decision needs to be made as to the optimal reporting threshold, i.e., the minimum rating a mark must have before it is shown to the radiologist or passed to the next stage of the AI algorithm. In what follows, references to CAD apply equally to AI algorithms.

The problem has been solved in the context of ROC analysis (C. E. Metz 1978), namely, the optimal operating point on the ROC corresponds to where its slope equals a specific value determined by disease prevalence and the cost of decisions in the four basic binary paradigm categories: true and false positives and true and false negatives. In practice the costs are difficult to quantify. However, for equal numbers of diseased and non-diseased cases and equal costs it can be shown that the slope of the ROC curve at the optimal operating point is unity. For a proper ROC curve this corresponds to the point that maximizes the Youden-index (Youden 1950). Typically this index is maximized at the point that is closest to the (0,1) corner of the ROC.
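The equal-prevalence, equal-cost case can be illustrated numerically. The following is a minimal sketch that assumes an equal-variance binormal ROC model, which is not the model used in the rest of this chapter; the separation `d` and the function names are hypothetical choices made for illustration. It maximizes the Youden-index over the threshold and checks that the ROC slope at the maximum is close to unity.

```r
# Equal-variance binormal ROC model (an illustrative assumption):
# non-diseased ratings ~ N(0, 1), diseased ratings ~ N(d, 1).
d <- 2  # hypothetical separation of the two distributions

fpf <- function(thr) pnorm(-thr)             # false positive fraction
tpf <- function(thr) pnorm(d - thr)          # true positive fraction
youden <- function(thr) tpf(thr) - fpf(thr)  # sensitivity + specificity - 1

# Maximize the Youden-index over the reporting threshold
cOpt <- optimize(youden, interval = c(-5, 5), maximum = TRUE)$maximum

# Slope of the ROC curve at a threshold: ratio of the two densities
rocSlope <- function(thr) dnorm(d - thr) / dnorm(thr)

cat(sprintf("optimal threshold = %.3f, ROC slope there = %.3f\n",
            cOpt, rocSlope(cOpt)))
```

Under this assumed model the maximum falls at half the separation of the two distributions, where the two densities are equal and the slope is therefore one.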

Lacking an analytic procedure for determining the optimal threshold, CAD designers currently set imaging site-specific reporting thresholds in consultation with radiologists. For example, if radiologists at an imaging site are comfortable with more false marks as the price of potentially greater lesion-level sensitivity, the reporting threshold for them is adjusted downward.

This chapter describes an analytic method for finding the optimal reporting threshold based on maximizing AUC (area under curve) of the wAFROC curve. For comparison the Youden-index based method was also used.

11.3 Methods

Terminology

- Non-lesion localizations = NLs, i.e., location level “false positives”.

- Lesion localizations = LLs, i.e., location level “true positives”.

- Latent marks = perceived suspicious regions that are not necessarily marked. There is a distinction, see below, between perceived and actual marks.

Background on the radiological search model (RSM) is provided in Chapter 6. The model predicts ROC, FROC and wAFROC curves and is characterized by the three parameters – \(\mu, \lambda, \nu\) – with the following meanings:

The \(\mu\) parameter, \(\mu \ge 0\), is the perceptual signal-to-noise-ratio of lesions. Higher values of \(\mu\) lead to increasing separation of the two unit-variance normal distributions determining the ratings of perceived NLs and LLs. As \(\mu\) increases performance of the algorithm increases.

The \(\lambda\) parameter, \(\lambda \ge 0\), determines the mean number of latent NLs per case. Higher values lead to more latent NL marks per case and decreased performance.

The \(\nu\) parameter, \(0 \le \nu \le 1\), determines the probability of latent LLs, i.e., the probability that any present lesion will be perceived. Higher values of \(\nu\) lead to more latent LL marks and increased performance.

Additionally, there is a threshold parameter \(\zeta_1\) with the property that a latent mark is actually marked only if its rating exceeds \(\zeta_1\). Therefore higher values of \(\zeta_1\) correspond to more stringent reporting criteria and fewer actual marks. As will be shown next, net performance as measured by \(\text{wAFROC}_\text{AUC}\) or the Youden-index peaks at an optimal value of \(\zeta_1\). The purpose of this chapter is to investigate this effect, i.e., given the 3 RSM parameters and the figure of merit to be optimized (i.e., \(\text{wAFROC}_\text{AUC}\) or the Youden-index), to determine the optimal value of \(\zeta_1\).
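For orientation, the operating point implied by a threshold \(\zeta_1\) can be written out explicitly. The expressions below are a summary stated as an assumption about the parameterization (they are not quoted from Chapter 6), chosen because they reproduce the \(\left( \text{NLF}, \text{LLF}\right)\) entries tabulated in this chapter. Here \(\Phi\) is the standard normal cumulative distribution function and \(f_L\) is the fraction of diseased cases containing \(L\) lesions.

\[
\begin{aligned}
\text{NLF}(\zeta_1) &= \lambda \, \Phi(-\zeta_1) \\
\text{LLF}(\zeta_1) &= \nu \, \Phi(\mu - \zeta_1) \\
\text{FPF}(\zeta_1) &= 1 - \exp\left(-\text{NLF}(\zeta_1)\right) \\
\text{TPF}(\zeta_1) &= 1 - \exp\left(-\text{NLF}(\zeta_1)\right) \sum_L f_L \left(1 - \text{LLF}(\zeta_1)\right)^L
\end{aligned}
\]

For example, with \(\mu = 2\), \(\lambda = 1\), \(\nu = 0.9\) and \(\zeta_1 = 1.095\) these give \(\text{NLF} = \Phi(-1.095) \approx 0.137\) and \(\text{LLF} = 0.9 \, \Phi(0.905) \approx 0.735\), which agrees with the corresponding Youden-index row of Table 11.1.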

In the following sections the RSM \(\lambda\) parameter is varied (for fixed \(\mu\) and \(\nu\)) and the corresponding optimal \(\zeta_1\) determined by maximizing either \(\text{wAFROC}_\text{AUC}\) or the Youden-index.

For organizational reasons only the summary results for varying \(\mu\) or \(\nu\) are shown in the body of this chapter. Detailed results are in Appendix 11.10 which also has results for limiting cases of high and low ROC performance.

The \(\text{wAFROC}_\text{AUC}\) figure of merit is implemented in the RJafroc function UtilAnalyticalAucsRSM. The Youden-index is defined as sensitivity plus specificity minus 1. Sensitivity is implemented in function RSM_TPF and specificity is the complement of RSM_FPF.
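The Youden-index optimization can be sketched in base R without calling the RJafroc functions named above, whose argument lists are not shown in this chapter. The function names below are hypothetical, and the operating-point formulas are an assumption about the RSM parameterization that is consistent with the tabulated values in this chapter.

```r
# RSM operating-point functions in base R (a sketch, not the RJafroc code)
nlf <- function(zeta, lambda) lambda * pnorm(-zeta)   # NLs per case
llf <- function(zeta, mu, nu) nu * pnorm(mu - zeta)   # fraction of lesions marked
fpf <- function(zeta, lambda) 1 - exp(-nlf(zeta, lambda))
tpf <- function(zeta, mu, lambda, nu, lesDistr) {
  # lesDistr[L] = fraction of diseased cases with L lesions
  L <- seq_along(lesDistr)
  probNoLL <- sum(lesDistr * (1 - llf(zeta, mu, nu))^L)
  1 - exp(-nlf(zeta, lambda)) * probNoLL
}
# Youden-index = sensitivity + specificity - 1 = TPF - FPF
youden <- function(zeta, mu, lambda, nu, lesDistr) {
  tpf(zeta, mu, lambda, nu, lesDistr) - fpf(zeta, lambda)
}

mu <- 2; lambda <- 1; nu <- 0.9
lesDistr <- c(0.5, 0.5)

# Search range for zeta_1 is an assumption made for this sketch
zetaY <- optimize(youden, interval = c(-5, 5), maximum = TRUE,
                  mu = mu, lambda = lambda, nu = nu,
                  lesDistr = lesDistr)$maximum

cat(sprintf("zeta1 = %.3f, NLF = %.3f, LLF = %.3f\n",
            zetaY, nlf(zetaY, lambda), llf(zetaY, mu, nu)))
```

The resulting threshold and operating point can be compared against the Youden-index row for \(\lambda = 1\) in Table 11.1.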

11.4 Varying \(\lambda\) optimizations

muArr <- c(2)

lambdaArr <- c(1, 2, 5, 10)

nuArr <- c(0.9)

lesDistr <- c(0.5, 0.5)

relWeights <- c(0.5, 0.5)

For \(\mu = 2\) and \(\nu = 0.9\), \(\text{wAFROC}_\text{AUC}\) and Youden-index optimizations were performed for \(\lambda = 1, 2, 5, 10\). Half of the diseased cases contained one lesion and the rest contained two lesions. On cases with two lesions the lesions were assigned equal weights (i.e., equal clinical importance).

The following quantities were calculated:

\(\zeta_1\): the optimal threshold;

\(\text{wAFROC}_\text{AUC}\): the wAFROC figure of merit;

\(\text{ROC}_\text{AUC}\): the ROC figure of merit;

\(\text{NLF}\) and \(\text{LLF}\): the coordinates of the operating point on the FROC curve corresponding to \(\zeta_1\).
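The \(\text{wAFROC}_\text{AUC}\) optimization for the four values of \(\lambda\) can be sketched in base R as follows. This is not the code used to produce the results in this chapter. It assumes that the lesion weights sum to one on each diseased case, so that the wAFROC ordinate equals \(\nu \Phi(\mu - \zeta)\), and that the area consists of the part under the continuous curve plus the part under the straight line from the operating point to (1,1). The function name `wAfrocAuc` and the search interval are hypothetical choices.

```r
mu <- 2; nu <- 0.9
lambdaArr <- c(1, 2, 5, 10)

# wAFROC AUC for reporting threshold zeta1 (sketch under the stated assumptions)
wAfrocAuc <- function(zeta1, mu, lambda, nu) {
  fpf1  <- 1 - exp(-lambda * pnorm(-zeta1))   # abscissa of operating point
  wllf1 <- nu * pnorm(mu - zeta1)             # ordinate of operating point
  # wLLF times d(FPF)/d(zeta), integrated over ratings above zeta1
  integrand <- function(z) {
    nu * pnorm(mu - z) * lambda * dnorm(z) * exp(-lambda * pnorm(-z))
  }
  curveArea <- integrate(integrand, lower = zeta1, upper = Inf,
                         rel.tol = 1e-8)$value
  # add the area under the straight line from the operating point to (1, 1)
  curveArea + (1 - fpf1) * (1 + wllf1) / 2
}

res <- t(sapply(lambdaArr, function(lambda) {
  opt <- optimize(wAfrocAuc, interval = c(-5, 5), maximum = TRUE,
                  mu = mu, lambda = lambda, nu = nu)
  c(lambda = lambda, zeta1 = opt$maximum, wAFROC_AUC = opt$objective,
    NLF = lambda * pnorm(-opt$maximum), LLF = nu * pnorm(mu - opt$maximum))
}))
print(round(res, 3))
```

Each row of `res` holds the optimal threshold, the maximized area and the FROC operating point for one value of \(\lambda\), for comparison with the \(\text{wAFROC}_\text{AUC}\) rows of Table 11.1.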

11.4.1 Summary table

Table 11.1: The FOM column lists the quantity being maximized, the \(\lambda\) column lists the values of \(\lambda\), the \(\zeta_1\) column lists the optimal values that maximize the chosen figure of merit. The \(\text{wAFROC}_\text{AUC}\) column lists the AUCs under the wAFROC curves, the \(\text{ROC}_\text{AUC}\) column lists the AUCs under the ROC curves, and the \(\left( \text{NLF}, \text{LLF}\right)\) column lists the operating point on the FROC curves.

| FOM | \(\lambda\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 1 | -0.007 | 0.864 | 0.929 | (0.503, 0.880) |
|  | 2 | 0.474 | 0.809 | 0.900 | (0.636, 0.843) |
|  | 5 | 1.272 | 0.715 | 0.840 | (0.509, 0.690) |
|  | 10 | 1.856 | 0.645 | 0.774 | (0.317, 0.502) |
| Youden-index | 1 | 1.095 | 0.831 | 0.899 | (0.137, 0.735) |
|  | 2 | 1.362 | 0.781 | 0.865 | (0.173, 0.664) |
|  | 5 | 1.695 | 0.705 | 0.811 | (0.225, 0.558) |
|  | 10 | 1.934 | 0.644 | 0.766 | (0.265, 0.474) |

Inspection of this table reveals the following:

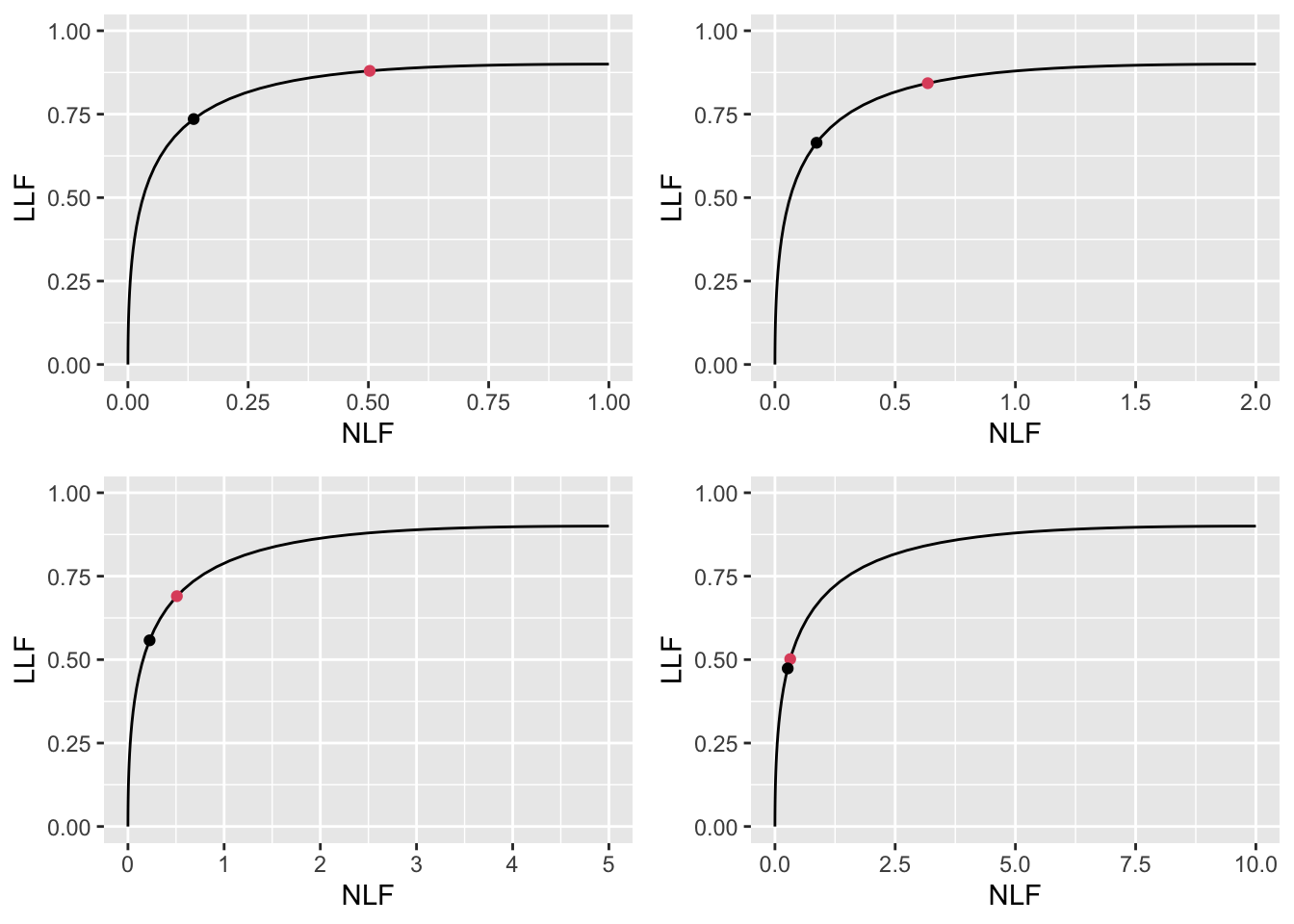

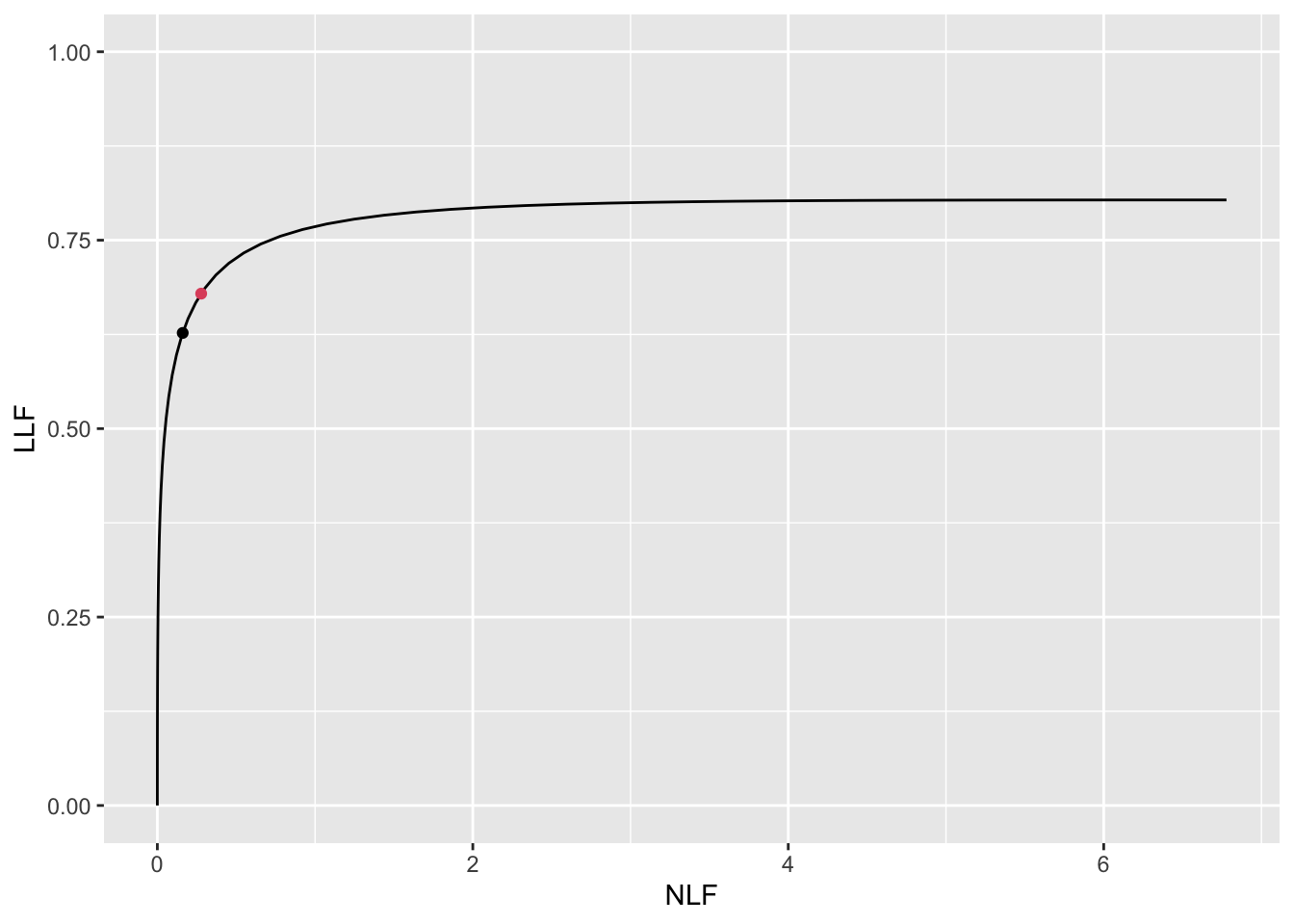

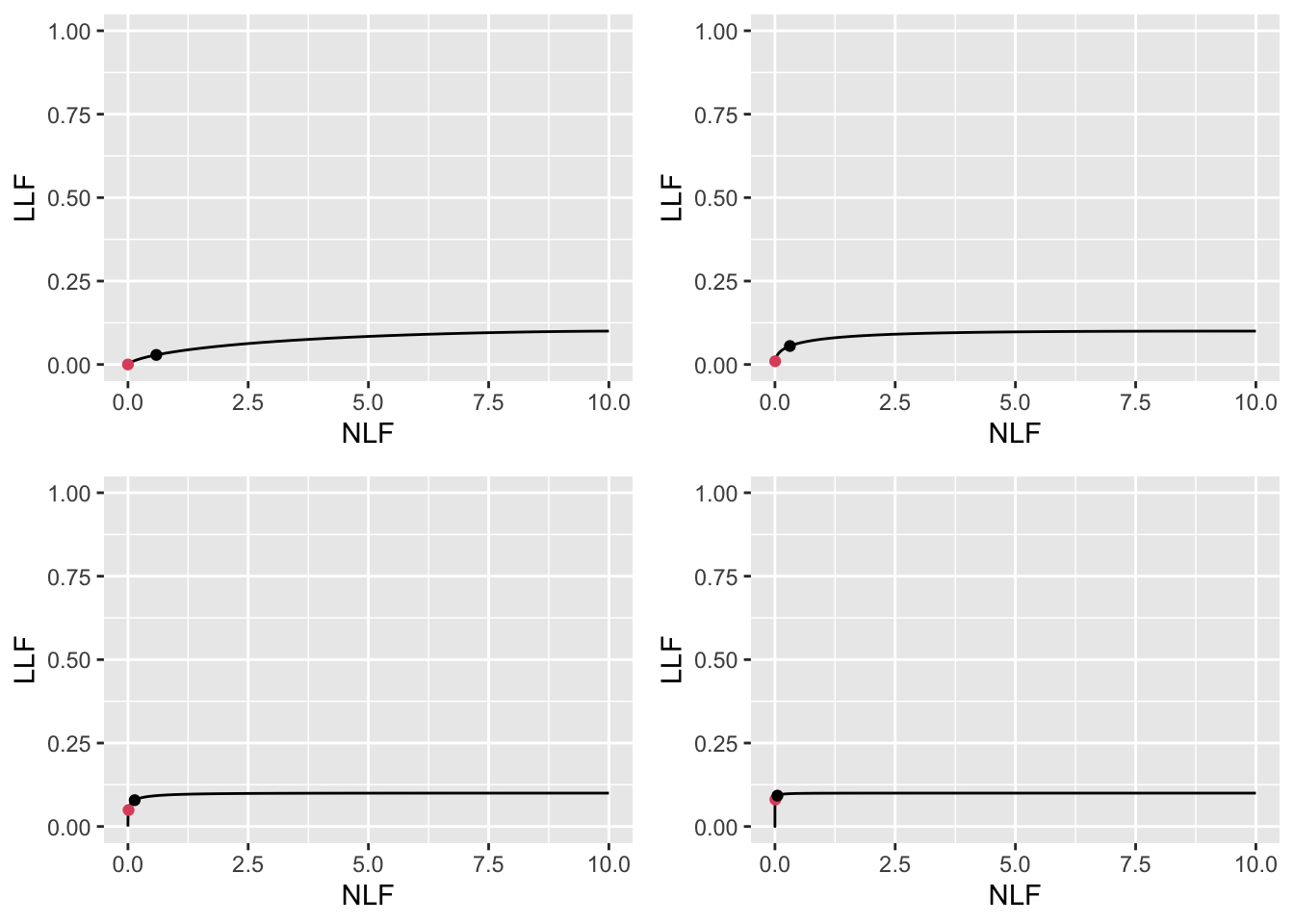

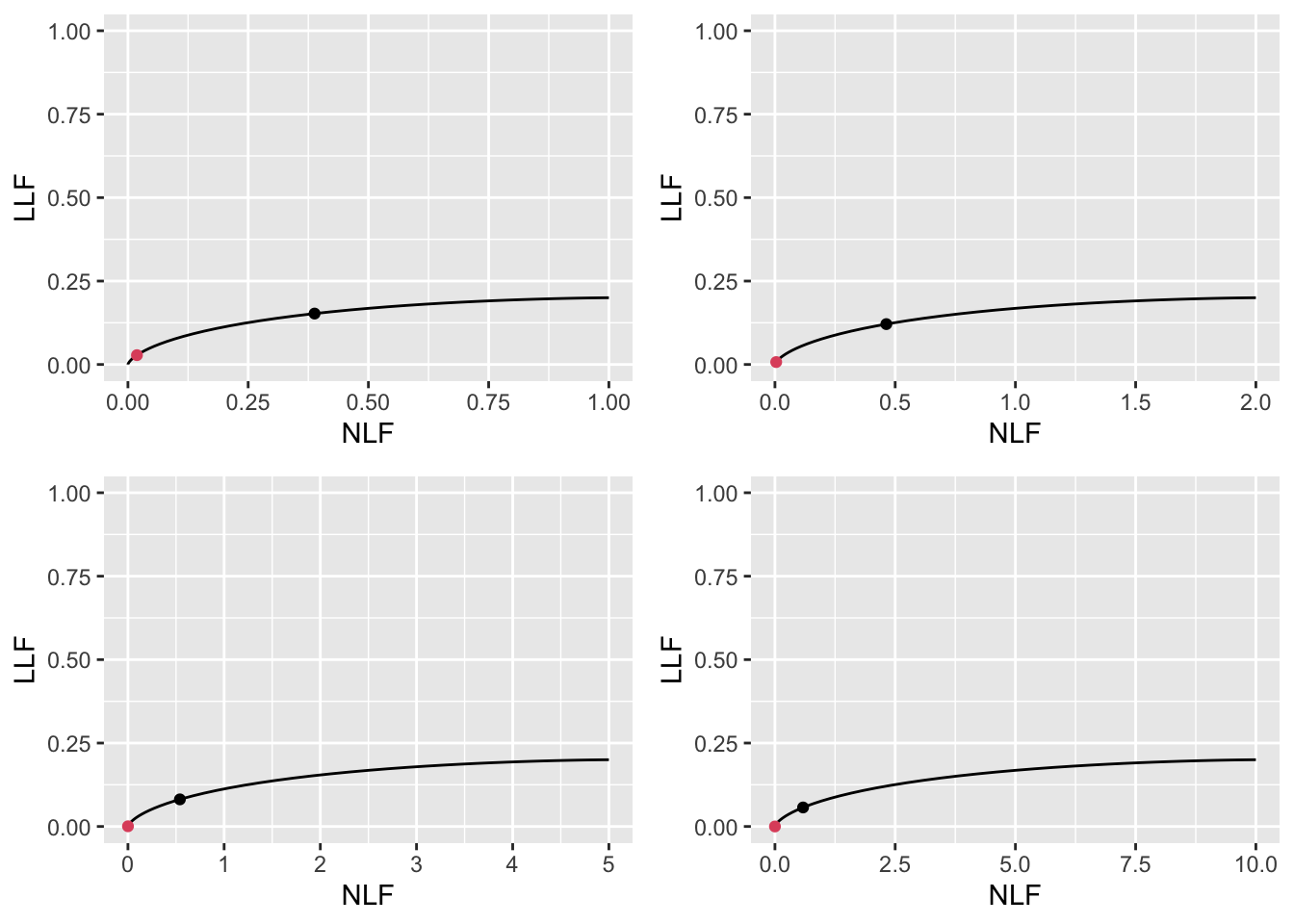

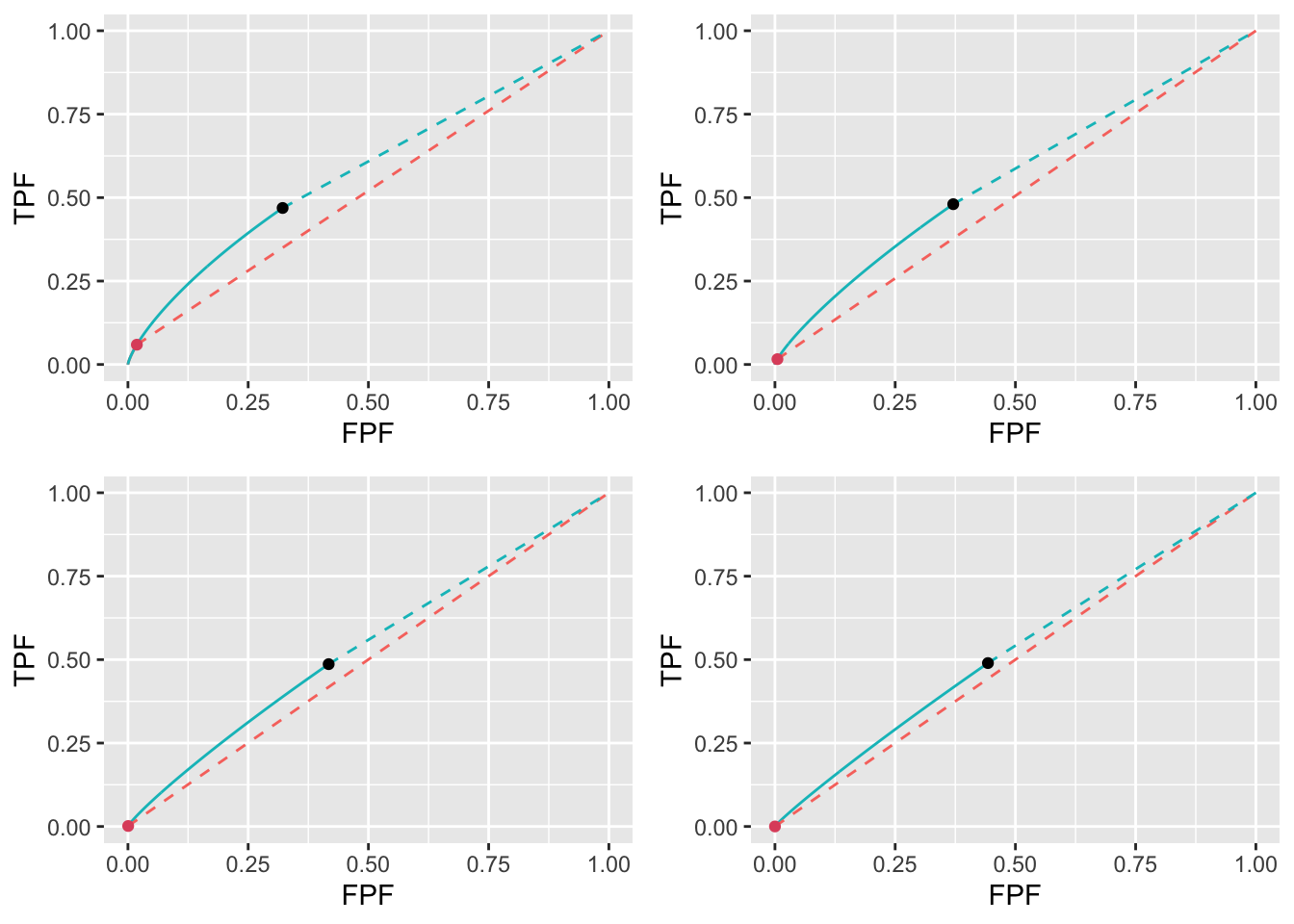

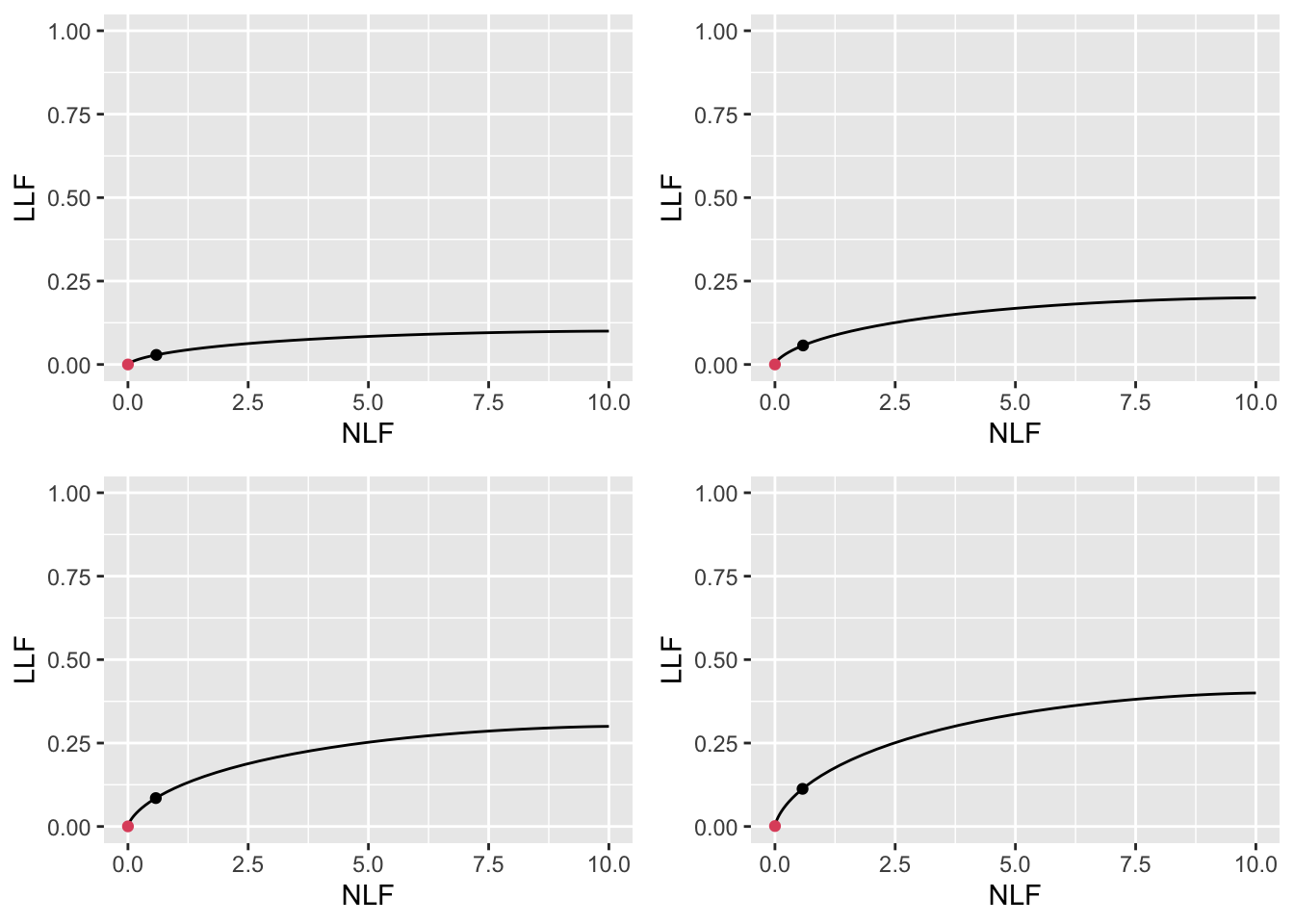

FROC plots, Fig. 11.1: The \(\text{wAFROC}_\text{AUC}\) based optimal thresholds are smaller (i.e., corresponding to laxer reporting criteria) than the corresponding Youden-index based optimal thresholds. The Youden-index based operating point (black dot) is left of the \(\text{wAFROC}_\text{AUC}\) based FROC operating point (red dot). The abscissa difference between the two points decreases with increasing \(\lambda\).

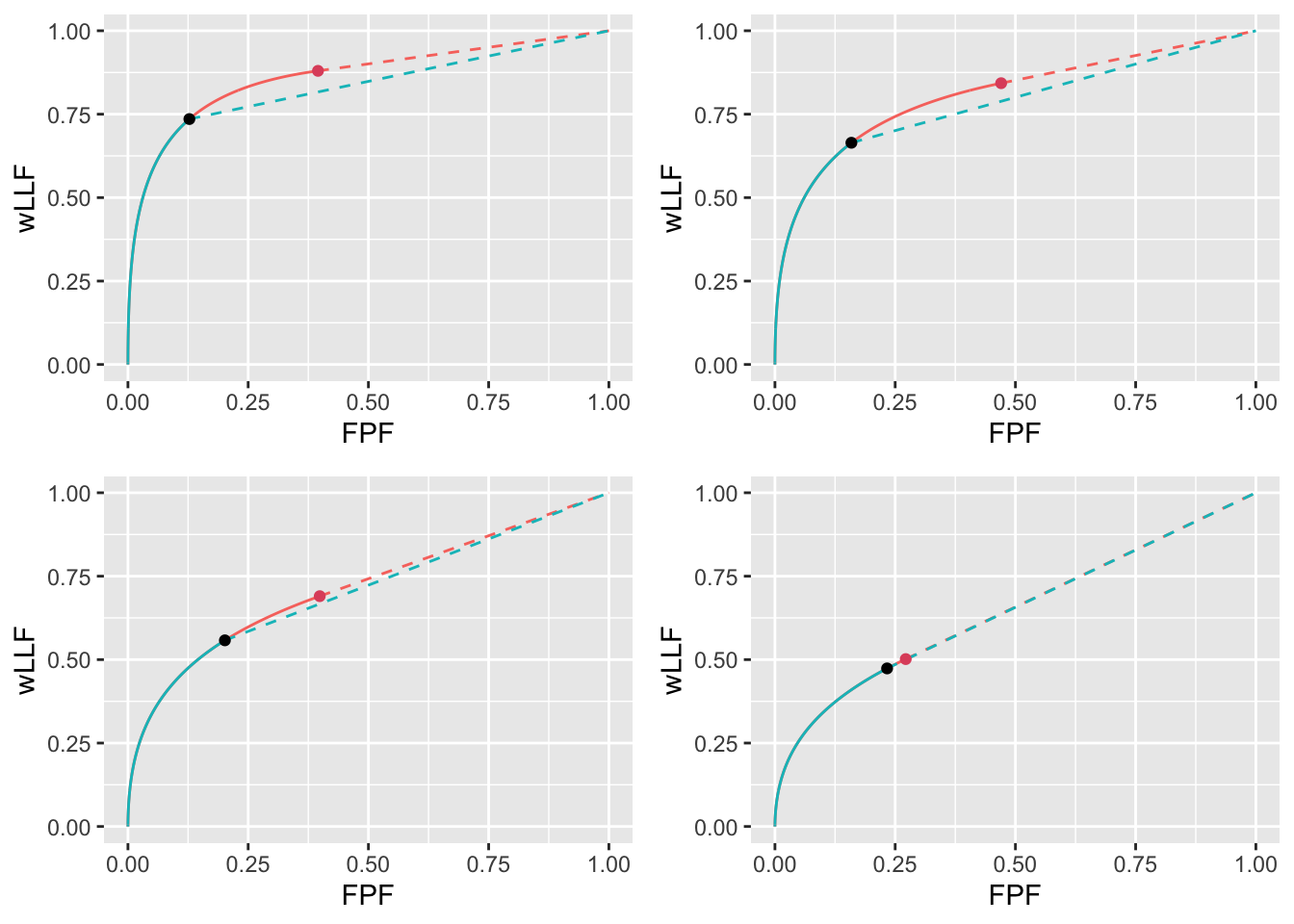

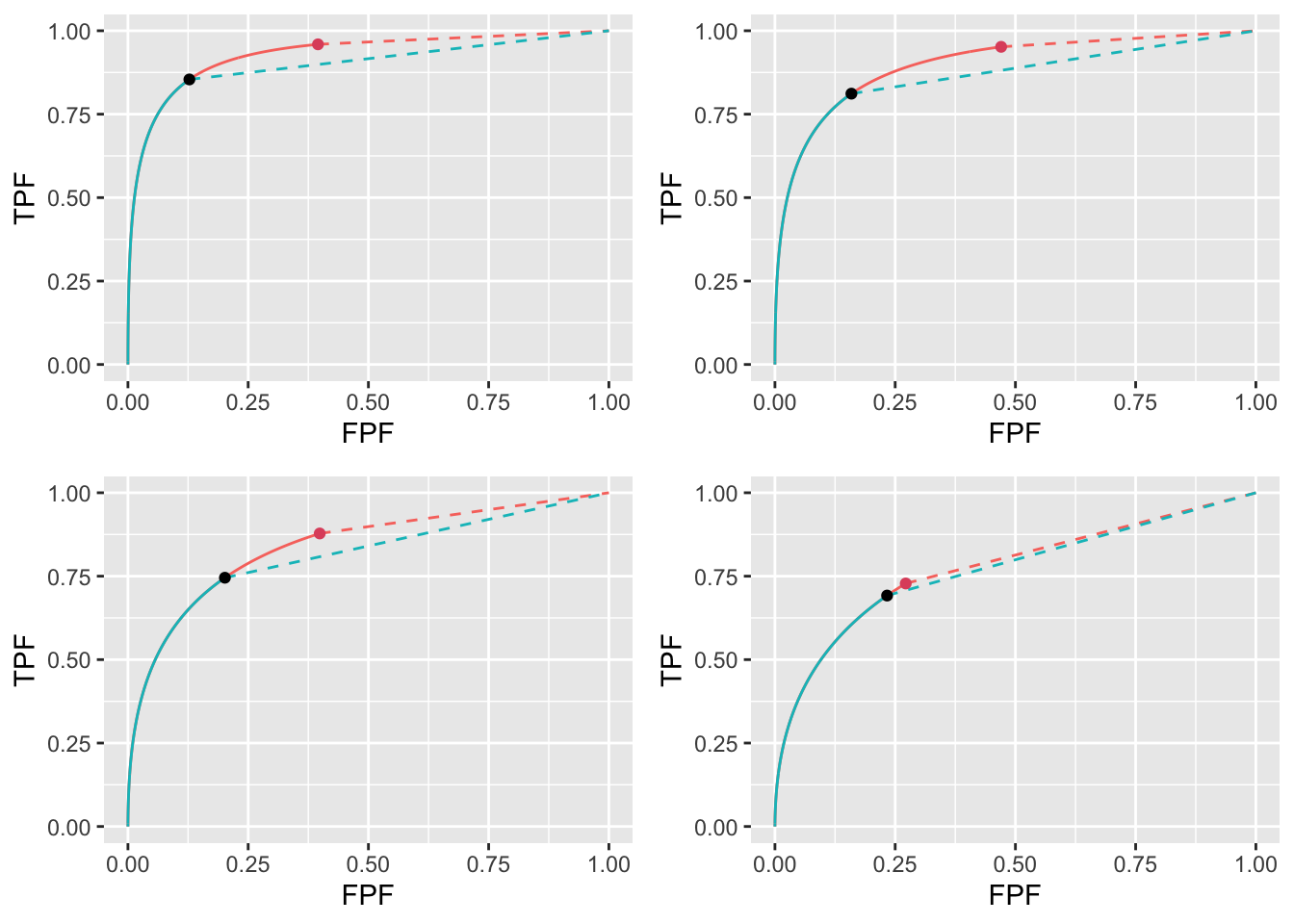

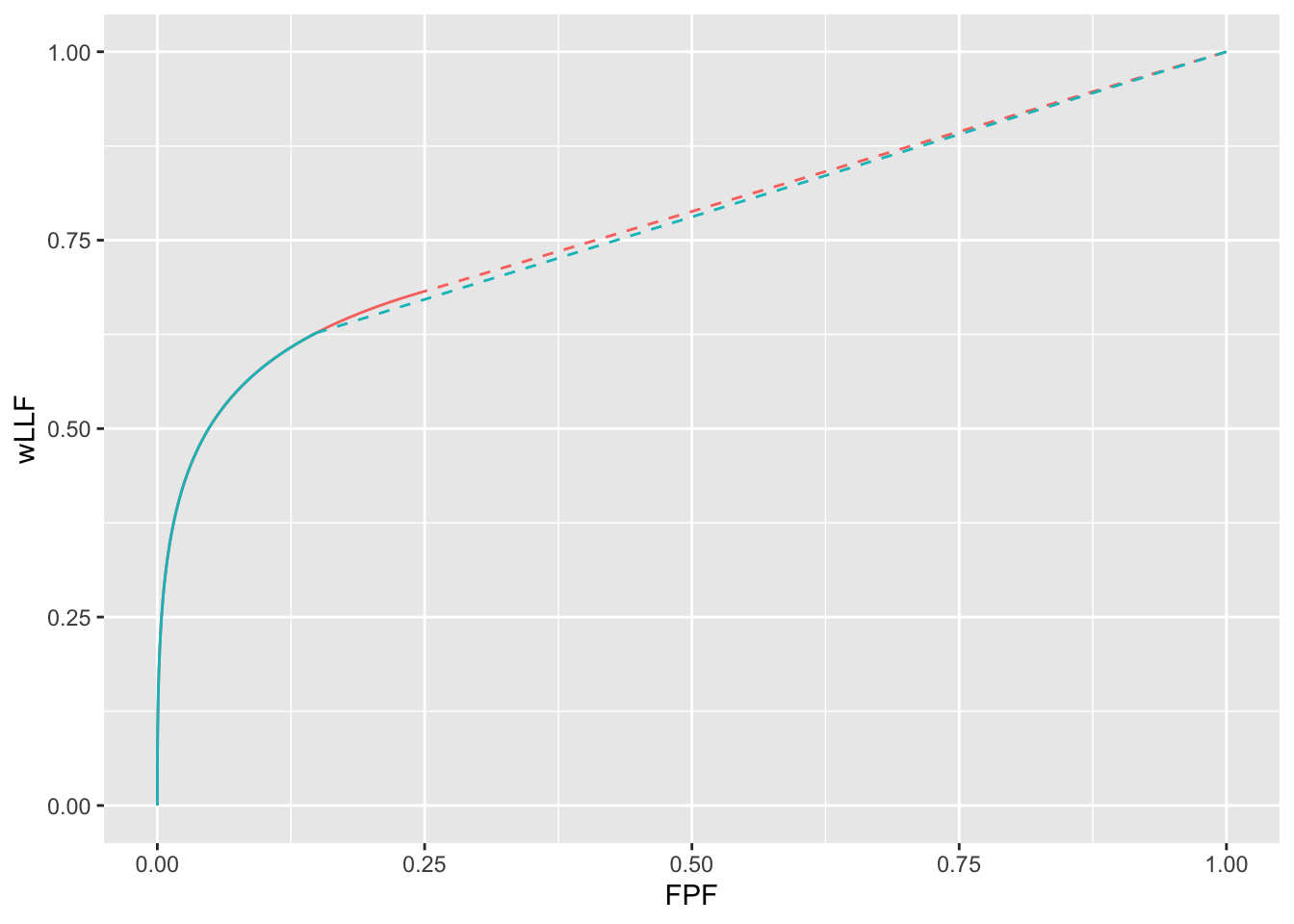

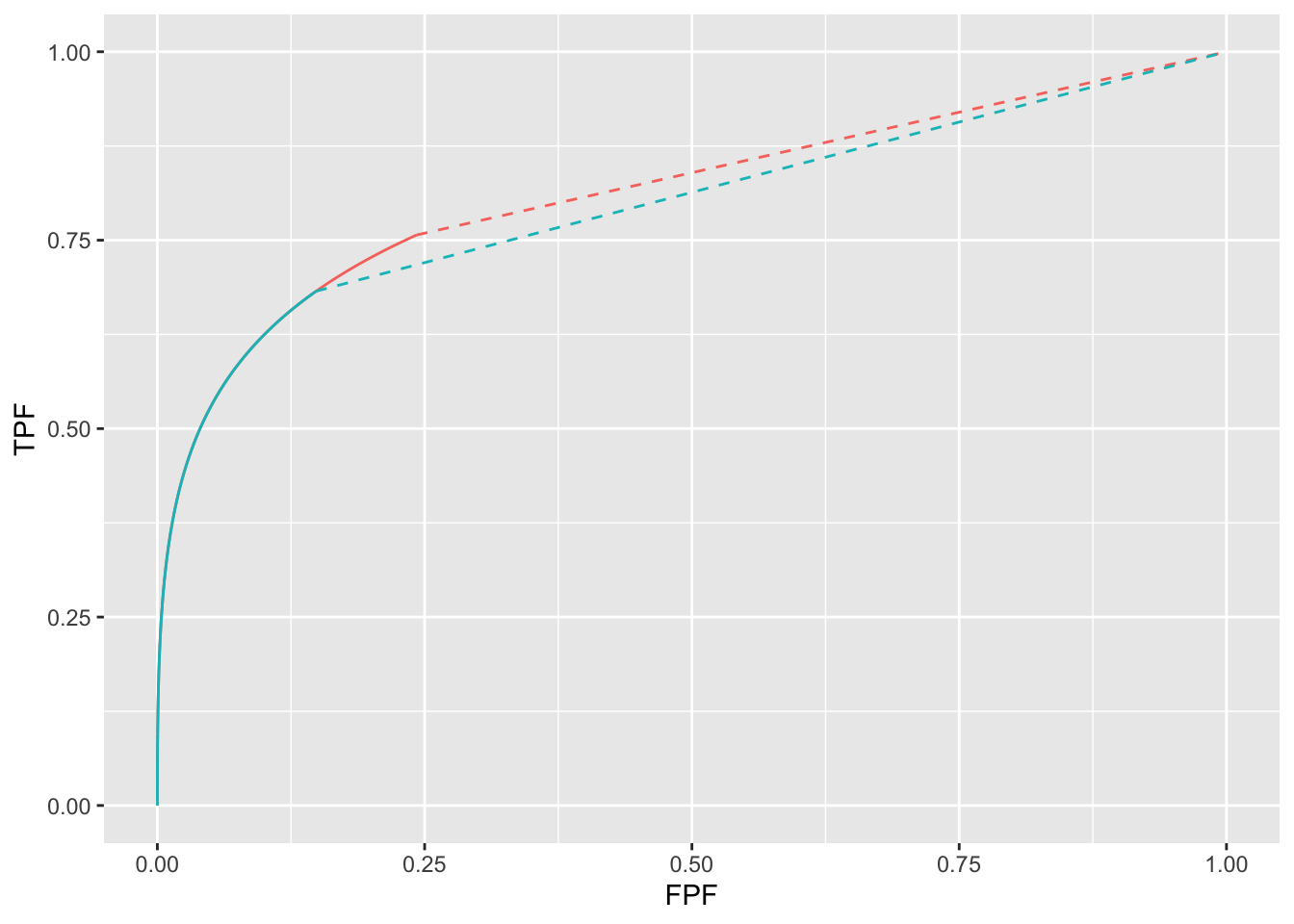

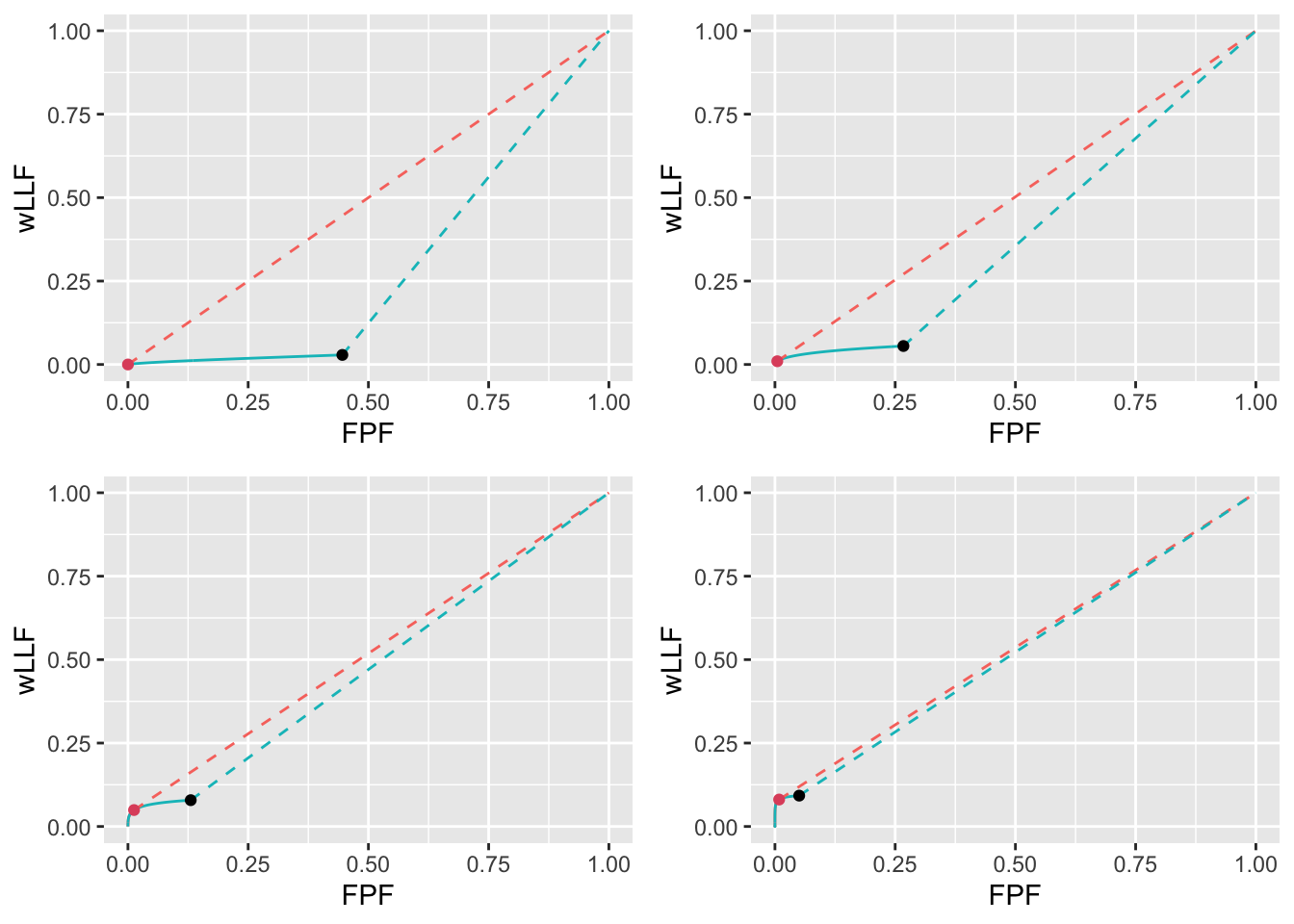

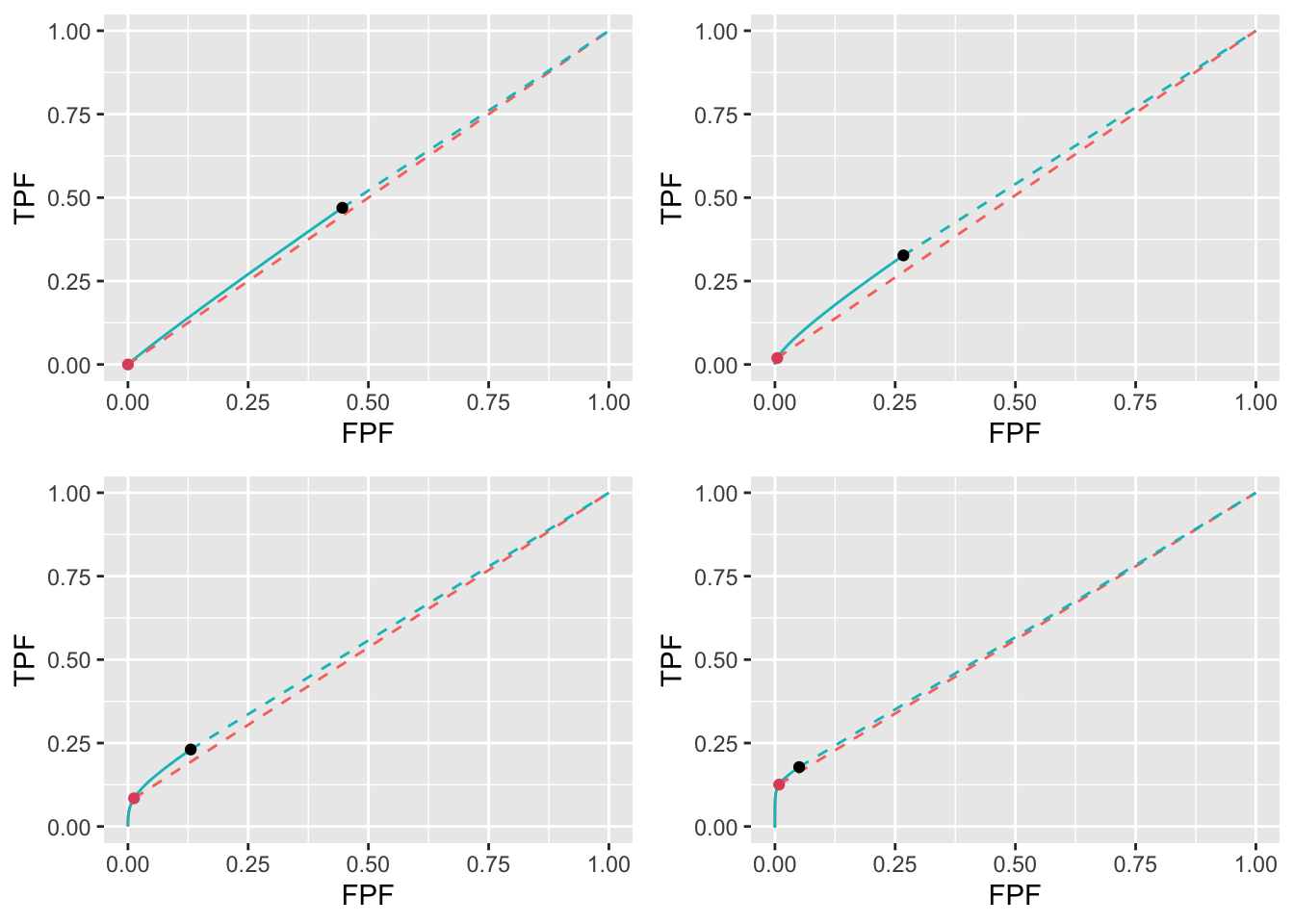

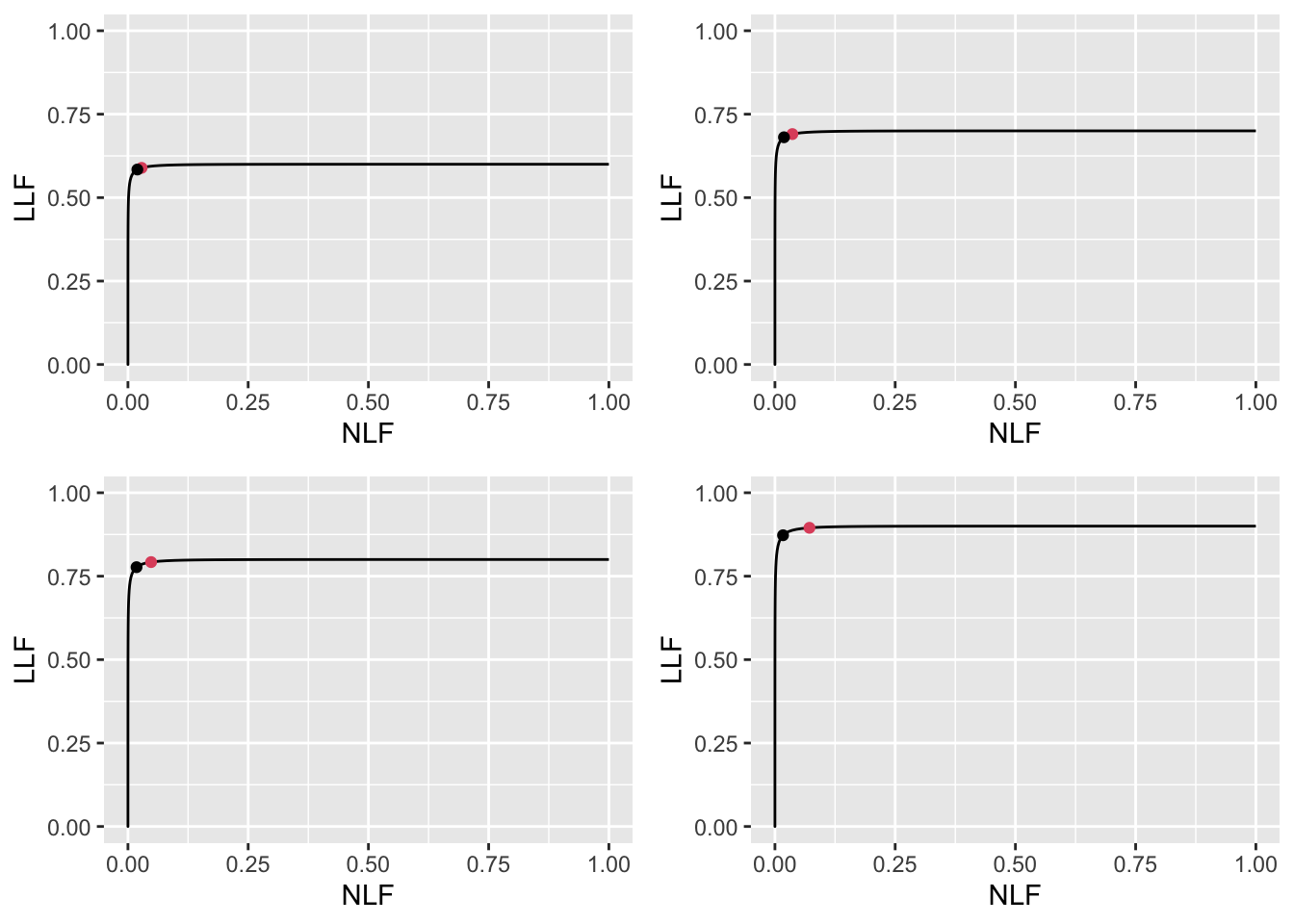

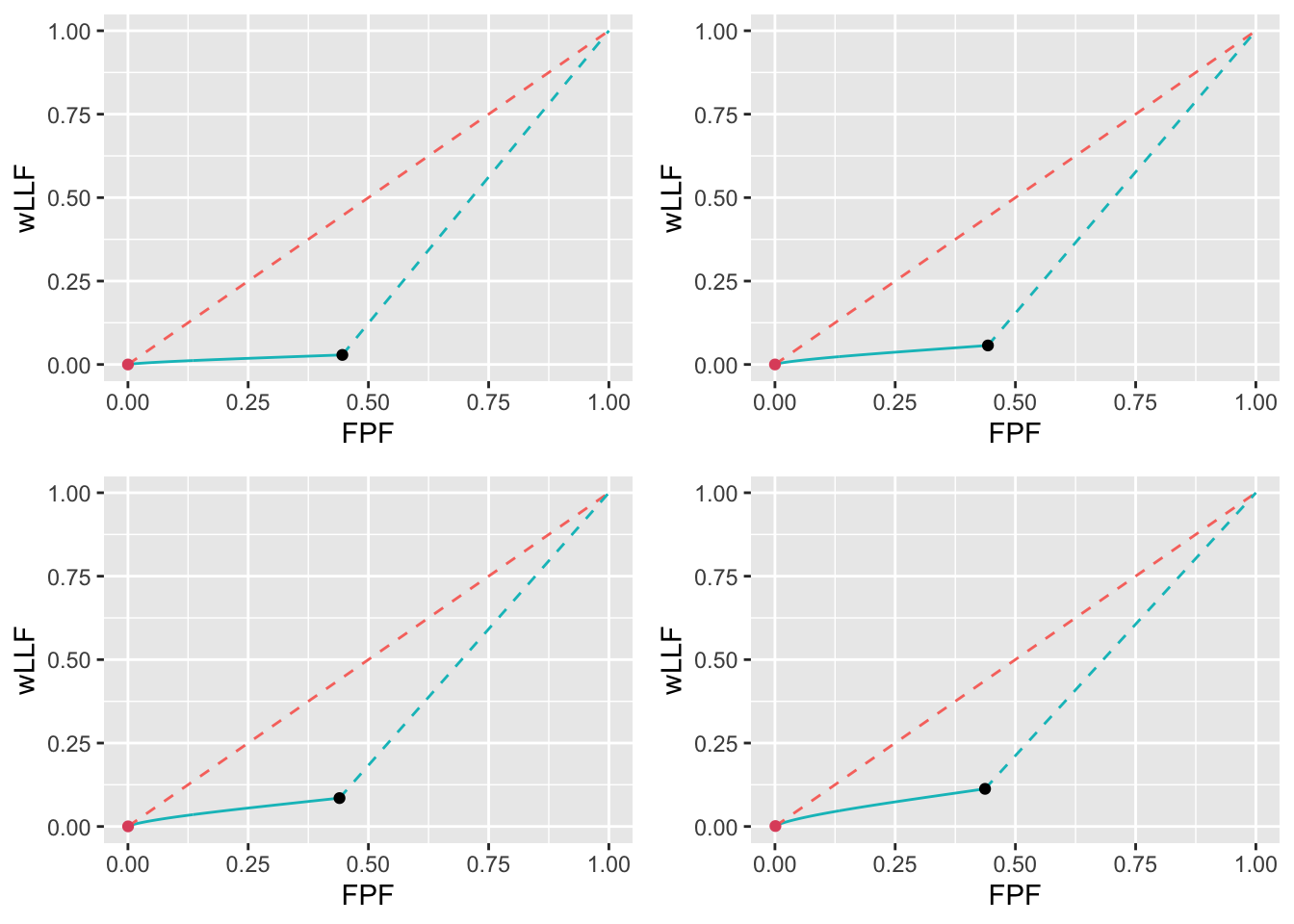

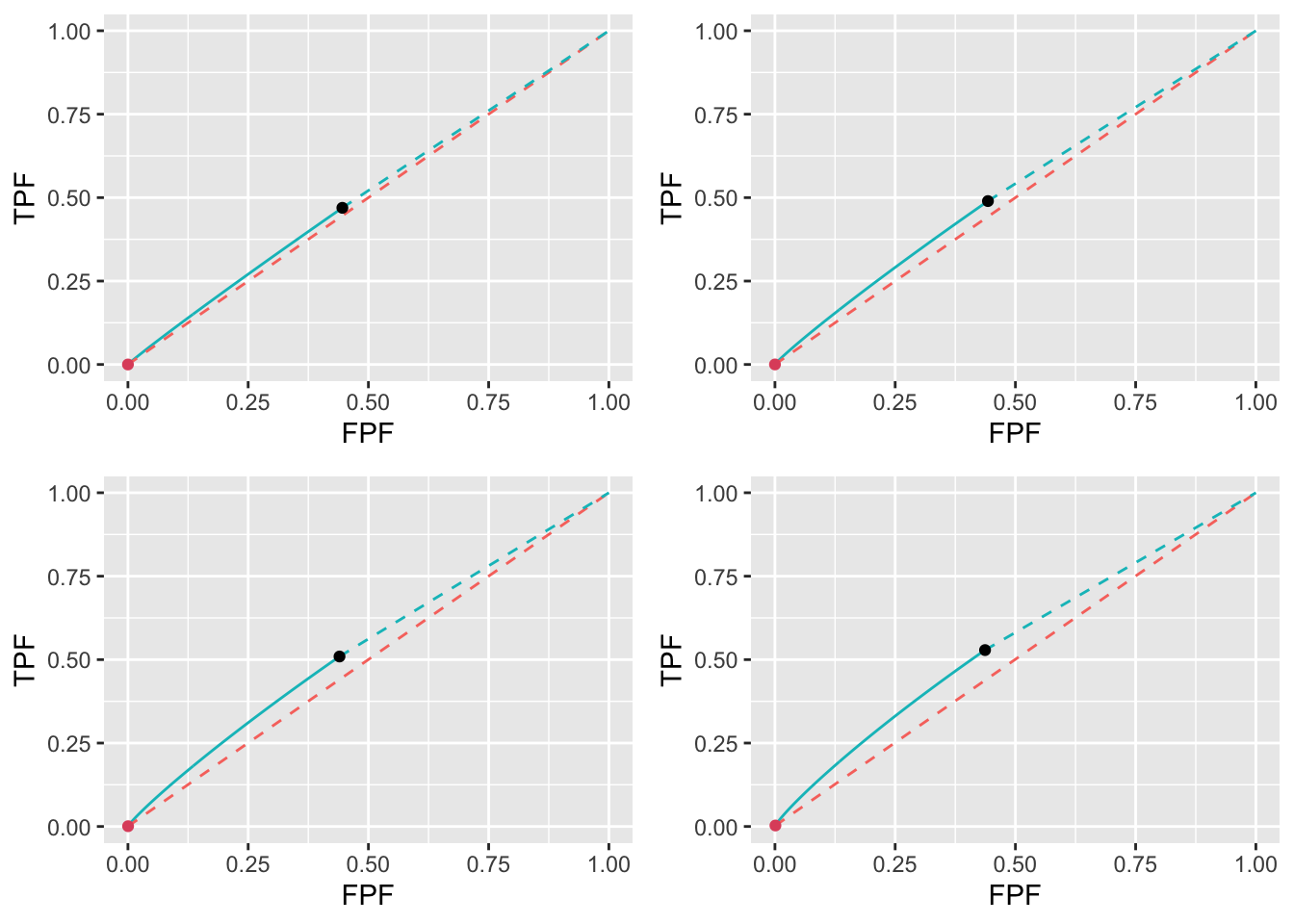

wAFROC, Fig. 11.2, and ROC plots, Fig. 11.3: The Youden-index based optimizations yield lower performance than the corresponding \(\text{wAFROC}_\text{AUC}\) based optimizations and the difference decreases with increasing \(\lambda\).

For either FOM as \(\lambda\) increases \(\zeta_1\) increases (i.e., stricter reporting threshold). When CAD performance decreases the algorithms adopt stricter reporting criteria. This should make sense to the CAD algorithm designer: with decreasing performance one has to be more careful about showing CAD generated marks to the radiologist.

11.4.2 FROC

FIGURE 11.1: FROC plots with superimposed operating points for varying \(\lambda\). The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization.

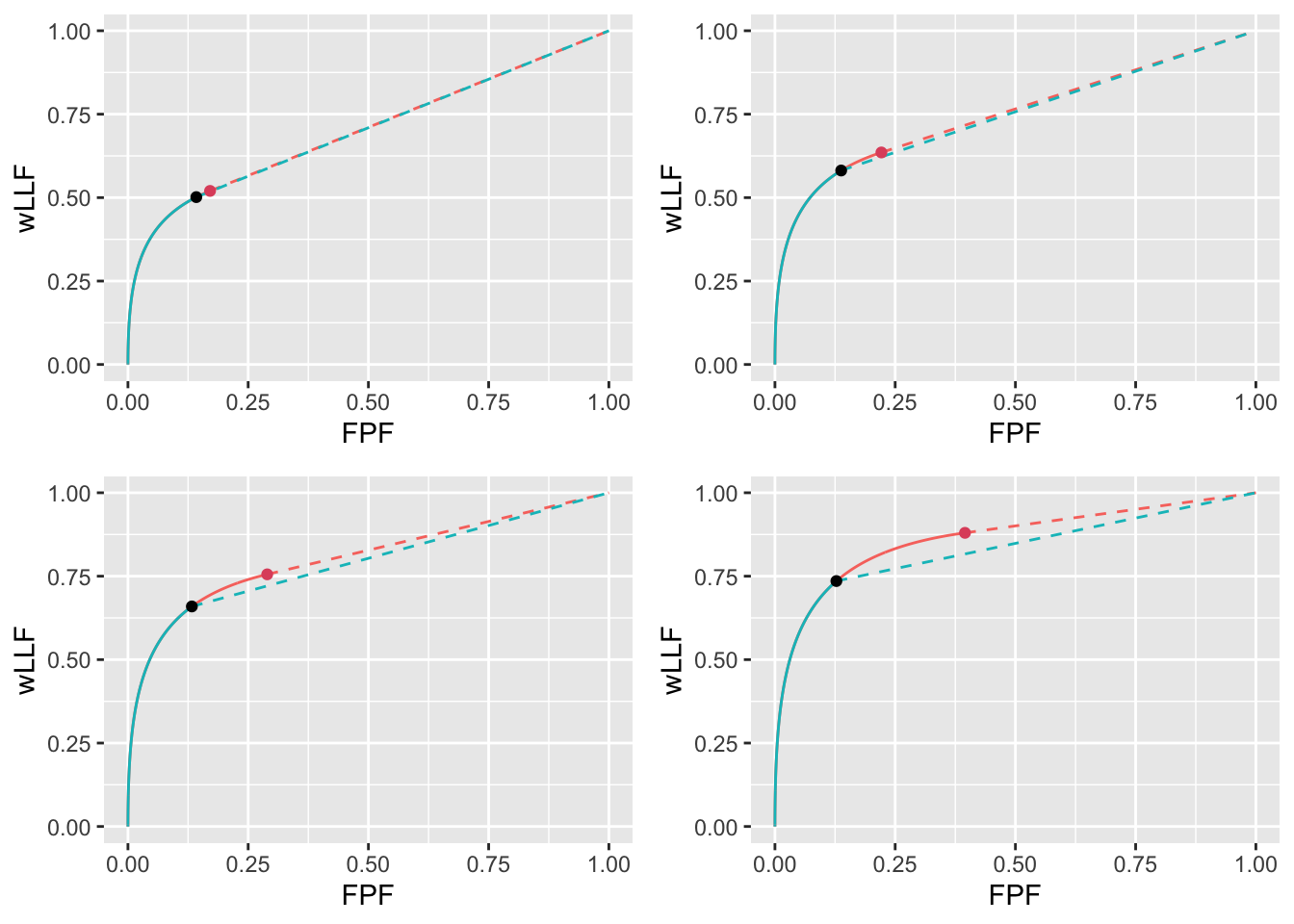

11.4.3 wAFROC

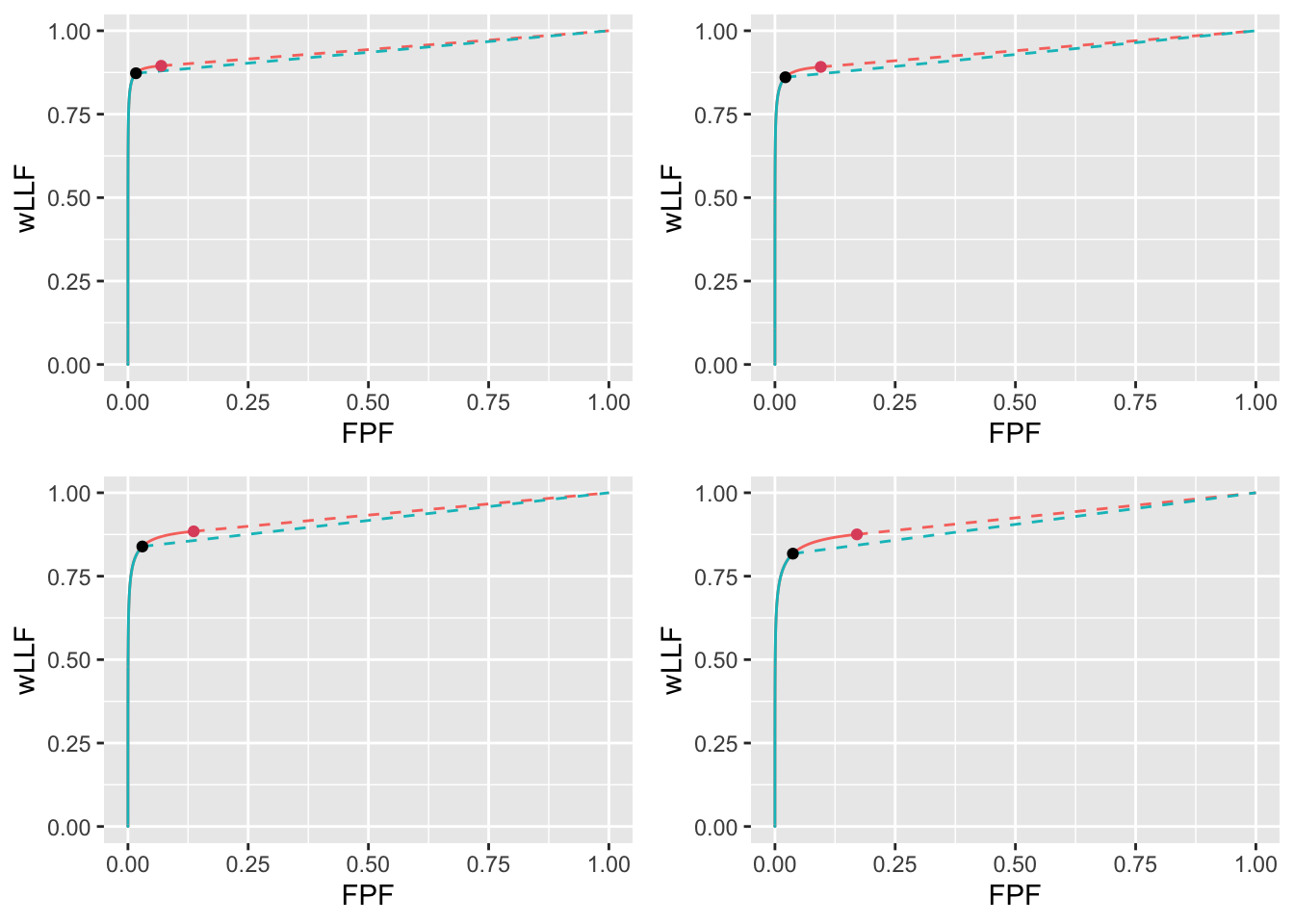

Each wAFROC plot consists of a continuous curve followed by a dashed line. The “red” curve, corresponding to \(\text{wAFROC}_\text{AUC}\) optimization, appears as a “solid-green solid-red dashed-red” curve (the curve is in fact a true red curve complicated by superposition of the green curve over part of its traverse). The “solid-green dashed-green” curve corresponds to Youden-index optimization. As before the black dot denotes the Youden-index based operating point and the red dot denotes the \(\text{wAFROC}_\text{AUC}\) based operating point.

The transition from continuous to dashed is determined by the value of \(\zeta_1\). It occurs at a higher value of \(\zeta_1\) (lower transition point) for the Youden-index optimization. In other words the stricter Youden-index based threshold sacrifices some of the area under the wAFROC resulting in lower performance, particularly for the lower values of \(\lambda\). At the highest value of \(\lambda\) the values of optimal \(\zeta_1\) are similar and both methods make similar predictions.

FIGURE 11.2: wAFROC plots for the two optimization methods: the “solid-green solid-red dashed-red” curve corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the “solid-green dashed-green” curve corresponds to Youden-index optimization. The \(\text{wAFROC}_\text{AUC}\) optimizations yield greater performance than do Youden-index optimizations and the difference decreases with increasing \(\lambda\).

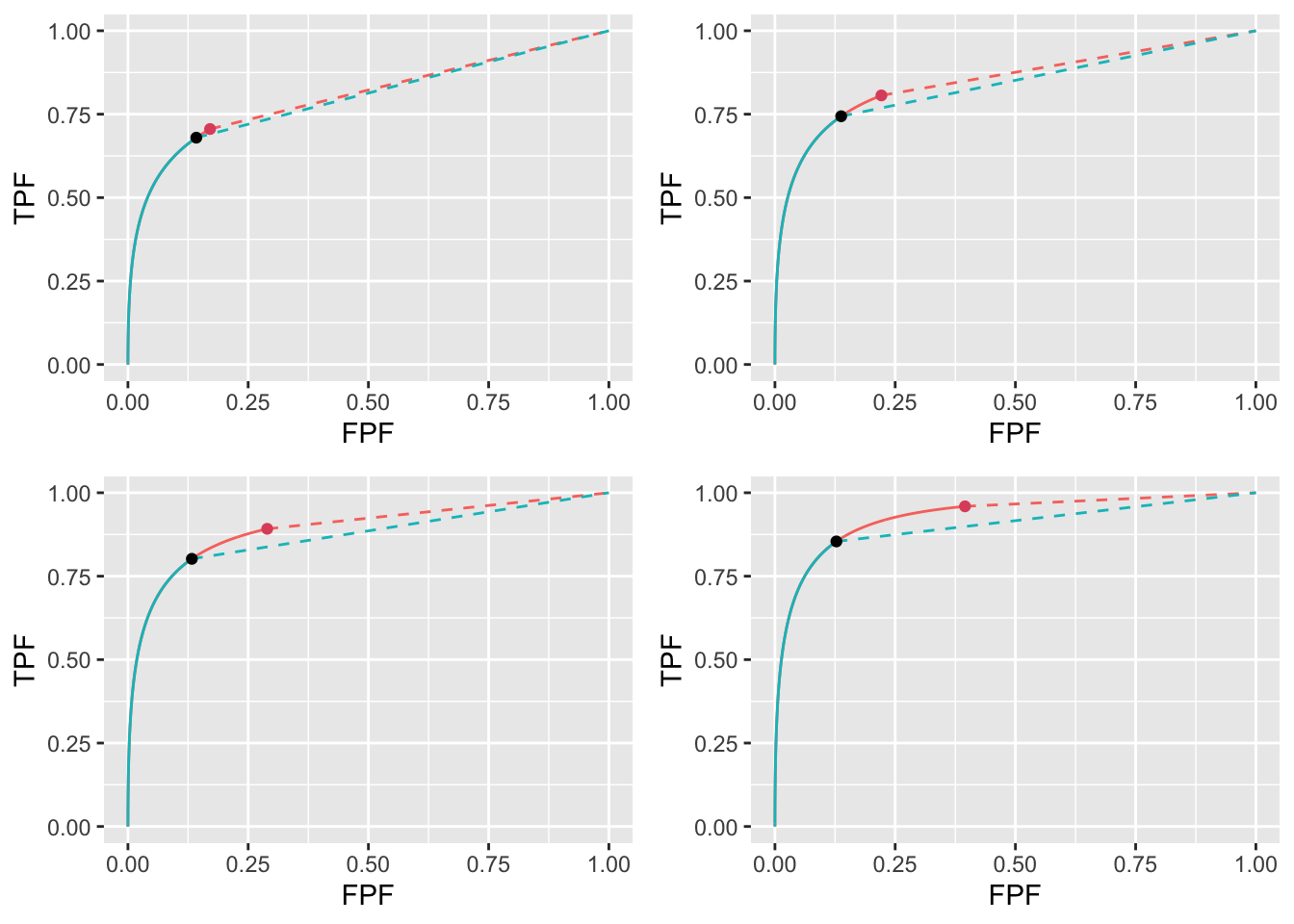

11.4.4 ROC

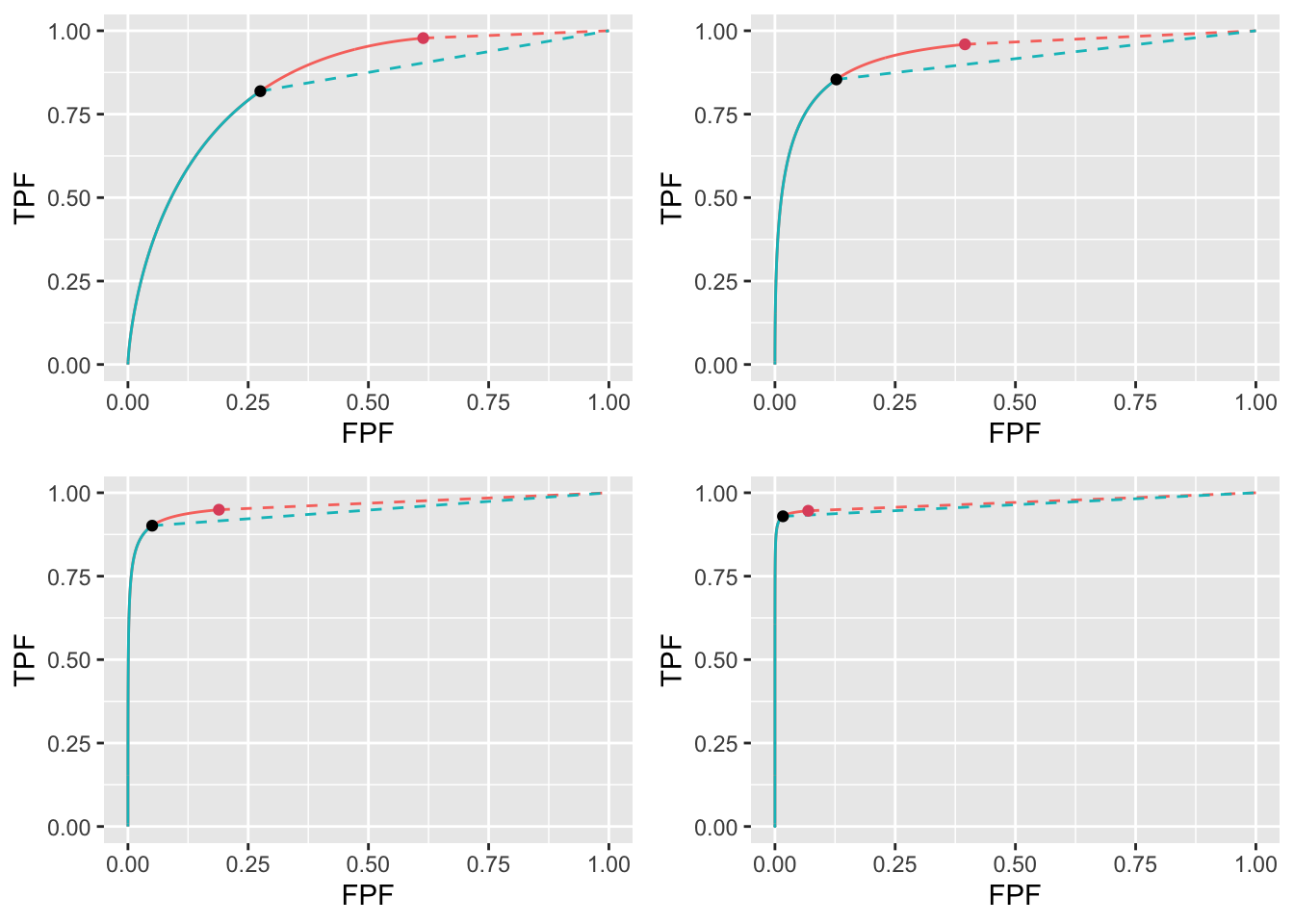

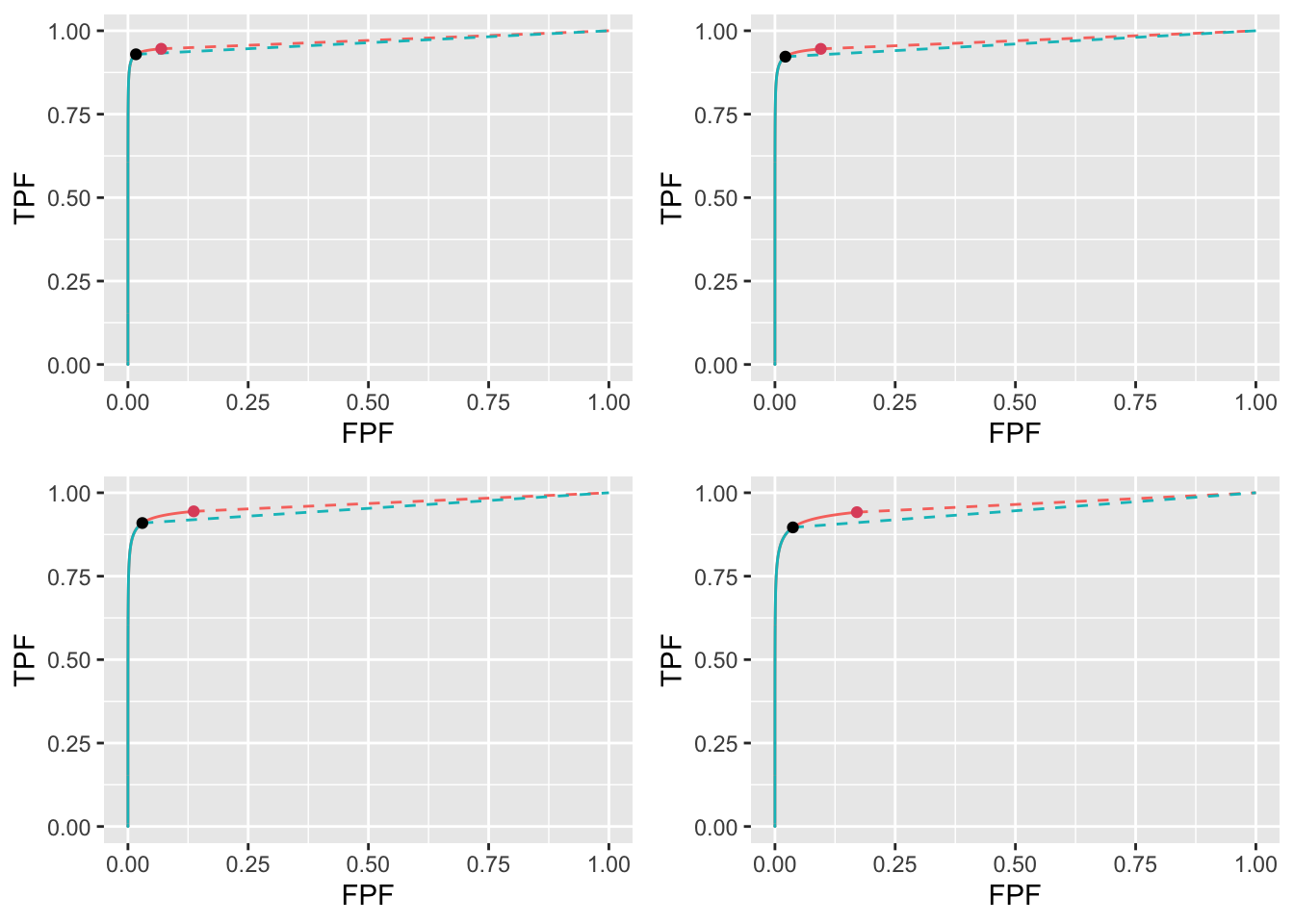

The decrease in \(\text{ROC}_\text{AUC}\) with increasing \(\lambda\) is illustrated in Fig. 11.3 which shows RSM-predicted ROC plots for the two optimization methods for the 4 values of \(\lambda\). Again, each plot consists of a continuous curve followed by a dashed curve and a similar color-coding convention is used as in Fig. 11.2. The ROC plots show similar dependencies as the wAFROC plots: the stricter Youden-index based reporting thresholds sacrifice some of the area under the ROC resulting in lower performance, particularly for the lower values of \(\lambda\).

FIGURE 11.3: ROC plots for the two optimization methods: the “solid-green solid-red dashed-red” curve corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the “solid-green dashed-green” curve corresponds to Youden-index optimization. The \(\text{wAFROC}_\text{AUC}\) optimizations yield greater performance than Youden-index optimizations and the difference decreases with increasing \(\lambda\).

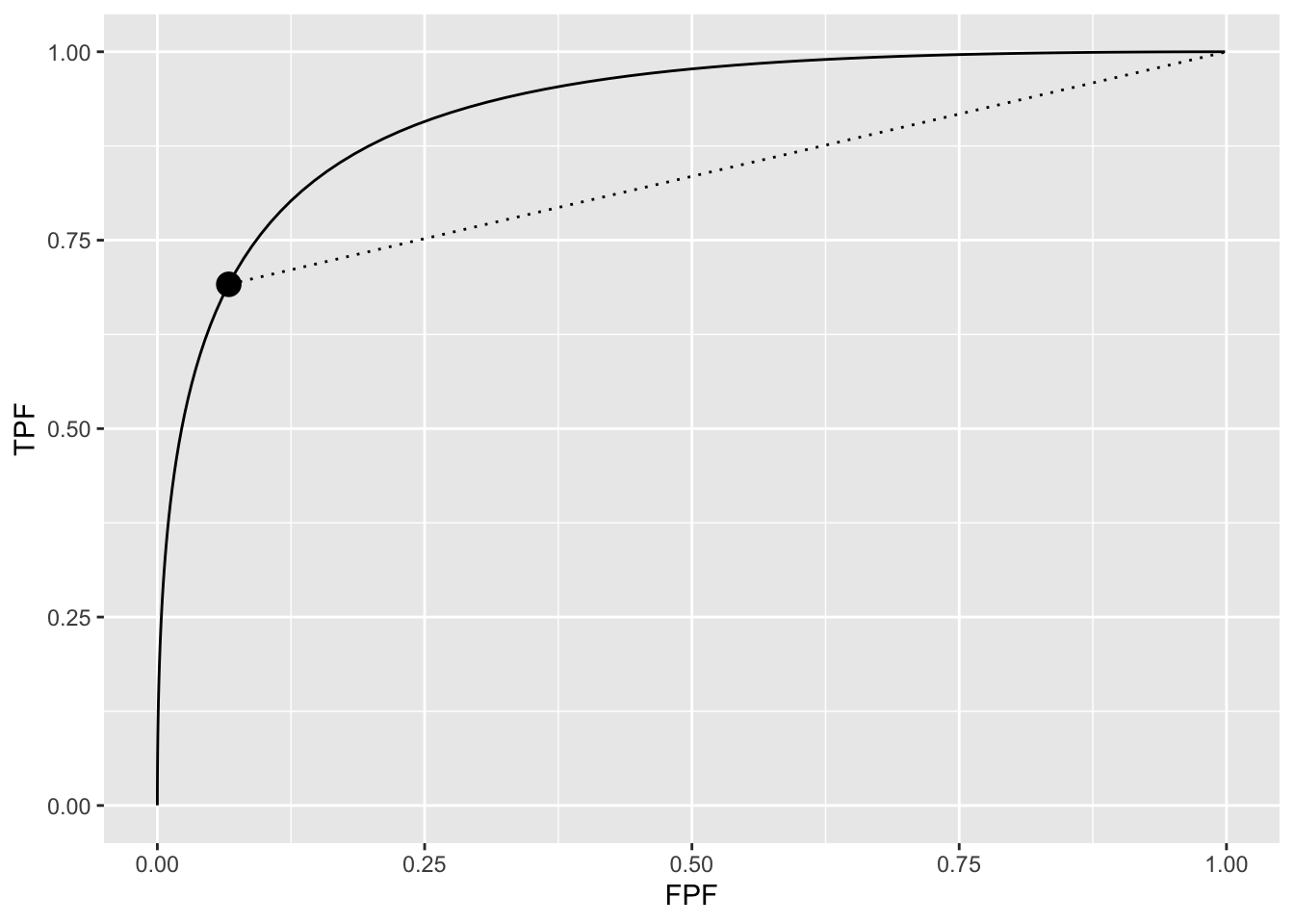

11.4.5 Why not maximize ROC-AUC?

Since the ROC curves show similarities to the wAFROC curves, why not maximize \(\text{ROC}_\text{AUC}\) instead of \(\text{wAFROC}_\text{AUC}\)? It can be shown that as long as one restricts to proper ROC models this always results in \(\zeta_1 = -\infty\), i.e., all latent marks are to be shown to the radiologist, an obviously incorrect strategy. This result can be understood from the following geometrical argument.

For a proper ROC curve the slope decreases monotonically as the operating point moves up the curve and at each point the slope is greater than that of the straight line connecting the point to (1,1). This geometry ensures that AUC under any curve with a finite \(\zeta_1\) is smaller than that under the full curve. Therefore maximum AUC can only be attained by choosing \(\zeta_1 = -\infty\), see Fig. 11.4.

FIGURE 11.4: In the region above the dot the proper curve is above the dotted line, meaning that performance of an observer who adopts a finite \(\zeta_1\) is less than performance of an observer who adopts \(\zeta_1 = -\infty\).
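The geometrical argument can be written as a short derivation. The notation is introduced here for illustration only: \(y(x)\) denotes the proper ROC curve, \((x_1, y_1)\) the operating point corresponding to \(\zeta_1\), and \(A(x_1)\) the area under the curve up to the operating point plus the area under the straight line from the operating point to (1,1).

\[
\begin{aligned}
A(x_1) &= \int_0^{x_1} y(x)\,dx + \frac{1}{2}\left(1 - x_1\right)\left(1 + y_1\right) \\
\frac{dA}{dx_1} &= y_1 - \frac{1}{2}\left(1 + y_1\right) + \frac{1}{2}\left(1 - x_1\right) y'(x_1) \\
&= \frac{1}{2}\left[\left(1 - x_1\right) y'(x_1) - \left(1 - y_1\right)\right]
\end{aligned}
\]

For a proper ROC curve \(y'(x_1) \ge \left(1 - y_1\right) / \left(1 - x_1\right)\), i.e., the slope at the operating point is at least the slope of the line to (1,1), so \(dA/dx_1 \ge 0\). The area therefore never decreases as the operating point moves up the curve, and it is largest when the operating point is as far up the curve as possible, which corresponds to \(\zeta_1 = -\infty\).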

11.5 Varying \(\nu\) and \(\mu\) optimizations

Details of varying \(\nu\) (with \(\mu\) and \(\lambda\) held constant) are in Appendix 11.10.1. The results, summarized in Table 11.3, are similar to those just described for varying \(\lambda\), but the directions of the effects are reversed because increasing \(\nu\) increases performance whereas increasing \(\lambda\) decreases it. For \(\text{wAFROC}_\text{AUC}\) optimization the optimal reporting threshold \(\zeta_1\) decreases with increasing \(\nu\). In contrast the Youden-index based optimal threshold is almost independent of \(\nu\). For \(\text{wAFROC}_\text{AUC}\) optimization the FROC operating point moves to higher NLF values while the Youden-index based operating point stays at a near constant NLF value (see Fig. 11.8). As before, \(\text{wAFROC}_\text{AUC}\) optimizations yield higher performances than Youden-index optimizations (particularly for larger \(\nu\)): see Fig. 11.9 for the wAFROC and Fig. 11.10 for the ROC. The difference between the two optimization methods increases with increasing \(\nu\), whereas it decreases with increasing \(\lambda\); this is the sense in which the effects are reversed.

Details of varying \(\mu\) (with \(\lambda\) and \(\nu\) held constant) are in Appendix 11.10.2. The results are summarized in Table 11.4. Increasing \(\mu\) is accompanied by increasing \(\zeta_1\) (i.e., stricter reporting threshold) and increasing \(\text{wAFROC}_\text{AUC}\) and \(\text{ROC}_\text{AUC}\). Performance measured either way is higher for \(\text{wAFROC}_\text{AUC}\) optimizations but the difference tends to shrink at the larger values of \(\mu\). LLF is relatively constant for \(\text{wAFROC}_\text{AUC}\) optimizations while it increases slowly with \(\mu\) for Youden-index optimizations. NLF decreases with increasing \(\mu\) for both optimization methods, i.e., the FROC operating point shifts leftward (see Fig. 11.11). Again, \(\text{wAFROC}_\text{AUC}\) optimization yields a lower reporting threshold and higher performance than Youden-index optimization, see Fig. 11.12 for the wAFROC and Fig. 11.13 for the ROC. The difference between the two optimization methods decreases with increasing \(\mu\).

11.6 Limiting situations

Limiting situations covering high and low performances are described in 11.10.3.

For high performance, defined as \(\text{ROC}_\text{AUC} > 0.9\), both methods place the optimal operating point near the inflection point on the upper-left corner of the wAFROC or ROC. The \(\text{wAFROC}_\text{AUC}\) based method chooses a lower threshold than the Youden-index method resulting in a higher operating point on the FROC and higher \(\text{wAFROC}_\text{AUC}\) and \(\text{ROC}_\text{AUC}\). The difference between the two methods decreases as \(\text{ROC}_\text{AUC} \rightarrow 1\).

For low performance, defined as \(0.5 < \text{ROC}_\text{AUC} < 0.6\), the Youden-index method selects a lower threshold than \(\text{wAFROC}_\text{AUC}\) optimization, resulting in a higher operating point on the FROC, greater \(\text{ROC}_\text{AUC}\) but sharply lower \(\text{wAFROC}_\text{AUC}\). The difference between the two methods increases as \(\text{ROC}_\text{AUC} \rightarrow 0.5\). In this limit the \(\text{wAFROC}_\text{AUC}\) method severely limits the numbers of marks shown to the radiologist as compared to the Youden-index based method.

11.7 Trends

No matter how the RSM parameters are varied the trend is that \(\text{wAFROC}_\text{AUC}\) optimizations result in lower optimal thresholds \(\zeta_1\) (i.e., laxer reporting criteria that result in more displayed marks) than Youden-index optimizations. Accordingly the \(\text{wAFROC}_\text{AUC}\) optimizations yield FROC operating points at higher NLF values (i.e., red dots to the right of the black dots in FROC plots), greater \(\text{wAFROC}_\text{AUC}\)s (red curves above the green curves in wAFROC plots) and greater \(\text{ROC}_\text{AUC}\)s (red curves above the green curves in ROC plots). These trends hold provided CAD performance is not too low.

If CAD performance is very low there are instructive exceptions where \(\text{wAFROC}_\text{AUC}\) optimizations yield greater \(\zeta_1\) (i.e., stricter reporting criteria that result in fewer displayed marks) than Youden-index optimizations. This finding is true no matter how the RSM parameters are varied.

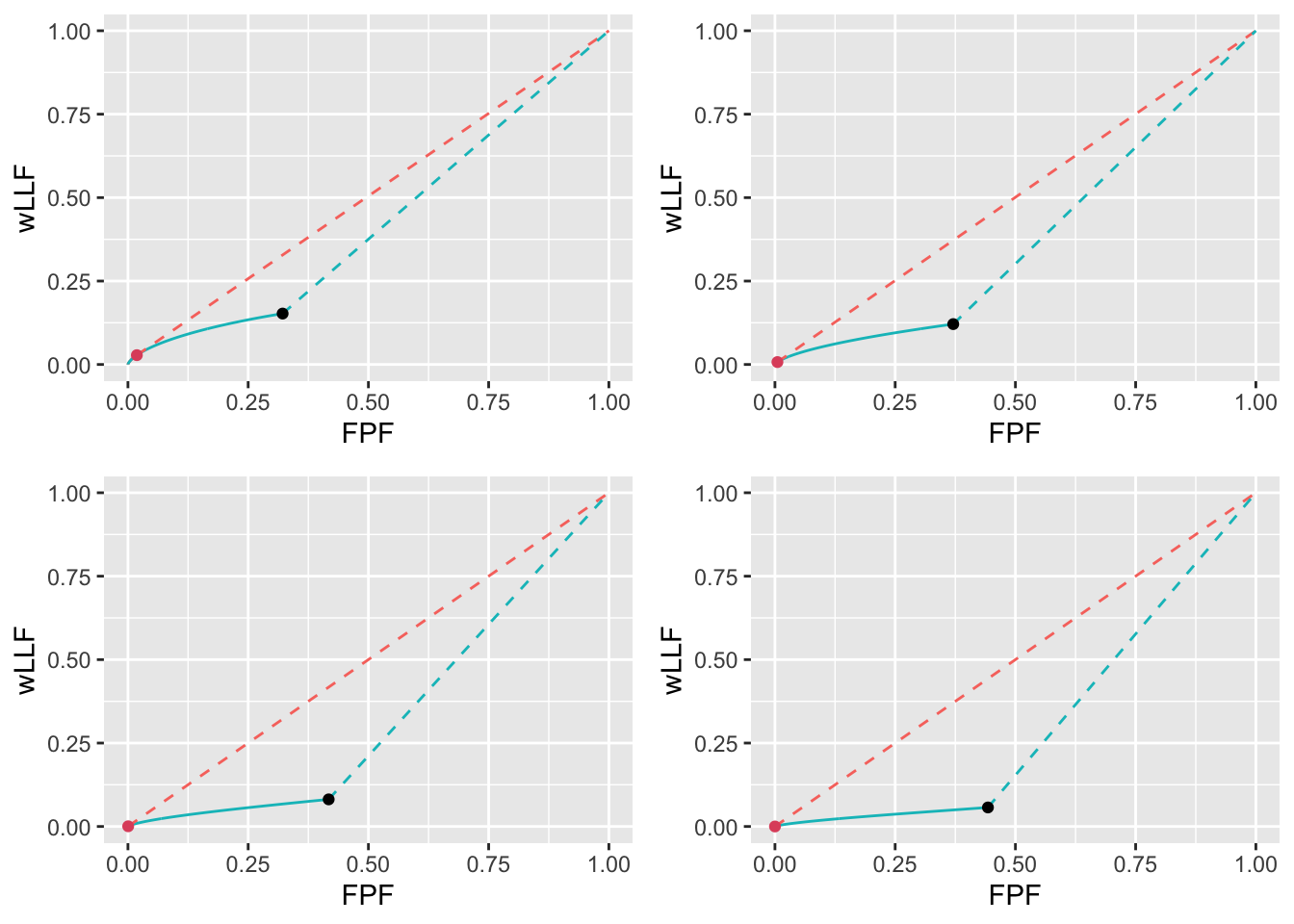

Consider for example the low performance varying \(\nu\) optimizations described in Appendix 11.10.3.6. The FROC plots, Fig. 11.29, corresponding to \(\mu = 1\), \(\lambda = 10\), \(\nu = 0.1, 0.2, 0.3, 0.4\), show that the \(\text{wAFROC}_\text{AUC}\) optimal operating points are very close to the origin \(\text{NLF} = 0\), i.e., very few marks are displayed. In contrast the Youden-index optimal operating points are shifted towards larger \(\text{NLF}\) values allowing more marks to be displayed. The wAFROC plots, Fig. 11.30, show a large difference in AUCs between the two methods, especially for the smaller values of \(\nu\): for example, for \(\nu=0.1\), the \(\text{wAFROC}_\text{AUC}\) corresponding to \(\text{wAFROC}_\text{AUC}\) optimization is 0.5000002 while that corresponding to Youden-index optimization is 0.2923394. Clearly the \(\text{wAFROC}_\text{AUC}\) optimization yields a larger \(\text{wAFROC}_\text{AUC}\) relative to Youden-index optimization, which it must as \(\text{wAFROC}_\text{AUC}\) is the quantity being optimized.

While Youden-index optimizations yield smaller \(\text{wAFROC}_\text{AUC}\) values they do yield larger \(\text{ROC}_\text{AUC}\) values as is evident by comparing the ROC plots, Fig. 11.31. For \(\nu=0.1\) the \(\text{ROC}_\text{AUC}\) corresponding to \(\text{wAFROC}_\text{AUC}\) optimization is 0.5000024 while that corresponding to Youden-index optimization is 0.5143474. Clearly \(\text{wAFROC}_\text{AUC}\) optimization yields a very close to chance-level \(\text{ROC}_\text{AUC}\) while Youden-index optimization yields a slightly larger \(\text{ROC}_\text{AUC}\).

Keep in mind that \(\text{ROC}_\text{AUC}\) measures classification accuracy performance between non-diseased and diseased cases: it does not care about lesion localization accuracy. In contrast \(\text{wAFROC}_\text{AUC}\) measures both lesion localization accuracy and lesion classification accuracy. By choosing an optimal operating point close to the origin the low performance CAD does not get credit for missing almost all the lesions on diseased cases but it does get credit for not marking non-diseased cases.
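The low-performance example can be checked with a short base R sketch. It is not the code used for the appendix results, and it rests on assumptions: the RSM operating-point formulas used here, half of the diseased cases having one lesion and half having two, lesion weights that sum to one per case, and each AUC computed as the area under the continuous curve plus the area under the straight line to (1,1). The two thresholds are the \(\mu = 1\) values listed in the low-performance summary table of Appendix 11.10.3.2; the function names are hypothetical.

```r
mu <- 1; lambda <- 10; nu <- 0.1
lesDistr <- c(0.5, 0.5)  # assumed: half the diseased cases have 1 lesion, half 2

fpf  <- function(z) 1 - exp(-lambda * pnorm(-z))
wllf <- function(z) nu * pnorm(mu - z)
tpf  <- function(z) {
  # probability that no lesion is marked, averaged over the lesion distribution
  probNoLL <- sapply(z, function(zz)
    sum(lesDistr * (1 - nu * pnorm(mu - zz))^seq_along(lesDistr)))
  1 - exp(-lambda * pnorm(-z)) * probNoLL
}
# d(FPF)/d(zeta), sign chosen so that integration runs over zeta > zeta1
dFpf <- function(z) lambda * dnorm(z) * exp(-lambda * pnorm(-z))

# Area under a curve with the given ordinate, for reporting threshold zeta1
auc <- function(ordinate, zeta1) {
  curveArea <- integrate(function(z) ordinate(z) * dFpf(z),
                         lower = zeta1, upper = Inf)$value
  curveArea + (1 - fpf(zeta1)) * (1 + ordinate(zeta1)) / 2
}

zetas <- c(wAFROC = 5.000, Youden = 1.563)  # thresholds from the summary table
res <- sapply(zetas, function(z1)
  c(wAFROC_AUC = auc(wllf, z1), ROC_AUC = auc(tpf, z1)))
print(round(res, 3))
```

The columns of `res` correspond to the two optimization methods and the rows to the two figures of merit, so the trade-off described above (higher \(\text{ROC}_\text{AUC}\) but much lower \(\text{wAFROC}_\text{AUC}\) at the Youden-index threshold) can be read off directly.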

11.8 Applying the method

Assume that one has designed an algorithmic observer that has been optimized with respect to all parameters except the reporting threshold. At this point the algorithm reports every suspicious region no matter how low the malignancy index. The mark-rating pairs are entered into an RJafroc format Excel input file, as described here. The next step is to read the data file (DfReadDataFile()), convert it to an ROC dataset (DfFroc2Roc()), and then perform a radiological search model (RSM) fit to the dataset using function FitRsmRoc(). This yields the necessary \(\lambda, \mu, \nu\) parameters. These values are used to perform the computations described in this chapter to determine the optimal reporting threshold. The RSM parameter values and the reporting threshold determine the optimal reporting point on the FROC curve. The designer sets the algorithm to only report marks with confidence levels exceeding this threshold. These steps are illustrated in the following example.

11.8.1 A CAD application

Not having access to any CAD FROC datasets, the standalone CAD LROC dataset described in (Hupse et al. 2013) was used to create a simulated FROC (i.e., \(\text{ROC}_\text{AUC}\) equivalent) dataset, which is embedded in RJafroc as object datasetCadSimuFroc. In the following code the first reader for this dataset, corresponding to CAD, is extracted using DfExtractDataset (the other reader data, corresponding to radiologists, are ignored). The function DfFroc2Roc converts dsCad to an ROC dataset. The function DfBinDataset bins the data to about 7 bins. Each diseased case contains one lesion: lesDistr = c(1). FitRsmRoc fits the binned ROC dataset to the radiological search model (RSM). Object fit contains the RSM parameters required to perform the optimizations described in previous sections.

ds <- RJafroc::datasetCadSimuFroc

dsCad <- RJafroc::DfExtractDataset(ds, rdrs = 1)

dsCadRoc <- RJafroc::DfFroc2Roc(dsCad)

dsCadRocBinned <- RJafroc::DfBinDataset(dsCadRoc, opChType = "ROC")

lesDistrCad <- c(1) # LROC dataset has one lesion per diseased case

relWeightsCad <- c(1)

fit <- RJafroc::FitRsmRoc(dsCadRocBinned, lesDistrCad)

cat(sprintf("fitted values: mu = %5.3f,", fit$mu),

sprintf("lambda = %5.3f,", fit$lambda),

sprintf("nu = %5.3f.", fit$nu))

#> fitted values: mu = 2.756, lambda = 6.778, nu = 0.803.

11.8.1.1 Summary table

Table 11.2 summarizes the results. As compared to Youden-index optimization the \(\text{wAFROC}_\text{AUC}\) based optimization results in a lower reporting threshold \(\zeta_1\), larger figures of merit – see Fig. 11.6 for \(\text{wAFROC}_\text{AUC}\) and Fig. 11.7 for \(\text{ROC}_\text{AUC}\) – and a higher operating point on the FROC, see Fig. 11.5. These results match the trends shown in Table 11.1.

| FOM | \(\lambda\) | \(\zeta_1\) | \(\text{wAFROC}\) | \(\text{ROC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| wAFROC | 6.778 | 1.739 | 0.774 | 0.815 | (0.278, 0.679) |
| Youden | 6.778 | 1.982 | 0.770 | 0.798 | (0.161, 0.627) |
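The thresholds and operating points in this table can be approximately reproduced from the fitted parameters with a base R sketch. It is not the code used for the table. It assumes one lesion per diseased case, the RSM operating-point formulas used in the sketch, and a search interval chosen for illustration; the function names are hypothetical.

```r
mu <- 2.756; lambda <- 6.778; nu <- 0.803  # fitted values printed above

nlf <- function(z) lambda * pnorm(-z)
llf <- function(z) nu * pnorm(mu - z)
fpf <- function(z) 1 - exp(-nlf(z))
# one lesion per diseased case: TPF = 1 - exp(-NLF) * (1 - LLF)
tpf <- function(z) 1 - exp(-nlf(z)) * (1 - llf(z))

# wAFROC AUC: area under the continuous curve plus straight line to (1, 1);
# with a single lesion of unit weight the wAFROC ordinate equals LLF
wAfrocAuc <- function(zeta1) {
  integrand <- function(z) llf(z) * lambda * dnorm(z) * exp(-nlf(z))
  integrate(integrand, lower = zeta1, upper = Inf, rel.tol = 1e-8)$value +
    (1 - fpf(zeta1)) * (1 + llf(zeta1)) / 2
}
youden <- function(z) tpf(z) - fpf(z)

zW <- optimize(wAfrocAuc, interval = c(-5, 5), maximum = TRUE)$maximum
zY <- optimize(youden, interval = c(-5, 5), maximum = TRUE)$maximum

cat(sprintf("wAFROC: zeta1 = %.3f, (NLF, LLF) = (%.3f, %.3f)\n",
            zW, nlf(zW), llf(zW)))
cat(sprintf("Youden: zeta1 = %.3f, (NLF, LLF) = (%.3f, %.3f)\n",
            zY, nlf(zY), llf(zY)))
# expected false marks on a four-view examination at the wAFROC optimum
cat(sprintf("false marks per four-view case = %.2f\n", 4 * nlf(zW)))
```

Small differences from the tabulated values are to be expected because the fitted parameters are used here after rounding to three decimal places.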

11.8.1.2 FROC

Fig. 11.5 shows FROC curves with superimposed optimal operating points. With NLF = 0.278, a four-view mammogram would show about 1.1 false CAD marks per patient (4 × 0.278) and lesion-level sensitivity would be about 68 percent.

FIGURE 11.5: FROC plots with superposed optimal operating points. The red dot is using \(\text{wAFROC}_\text{AUC}\) optimization and black dot is using Youden-index optimization.

11.8.1.3 wAFROC

Fig. 11.6 shows wAFROC curves using the two methods. The red curve is using \(\text{wAFROC}_\text{AUC}\) optimization and the green curve is using Youden-index optimization. The difference in AUCs is small, following the trend described in Section 11.4 for the larger values of \(\lambda\).

FIGURE 11.6: The color coding is as in previous figures. The two \(\text{wAFROC}_\text{AUC}\)s are 0.774 (wAFROC optimization) and 0.770 (Youden-index optimization).

11.8.1.4 ROC

Fig. 11.7 shows ROC curves using the two methods. The red curve is using \(\text{wAFROC}_\text{AUC}\) optimization and the green curve is using Youden-index optimization.

FIGURE 11.7: The color coding is as in previous figures. The two \(\text{ROC}_\text{AUC}\)s are 0.815 (wAFROC optimization) and 0.798 (Youden-index optimization).

11.9 TBA Discussion

muArr <- c(2)

lambdaArr <- c(1, 2, 5, 10)

nuArr <- c(0.9)

lesDistr <- c(0.5, 0.5)

relWeights <- c(0.5, 0.5)

source("R/optim-op-point/doOneTable.R", local = knitr::knit_global())

In Table 11.1 the \(\lambda\) parameter controls the average number of perceived NLs per case. For \(\lambda = 1\) there is, on average, one perceived NL for every case and the optimal \(\text{wAFROC}_\text{AUC}\) based threshold is \(\zeta_1\) = -0.007. For \(\lambda = 10\) there are, on average, ten perceived NLs for every case and the optimal \(\text{wAFROC}_\text{AUC}\) based threshold is \(\zeta_1\) = 1.856. The reason for the increase in \(\zeta_1\) should be obvious: with increasing numbers of latent NLs (perceived false marks) per case it is necessary to adopt a stricter criterion because otherwise the reader would be shown 10 times the number of false marks per case.

The \(\text{ROC}_\text{AUC}\)s are reported as a check of the less familiar \(\text{wAFROC}_\text{AUC}\) figure of merit. With some notable exceptions the behavior of the two optimization methods is independent of whether it is measured via the \(\text{wAFROC}_\text{AUC}\) or the \(\text{ROC}_\text{AUC}\): either way the \(\text{wAFROC}_\text{AUC}\) optimizations yield higher AUC values and higher operating points on the FROC than the corresponding Youden-index optimizations. The exceptions occur when CAD performance is very low.

When \(\mu\) is varied (Table 11.4) the difference in \(\text{wAFROC}_\text{AUC}\), \(\text{ROC}_\text{AUC}\) and the operating points between the two methods decreases as performance increases, which is the opposite of that found when \(\lambda\) or \(\nu\) were varied. With constant \(\lambda\) and \(\nu\) the numbers of latent NLs and LLs are unchanging; all that happens is the values of the z-samples from LLs increase as \(\mu\) increases, which allows the optimal threshold to increase (this can be understood as a “ROC-paradigm” effect: as the normal distributions are more widely separated, the optimal threshold will increase, approaching, in the limit, half the separation, since in that limit TPF = 1 and FPF = 0).

The decrease in performance with increasing \(\lambda\) is due to two reinforcing effects: performance goes down with increasing numbers of NLs per case and performance goes down with increasing optimal reporting threshold (see 7.7 for explanation of the \(\zeta_1\) dependence of AUC performance). It is difficult to unambiguously infer performance based on the FROC operating points: as \(\lambda\) increases LLF decreases but for \(\text{wAFROC}_\text{AUC}\) optimizations NLF peaks while for Youden-index optimizations it increases.

The FROC plots also illustrate the decrease in \(\text{LLF}\) with increasing \(\lambda\): the black dots move to smaller ordinates, as do the red dots, which would seem to imply decreasing performance. However, the accompanying change in \(\text{NLF}\) rules out an unambiguous determination of the direction of the change in overall performance based on the FROC curve.

For very low performance, defined as \(0.5 < \text{ROC}_\text{AUC} < 0.6\), the Youden-index method chooses a lower threshold compared to \(\text{wAFROC}_\text{AUC}\) optimization, resulting in a higher operating point on the FROC, greater \(\text{ROC}_\text{AUC}\) but sharply lower \(\text{wAFROC}_\text{AUC}\). The difference between the two methods increases as \(\text{ROC}_\text{AUC} \rightarrow 0.5\). In this limit the \(\text{wAFROC}_\text{AUC}\) method severely limits the numbers of marks shown to the radiologist as compared to the Youden-index based method.

11.10 Appendices

11.10.1 Varying \(\nu\) optimizations

For \(\mu = 2\) and \(\lambda = 1\) optimizations were performed for \(\nu = 0.6, 0.7, 0.8, 0.9\).

muArr <- c(2)

lambdaArr <- c(1)

nuArr <- c(0.6, 0.7, 0.8, 0.9)

lesDistr <- c(0.5, 0.5)

relWeights <- c(0.5, 0.5)

11.10.1.1 Summary table

| FOM | \(\nu\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 0.6 | 0.888 | 0.701 | 0.804 | (0.187, 0.520) |
|  | 0.7 | 0.674 | 0.751 | 0.851 | (0.250, 0.635) |
|  | 0.8 | 0.407 | 0.805 | 0.893 | (0.342, 0.756) |
|  | 0.9 | -0.007 | 0.864 | 0.929 | (0.503, 0.880) |
| Youden-index | 0.6 | 1.022 | 0.700 | 0.797 | (0.153, 0.502) |
|  | 0.7 | 1.044 | 0.745 | 0.835 | (0.148, 0.581) |
|  | 0.8 | 1.069 | 0.788 | 0.868 | (0.143, 0.659) |
|  | 0.9 | 1.095 | 0.831 | 0.899 | (0.137, 0.735) |

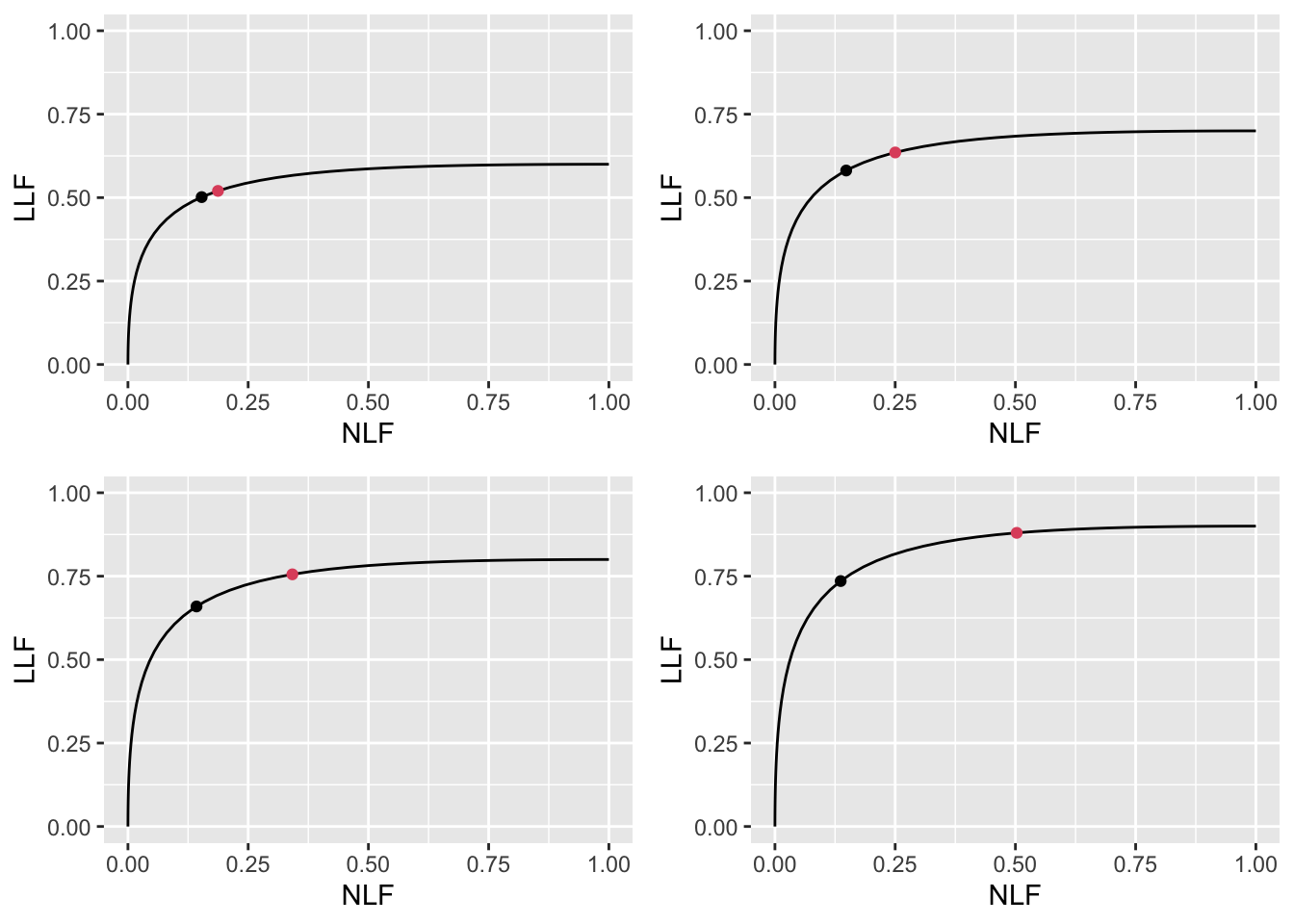

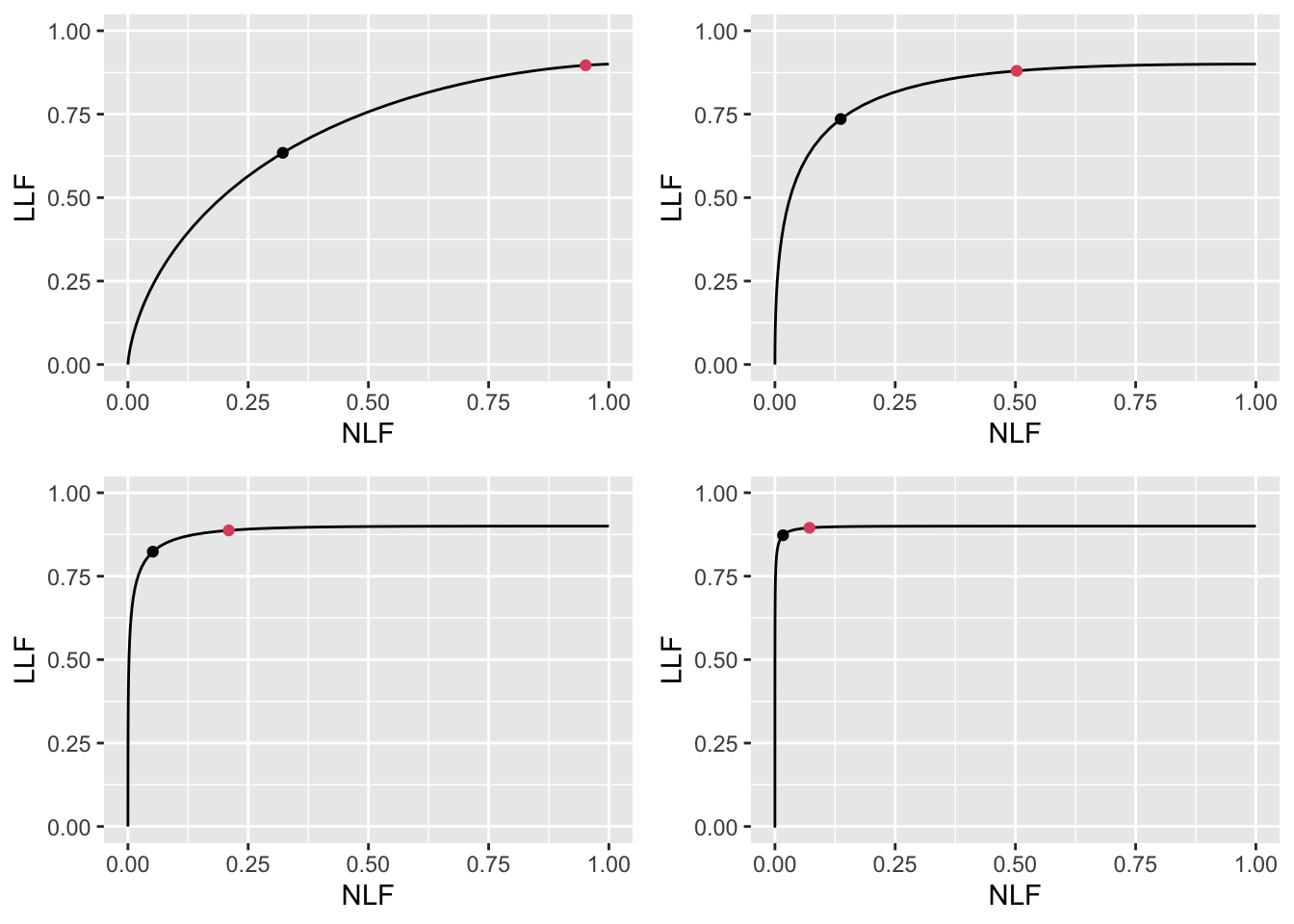

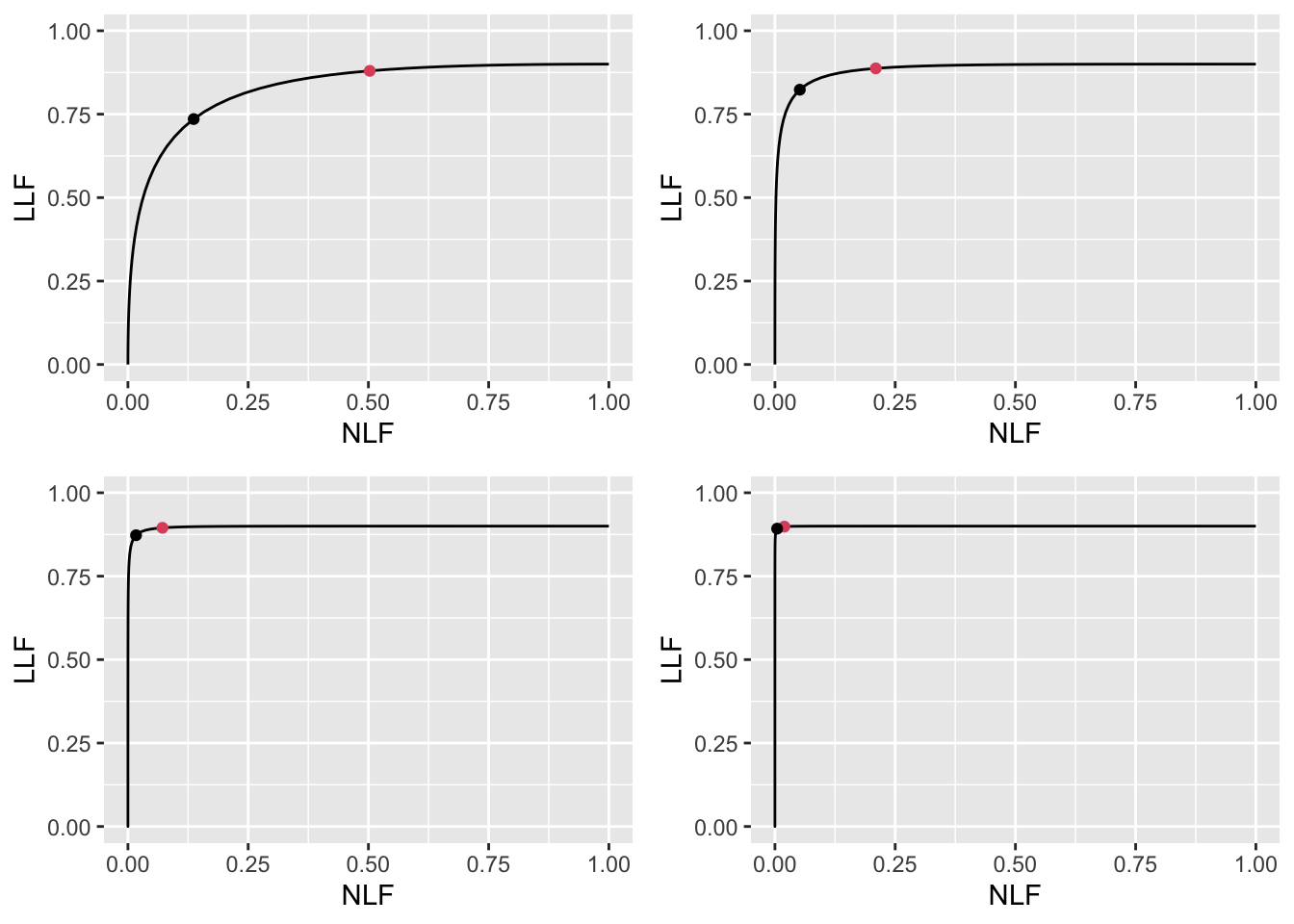

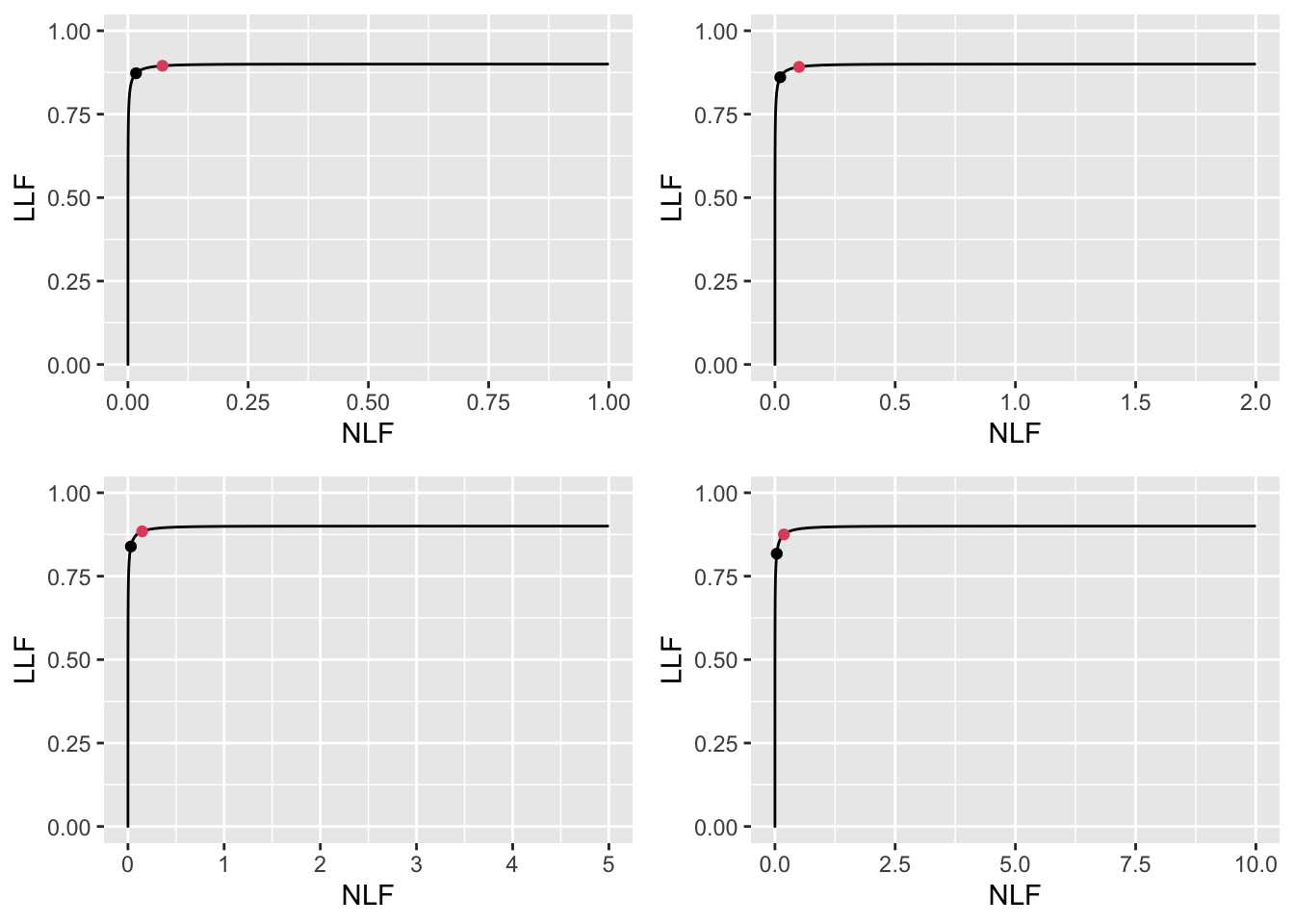

11.10.1.2 FROC

FIGURE 11.8: Varying \(\nu\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\nu\) are: top-left \(\nu = 0.6\), top-right \(\nu = 0.7\), bottom-left \(\nu = 0.8\) and bottom-right \(\nu = 0.9\). Each red dot is above the corresponding black dot and their separation increases as \(\nu\) increases, i.e., as CAD performance increases.

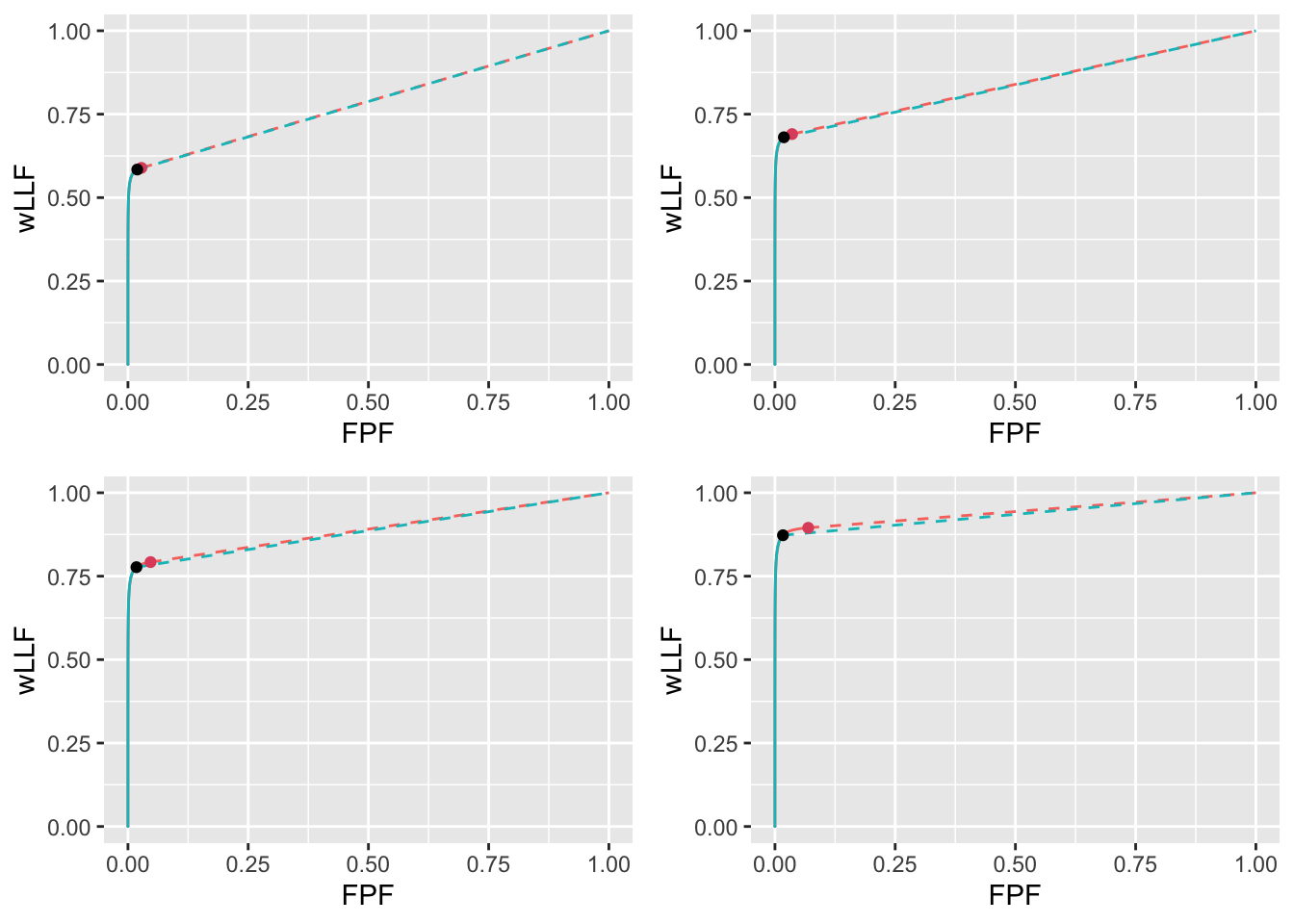

11.10.1.3 wAFROC

FIGURE 11.9: Varying \(\nu\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\nu\) are: top-left \(\nu = 0.6\), top-right \(\nu = 0.7\), bottom-left \(\nu = 0.8\) and bottom-right \(\nu = 0.9\).

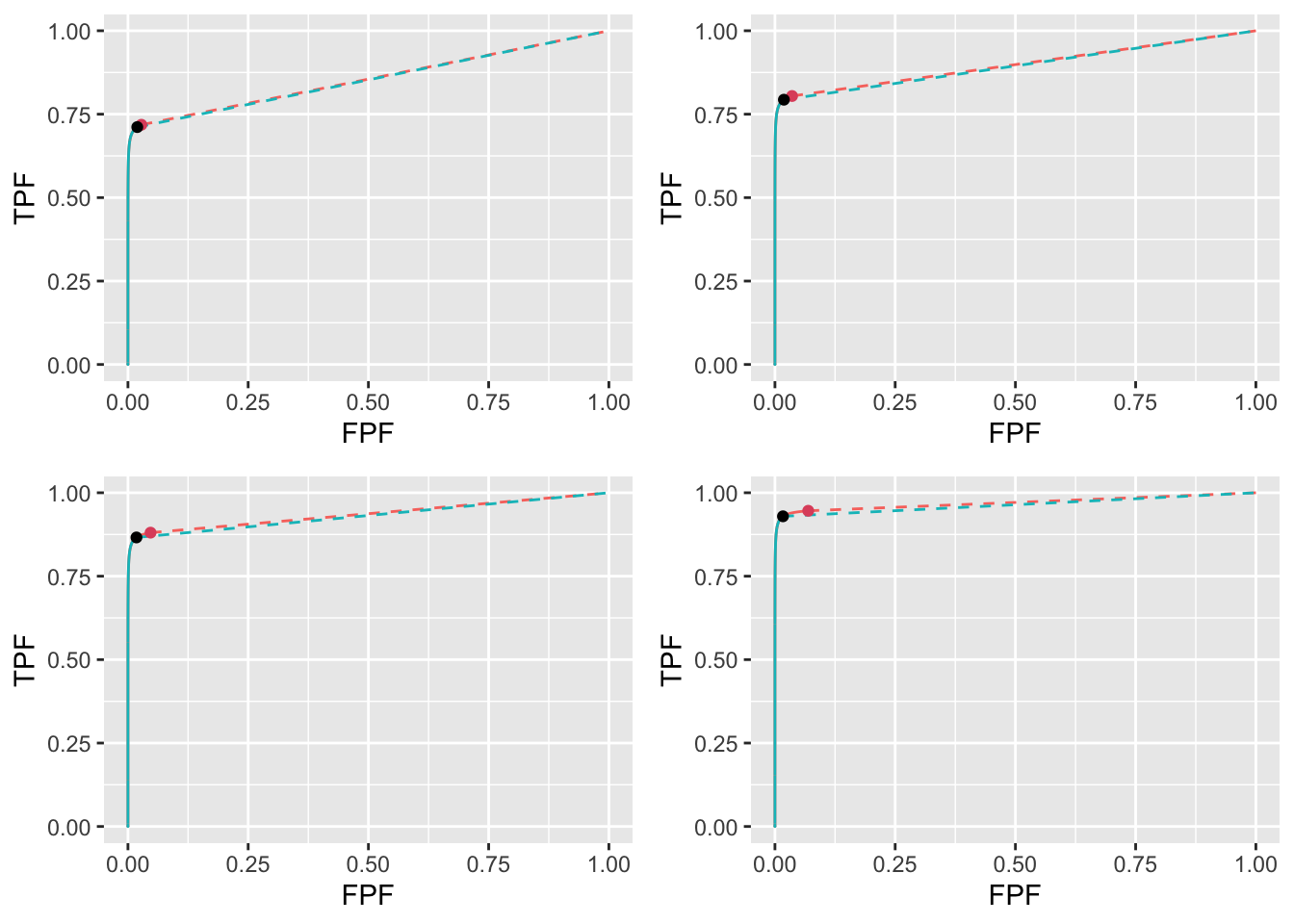

11.10.1.4 ROC

FIGURE 11.10: Varying \(\nu\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\nu\) are: top-left \(\nu = 0.6\), top-right \(\nu = 0.7\), bottom-left \(\nu = 0.8\) and bottom-right \(\nu = 0.9\).

11.10.2 Varying \(\mu\) optimizations

For \(\lambda = 1\) and \(\nu = 0.9\) optimizations were performed for \(\mu = 1, 2, 3, 4\).

muArr <- c(1, 2, 3, 4)

lambdaArr <- 1

nuArr <- 0.9

11.10.2.1 Summary table

| FOM | \(\mu\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 1 | -1.663 | 0.745 | 0.850 | (0.952, 0.897) |
|  | 2 | -0.007 | 0.864 | 0.929 | (0.503, 0.880) |
|  | 3 | 0.808 | 0.922 | 0.961 | (0.210, 0.887) |
|  | 4 | 1.463 | 0.942 | 0.970 | (0.072, 0.895) |
| Youden-index | 1 | 0.462 | 0.704 | 0.815 | (0.322, 0.634) |
|  | 2 | 1.095 | 0.831 | 0.899 | (0.137, 0.735) |
|  | 3 | 1.629 | 0.903 | 0.945 | (0.052, 0.823) |
|  | 4 | 2.124 | 0.935 | 0.964 | (0.017, 0.873) |

11.10.2.2 FROC

FIGURE 11.11: Varying \(\mu\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\mu\) are: top-left \(\mu = 1\), top-right \(\mu = 2\), bottom-left \(\mu = 3\) and bottom-right \(\mu = 4\).

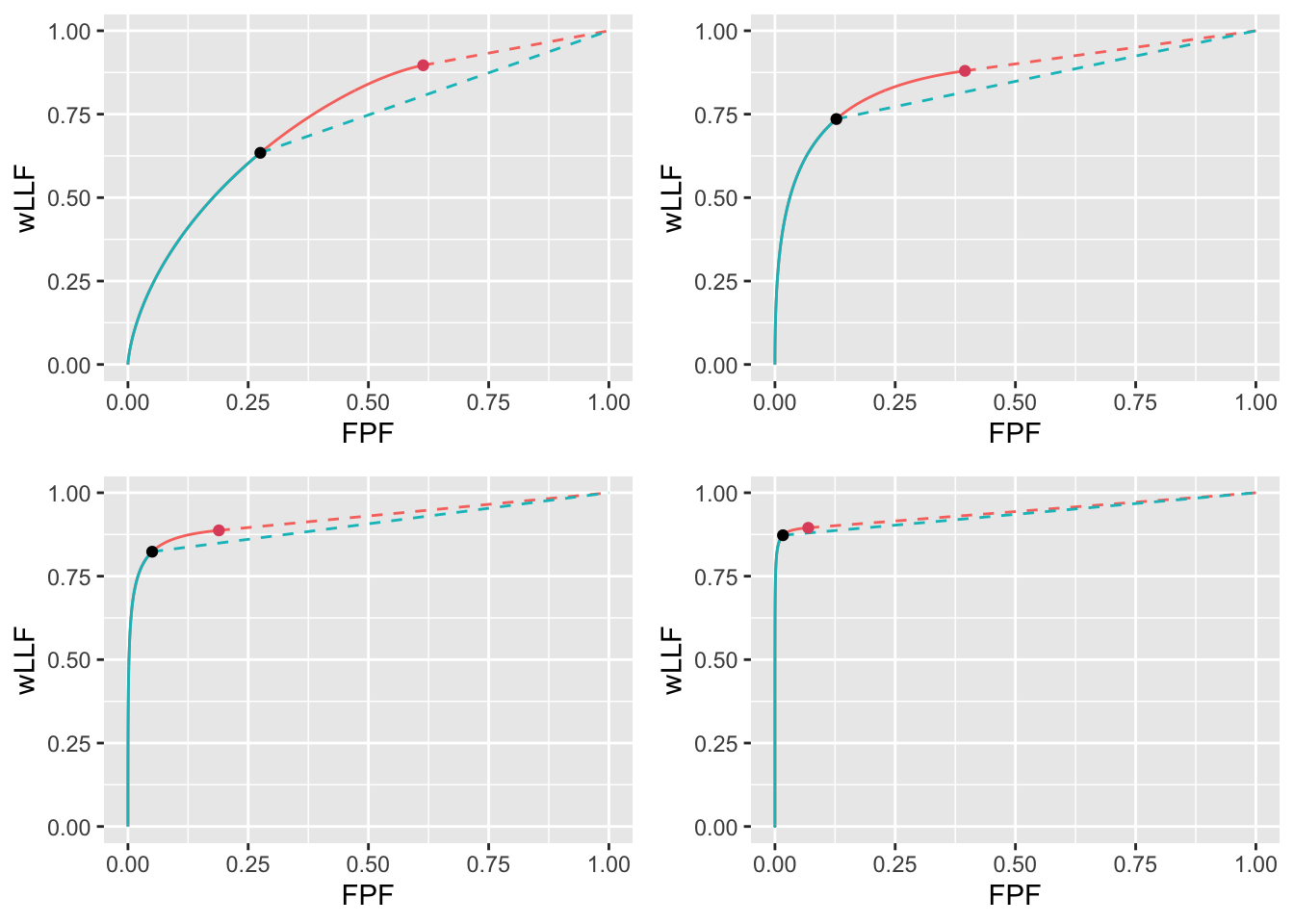

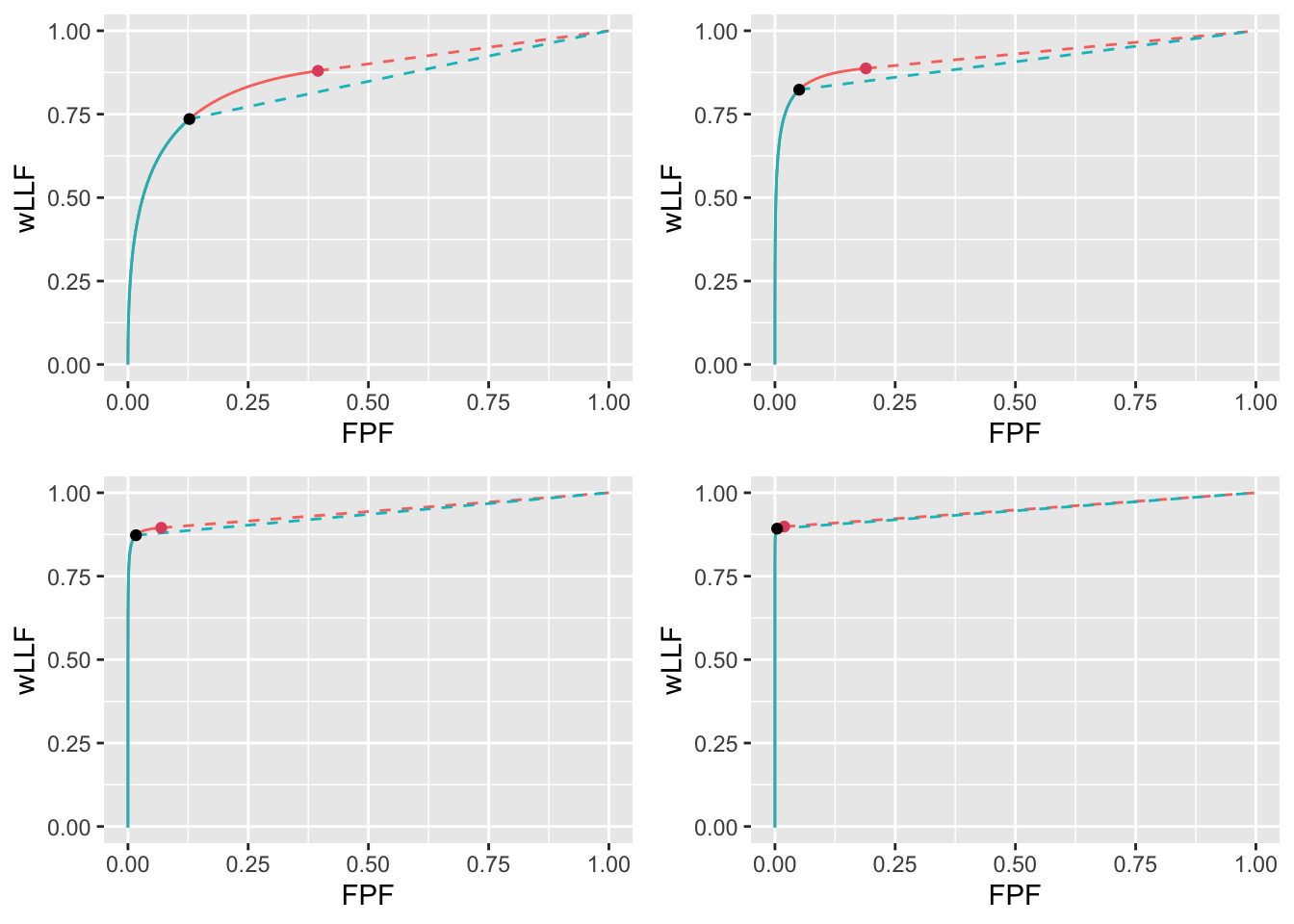

11.10.2.3 wAFROC

FIGURE 11.12: Varying \(\mu\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\mu\) are: top-left \(\mu = 1\), top-right \(\mu = 2\), bottom-left \(\mu = 3\) and bottom-right \(\mu = 4\).

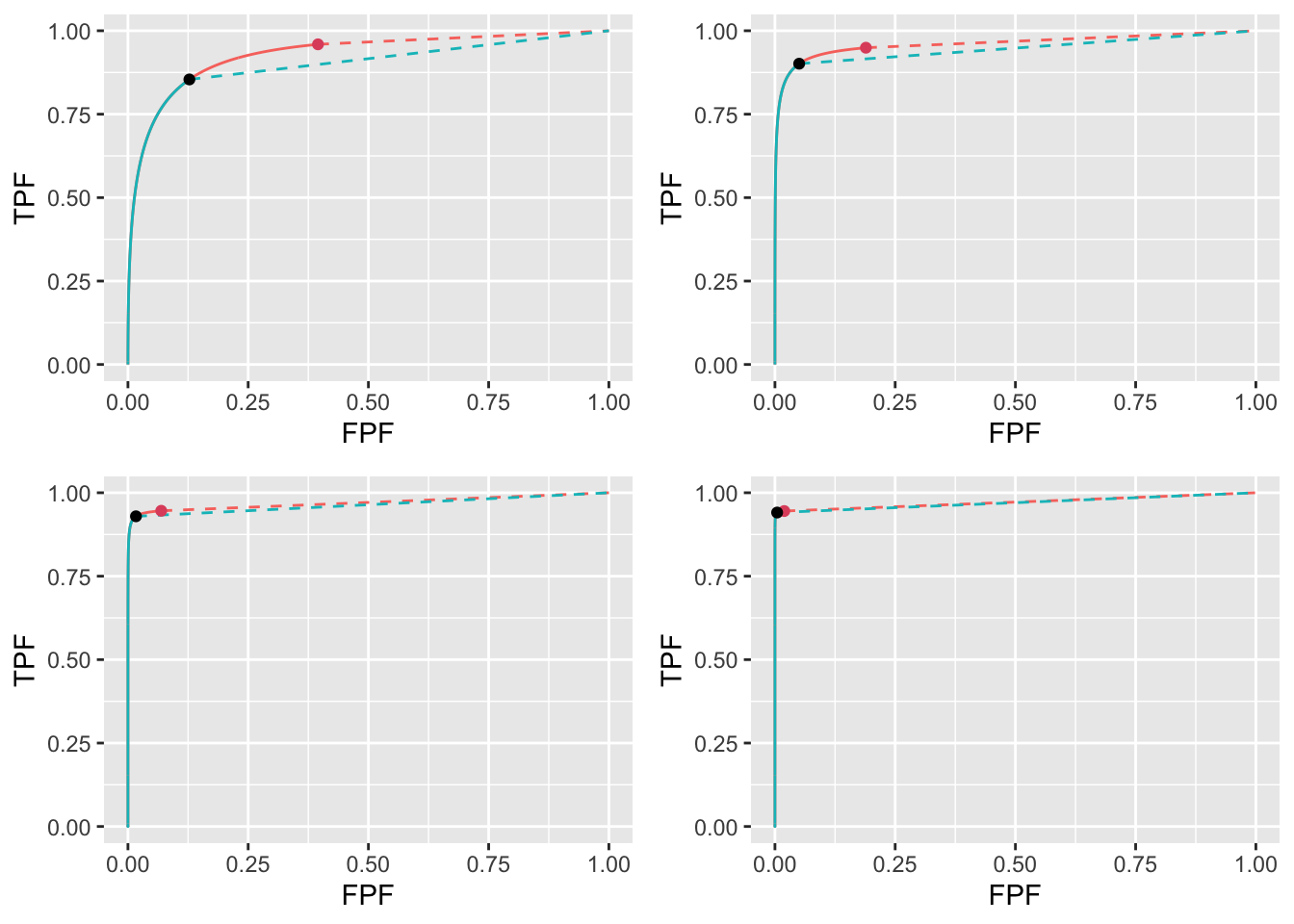

11.10.2.4 ROC

FIGURE 11.13: Varying \(\mu\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\mu\) are: top-left \(\mu = 1\), top-right \(\mu = 2\), bottom-left \(\mu = 3\) and bottom-right \(\mu = 4\).

11.10.3 Limiting cases

11.10.3.1 High performance varying \(\mu\)

muArr <- c(2, 3, 4, 5)

nuArr <- c(0.9)

lambdaArr <- c(1)

11.10.3.1.1 Summary table

| FOM | \(\mu\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 2 | -0.007 | 0.864 | 0.929 | (0.503, 0.880) |
|  | 3 | 0.808 | 0.922 | 0.961 | (0.210, 0.887) |
|  | 4 | 1.463 | 0.942 | 0.970 | (0.072, 0.895) |
|  | 5 | 2.063 | 0.948 | 0.972 | (0.020, 0.899) |
| Youden-index | 2 | 1.095 | 0.831 | 0.899 | (0.137, 0.735) |
|  | 3 | 1.629 | 0.903 | 0.945 | (0.052, 0.823) |
|  | 4 | 2.124 | 0.935 | 0.964 | (0.017, 0.873) |
|  | 5 | 2.608 | 0.946 | 0.970 | (0.005, 0.892) |

11.10.3.1.2 FROC

FIGURE 11.14: High performance varying \(\mu\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\mu\) are: top-left \(\mu = 2\), top-right \(\mu = 3\), bottom-left \(\mu = 4\) and bottom-right \(\mu = 5\).

11.10.3.1.3 wAFROC

FIGURE 11.15: High performance varying \(\mu\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\mu\) are: top-left \(\mu = 2\), top-right \(\mu = 3\), bottom-left \(\mu = 4\) and bottom-right \(\mu = 5\).

11.10.3.1.4 ROC

FIGURE 11.16: High performance varying \(\mu\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\mu\) are: top-left \(\mu = 2\), top-right \(\mu = 3\), bottom-left \(\mu = 4\) and bottom-right \(\mu = 5\).

11.10.3.2 Low performance varying \(\mu\)

muArr <- c(1, 2, 3, 4)

nuArr <- c(0.1)

lambdaArr <- c(10)

11.10.3.2.1 Summary table

| FOM | \(\mu\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 1 | 5.000 | 0.500 | 0.500 | (0.000, 0.000) |
| \(\text{wAFROC}_\text{AUC}\) | 2 | 3.298 | 0.502 | 0.507 | (0.005, 0.010) |
| \(\text{wAFROC}_\text{AUC}\) | 3 | 3.018 | 0.518 | 0.536 | (0.013, 0.049) |
| \(\text{wAFROC}_\text{AUC}\) | 4 | 3.130 | 0.536 | 0.559 | (0.009, 0.081) |
| Youden-index | 1 | 1.563 | 0.292 | 0.514 | (0.590, 0.029) |
| Youden-index | 2 | 1.865 | 0.397 | 0.535 | (0.311, 0.055) |
| Youden-index | 3 | 2.198 | 0.478 | 0.555 | (0.140, 0.079) |
| Youden-index | 4 | 2.564 | 0.523 | 0.567 | (0.052, 0.092) |
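The Youden-index rows can be approximated by maximizing \(\text{TPF} - \text{FPF}\) over the threshold. The R sketch below is only an illustration under stated assumptions: the RSM-predicted ROC operating point is used, NLs are Poisson distributed with mean \(\lambda \Phi(-\zeta)\) on every case, and the lesion distribution, which is not stated in this section, is assumed to be half the diseased cases with one lesion and half with two (`lesDistr = c(0.5, 0.5)`). The search interval \((-5, 5)\) and all function names are likewise hypothetical choices.

```r
# ROC operating point implied by the RSM at threshold zeta.
# lesDistr[k] is the ASSUMED fraction of diseased cases with k lesions.
roc_op_point <- function(zeta, mu, lambda, nu, lesDistr) {
  no_nl <- exp(-lambda * pnorm(-zeta))  # P(no NL rated above zeta)
  p_ll  <- nu * pnorm(mu - zeta)        # P(a given lesion is marked above zeta)
  no_ll <- sum(lesDistr * (1 - p_ll)^seq_along(lesDistr))  # P(no LL on a diseased case)
  c(FPF = 1 - no_nl, TPF = 1 - no_nl * no_ll)
}

# Youden-index J = TPF - FPF at threshold zeta
youden <- function(zeta, mu, lambda, nu, lesDistr) {
  op <- roc_op_point(zeta, mu, lambda, nu, lesDistr)
  unname(op["TPF"] - op["FPF"])
}

# Threshold maximizing J; the interval (-5, 5) is an assumed search range
optimal_zeta_youden <- function(mu, lambda, nu, lesDistr) {
  optimize(youden, interval = c(-5, 5), maximum = TRUE,
           mu = mu, lambda = lambda, nu = nu, lesDistr = lesDistr)$maximum
}

# Low performance varying mu: lambda = 10, nu = 0.1
muArr <- c(1, 2, 3, 4)
zeta1_youden <- sapply(muArr, optimal_zeta_youden,
                       lambda = 10, nu = 0.1, lesDistr = c(0.5, 0.5))
print(round(zeta1_youden, 3))
```

Under these assumptions the thresholds should land close to the Youden-index \(\zeta_1\) column above; a different lesion distribution would shift them.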

11.10.3.2.2 FROC

FIGURE 11.17: Low performance varying \(\mu\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\mu\) are: top-left \(\mu = 1\), top-right \(\mu = 2\), bottom-left \(\mu = 3\) and bottom-right \(\mu = 4\).

11.10.3.2.3 wAFROC

FIGURE 11.18: Low performance varying \(\mu\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\mu\) are: top-left \(\mu = 1\), top-right \(\mu = 2\), bottom-left \(\mu = 3\) and bottom-right \(\mu = 4\).

11.10.3.2.4 ROC

FIGURE 11.19: Low performance varying \(\mu\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\mu\) are: top-left \(\mu = 1\), top-right \(\mu = 2\), bottom-left \(\mu = 3\) and bottom-right \(\mu = 4\).

11.10.3.3 High performance varying \(\lambda\)

muArr <- c(4)

nuArr <- c(0.9)

lambdaArr <- c(1, 2, 5, 10)

11.10.3.3.1 Summary table

| FOM | \(\lambda\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 1 | 1.463 | 0.942 | 0.970 | (0.072, 0.895) |
| \(\text{wAFROC}_\text{AUC}\) | 2 | 1.644 | 0.938 | 0.968 | (0.100, 0.892) |
| \(\text{wAFROC}_\text{AUC}\) | 5 | 1.889 | 0.930 | 0.965 | (0.147, 0.884) |
| \(\text{wAFROC}_\text{AUC}\) | 10 | 2.082 | 0.920 | 0.960 | (0.187, 0.875) |
| Youden-index | 1 | 2.124 | 0.935 | 0.964 | (0.017, 0.873) |
| Youden-index | 2 | 2.291 | 0.928 | 0.960 | (0.022, 0.861) |
| Youden-index | 5 | 2.508 | 0.915 | 0.952 | (0.030, 0.839) |
| Youden-index | 10 | 2.669 | 0.903 | 0.944 | (0.038, 0.818) |
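The \(\text{wAFROC}_\text{AUC}\) column can be approximated numerically. The R sketch below is an illustration under stated assumptions, not the chapter's implementation: lesion weights are taken to sum to one on every diseased case (so the weighted LLF equals \(\nu \Phi(\mu - \zeta)\)), the wAFROC abscissa is the RSM-predicted FPF on non-diseased cases, and the curve is extended by a straight line from the end-point at \(\zeta_1\) to \((1, 1)\). The function name `wafroc_auc` and the search interval \((-3, 5)\) are hypothetical.

```r
# wAFROC AUC under the RSM when only marks rated above zeta1 are reported.
# Assumptions: lesion weights sum to one per diseased case, and the curve is
# completed by a straight line from the end-point to (1, 1).
wafroc_auc <- function(zeta1, mu, lambda, nu) {
  fpf  <- function(z) 1 - exp(-lambda * pnorm(-z))  # abscissa
  wllf <- function(z) nu * pnorm(mu - z)            # ordinate
  # Area under the curved part: integral of wLLF dFPF for z from zeta1 to Inf,
  # using |dFPF/dz| = exp(-lambda * pnorm(-z)) * lambda * dnorm(z)
  integrand <- function(z) wllf(z) * exp(-lambda * pnorm(-z)) * lambda * dnorm(z)
  curved <- integrate(integrand, lower = zeta1, upper = Inf, rel.tol = 1e-8)$value
  # Trapezoid under the straight line from the end-point to (1, 1)
  straight <- (1 - fpf(zeta1)) * (1 + wllf(zeta1)) / 2
  curved + straight
}

# AUC at the two tabulated thresholds for mu = 4, lambda = 1, nu = 0.9
auc_at_wafroc_opt <- wafroc_auc(1.463, mu = 4, lambda = 1, nu = 0.9)
auc_at_youden_opt <- wafroc_auc(2.124, mu = 4, lambda = 1, nu = 0.9)

# Thresholds maximizing the AUC for each lambda (high performance varying lambda)
lambdaArr <- c(1, 2, 5, 10)
zeta1_wafroc <- sapply(lambdaArr, function(lambda) {
  optimize(wafroc_auc, interval = c(-3, 5), maximum = TRUE,
           mu = 4, lambda = lambda, nu = 0.9)$maximum
})
print(round(c(auc_at_wafroc_opt, auc_at_youden_opt), 3))
print(round(zeta1_wafroc, 3))
```

The AUC is fairly flat near its maximum, so the located thresholds are less precisely determined than the AUC values themselves.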

11.10.3.3.2 FROC

FIGURE 11.20: High performance varying \(\lambda\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\lambda\) are: top-left \(\lambda = 1\), top-right \(\lambda = 2\), bottom-left \(\lambda = 5\) and bottom-right \(\lambda = 10\).

11.10.3.3.3 wAFROC

FIGURE 11.21: High performance varying \(\lambda\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\lambda\) are: top-left \(\lambda = 1\), top-right \(\lambda = 2\), bottom-left \(\lambda = 5\) and bottom-right \(\lambda = 10\).

11.10.3.3.4 ROC

FIGURE 11.22: High performance varying \(\lambda\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\lambda\) are: top-left \(\lambda = 1\), top-right \(\lambda = 2\), bottom-left \(\lambda = 5\) and bottom-right \(\lambda = 10\).

11.10.3.4 Low performance varying \(\lambda\)

muArr <- c(1)

nuArr <- c(0.2)

lambdaArr <- c(1, 2, 5, 10)

11.10.3.4.1 Summary table

| FOM | \(\lambda\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 1 | 2.081 | 0.505 | 0.520 | (0.019, 0.028) |
| \(\text{wAFROC}_\text{AUC}\) | 2 | 2.795 | 0.501 | 0.505 | (0.005, 0.007) |
| \(\text{wAFROC}_\text{AUC}\) | 5 | 3.718 | 0.500 | 0.500 | (0.001, 0.001) |
| \(\text{wAFROC}_\text{AUC}\) | 10 | 4.412 | 0.500 | 0.500 | (0.000, 0.000) |
| Youden-index | 1 | 0.284 | 0.423 | 0.587 | (0.388, 0.153) |
| Youden-index | 2 | 0.734 | 0.380 | 0.566 | (0.463, 0.121) |
| Youden-index | 5 | 1.237 | 0.335 | 0.542 | (0.540, 0.081) |
| Youden-index | 10 | 1.568 | 0.309 | 0.528 | (0.585, 0.057) |

11.10.3.4.2 FROC

FIGURE 11.23: Low performance varying \(\lambda\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\lambda\) are: top-left \(\lambda = 1\), top-right \(\lambda = 2\), bottom-left \(\lambda = 5\) and bottom-right \(\lambda = 10\).

11.10.3.4.3 wAFROC

FIGURE 11.24: Low performance varying \(\lambda\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\lambda\) are: top-left \(\lambda = 1\), top-right \(\lambda = 2\), bottom-left \(\lambda = 5\) and bottom-right \(\lambda = 10\).

11.10.3.4.4 ROC

FIGURE 11.25: Low performance varying \(\lambda\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\lambda\) are: top-left \(\lambda = 1\), top-right \(\lambda = 2\), bottom-left \(\lambda = 5\) and bottom-right \(\lambda = 10\).

11.10.3.5 High performance varying \(\nu\)

muArr <- c(4)

lambdaArr <- c(1)

nuArr <- c(0.6, 0.7, 0.8, 0.9)

11.10.3.5.1 Summary table

| FOM | \(\nu\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 0.6 | 1.905 | 0.788 | 0.855 | (0.028, 0.589) |
| \(\text{wAFROC}_\text{AUC}\) | 0.7 | 1.796 | 0.839 | 0.898 | (0.036, 0.690) |
| \(\text{wAFROC}_\text{AUC}\) | 0.8 | 1.663 | 0.890 | 0.936 | (0.048, 0.792) |
| \(\text{wAFROC}_\text{AUC}\) | 0.9 | 1.463 | 0.942 | 0.970 | (0.072, 0.895) |
| Youden-index | 0.6 | 2.063 | 0.788 | 0.852 | (0.020, 0.584) |
| Youden-index | 0.7 | 2.080 | 0.837 | 0.894 | (0.019, 0.681) |
| Youden-index | 0.8 | 2.100 | 0.886 | 0.931 | (0.018, 0.777) |
| Youden-index | 0.9 | 2.124 | 0.935 | 0.964 | (0.017, 0.873) |

11.10.3.5.2 FROC

FIGURE 11.26: High performance varying \(\nu\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\nu\) are: top-left \(\nu = 0.6\), top-right \(\nu = 0.7\), bottom-left \(\nu = 0.8\) and bottom-right \(\nu = 0.9\).

11.10.3.5.3 wAFROC

FIGURE 11.27: High performance varying \(\nu\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\nu\) are: top-left \(\nu = 0.6\), top-right \(\nu = 0.7\), bottom-left \(\nu = 0.8\) and bottom-right \(\nu = 0.9\).

11.10.3.5.4 ROC

FIGURE 11.28: High performance varying \(\nu\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\nu\) are: top-left \(\nu = 0.6\), top-right \(\nu = 0.7\), bottom-left \(\nu = 0.8\) and bottom-right \(\nu = 0.9\).

11.10.3.6 Low performance varying \(\nu\)

muArr <- c(1)

lambdaArr <- c(10)

nuArr <- c(0.1, 0.2, 0.3, 0.4)

11.10.3.6.1 Summary table

| FOM | \(\nu\) | \(\zeta_1\) | \(\text{wAFROC}_\text{AUC}\) | \(\text{ROC}_\text{AUC}\) | \(\left( \text{NLF}, \text{LLF}\right)\) |
|---|---|---|---|---|---|
| \(\text{wAFROC}_\text{AUC}\) | 0.1 | 5.000 | 0.500 | 0.500 | (0.000, 0.000) |
| \(\text{wAFROC}_\text{AUC}\) | 0.2 | 4.412 | 0.500 | 0.500 | (0.000, 0.000) |
| \(\text{wAFROC}_\text{AUC}\) | 0.3 | 4.006 | 0.500 | 0.500 | (0.000, 0.000) |
| \(\text{wAFROC}_\text{AUC}\) | 0.4 | 3.718 | 0.500 | 0.501 | (0.001, 0.001) |
| Youden-index | 0.1 | 1.563 | 0.292 | 0.514 | (0.590, 0.029) |
| Youden-index | 0.2 | 1.568 | 0.309 | 0.528 | (0.585, 0.057) |
| Youden-index | 0.3 | 1.572 | 0.325 | 0.542 | (0.580, 0.085) |
| Youden-index | 0.4 | 1.577 | 0.342 | 0.556 | (0.574, 0.113) |
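The large \(\text{wAFROC}_\text{AUC}\)-optimal thresholds in this table can be understood from a stationarity condition. The following is a sketch of a derivation, not a result quoted from the chapter, and it rests on these assumptions: the RSM predictions \(\text{FPF}(\zeta) = 1 - e^{-\lambda \Phi(-\zeta)}\) and \(\text{wLLF}(\zeta) = \nu \Phi(\mu - \zeta)\) (lesion weights summing to one on each diseased case), and a wAFROC curve completed by a straight line from its end-point to \((1, 1)\). Writing \(\phi\) for the standard normal density and setting the derivative of the AUC with respect to \(\zeta_1\) to zero gives

\[
\lambda \, \phi(\zeta_1) \left[ 1 - \nu \, \Phi(\mu - \zeta_1) \right] = \nu \, \phi(\mu - \zeta_1).
\]

When LLF is negligible the bracket is close to one, and since \(\phi(\mu - \zeta_1) / \phi(\zeta_1) = \exp(\mu \zeta_1 - \mu^2 / 2)\) the condition reduces to

\[
\zeta_1 \approx \frac{\mu}{2} + \frac{1}{\mu} \ln \frac{\lambda}{\nu}.
\]

For \(\mu = 1\) and \(\lambda = 10\) this approximation gives 4.412, 4.007 and 3.719 for \(\nu = 0.2\), 0.3 and 0.4, within 0.001 of the tabulated 4.412, 4.006 and 3.718. For \(\nu = 0.1\) it gives about 5.105, which exceeds the tabulated 5.000; one plausible reading, stated here as an assumption, is that the threshold search was bounded above at 5.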

11.10.3.6.2 FROC

FIGURE 11.29: Low performance varying \(\nu\) FROC plots with superimposed operating points. The red dot corresponds to \(\text{wAFROC}_\text{AUC}\) optimization and the black dot to Youden-index optimization. The values of \(\nu\) are: top-left \(\nu = 0.1\), top-right \(\nu = 0.2\), bottom-left \(\nu = 0.3\) and bottom-right \(\nu = 0.4\).

11.10.3.6.3 wAFROC

FIGURE 11.30: Low performance varying \(\nu\) wAFROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\nu\) are: top-left \(\nu = 0.1\), top-right \(\nu = 0.2\), bottom-left \(\nu = 0.3\) and bottom-right \(\nu = 0.4\).

11.10.3.6.4 ROC

FIGURE 11.31: Low performance varying \(\nu\) ROC plots for the two optimization methods with superimposed operating points. The color coding is as in previous figures. The values of \(\nu\) are: top-left \(\nu = 0.1\), top-right \(\nu = 0.2\), bottom-left \(\nu = 0.3\) and bottom-right \(\nu = 0.4\).